
⚡ “Imagine a machine learning model so powerful, it could train a computer to master video games from scratch. Welcome to the world of Deep Q-Networks (DQN), where machines not only learn from their mistakes, but remember and adapt!”

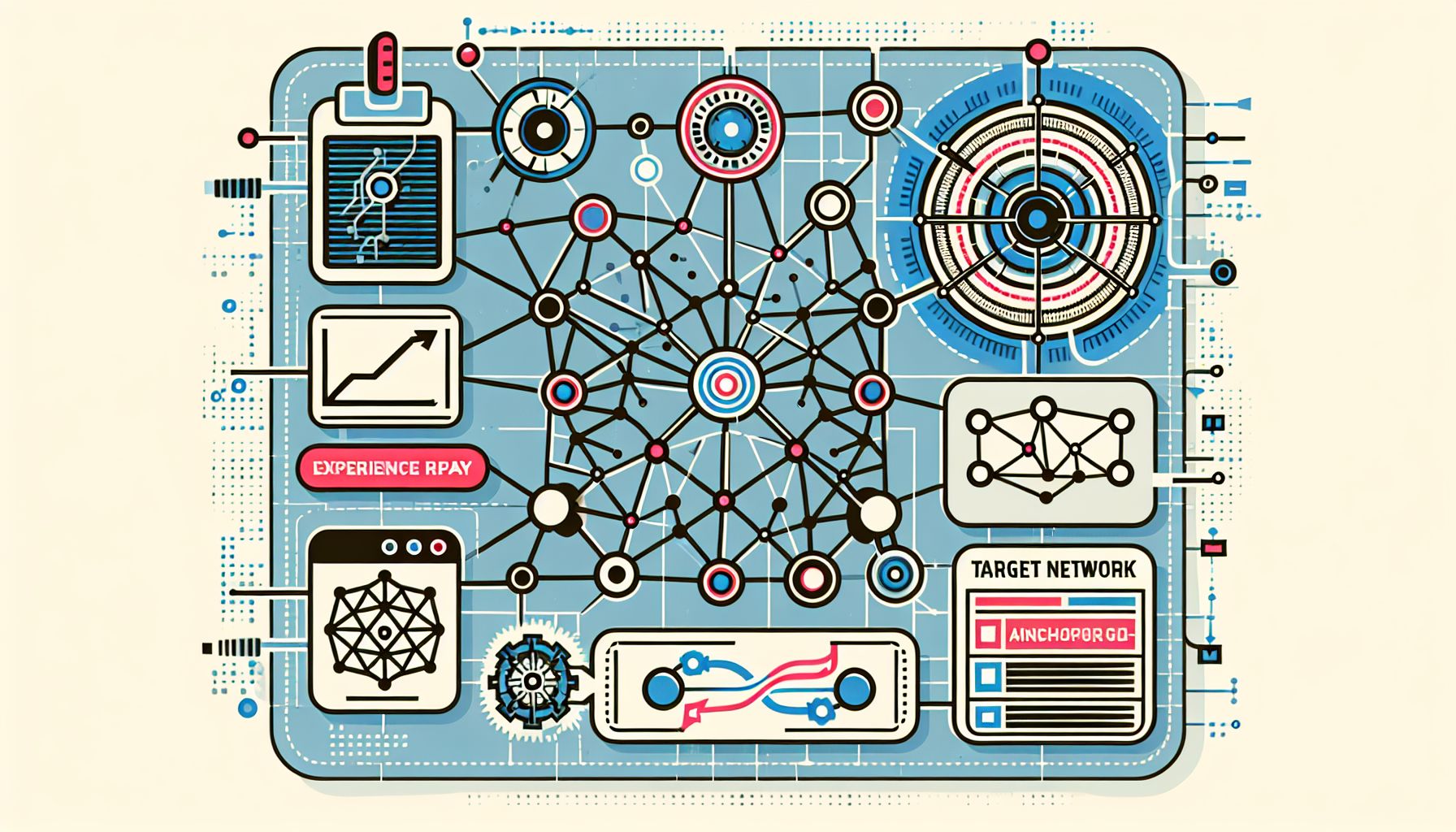

Hello there, fellow AI enthusiasts!👋 Today, we’re going to take a thrilling plunge into the depths of the Deep Q-Network (DQN) algorithm. This isn’t just your run-of-the-mill Q-learning algorithm; it’s Q-learning on steroids! 💪 If that isn’t enough, we’re also adding a pinch of Experience Replay and a dash of Target Network to our AI cocktail.🍹 Sounds exciting? Let’s dive right in!

Reinforcement Learning (RL) and Deep Learning (DL) are two of the most powerful tools in the AI toolbox. But what happens when you combine them? You get Deep Q-Networks, an algorithm that fuses the best of RL and DL, making it a formidable force in the AI world. DQN, armed with features like Experience Replay and Target Networks, has been making waves, and it’s time we understand why.

🧠 Understanding Deep Q-Networks (DQN)

"Journeying Through the Neural Pathways of DQN"

Deep Q-Networks are a type of Q-Learning algorithm, but they have an ace up their sleeve: they use a Neural Network to estimate the Q-value function. The “Deep” in DQN comes from the use of Deep Neural Networks. Neural Networks are excellent function approximators, which makes them perfect for this job. In traditional Q-Learning, we maintain a table (Q-table) to store the Q-values for each state-action pair. However, when the number of states gets large (think of a game like chess or Go, or of raw screen pixels), maintaining a table becomes practically impossible. This is where DQN shines. It uses a Neural Network to approximate the Q-value function: the network takes a state as input and outputs one Q-value per action, so it can generalize to states it has never seen instead of storing an entry for each one. That lets it handle problems with very large state spaces and a discrete set of actions. However, directly applying Neural Networks to Q-Learning can lead to instability and divergence. So, we use two key techniques to overcome these issues: Experience Replay and Target Network.
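To make the "network instead of a table" idea concrete, here is a minimal sketch in plain Python. Everything in it is an assumption for illustration: the `TinyQNetwork` class, the `greedy_action` helper, the layer sizes, and the random weights are hypothetical and untrained, and a real DQN would use a deep learning library and a much larger network.

```python
import random


class TinyQNetwork:
    """A one-hidden-layer network mapping a state vector to one Q-value per action."""

    def __init__(self, state_dim, hidden_dim, num_actions, seed=0):
        rng = random.Random(seed)
        # Small random weights; biases start at zero (untrained, illustration only).
        self.w1 = [[rng.uniform(-0.1, 0.1) for _ in range(state_dim)]
                   for _ in range(hidden_dim)]
        self.b1 = [0.0] * hidden_dim
        self.w2 = [[rng.uniform(-0.1, 0.1) for _ in range(hidden_dim)]
                   for _ in range(num_actions)]
        self.b2 = [0.0] * num_actions

    def q_values(self, state):
        # Hidden layer with ReLU activation.
        hidden = [max(0.0, sum(w * x for w, x in zip(row, state)) + b)
                  for row, b in zip(self.w1, self.b1)]
        # Linear output layer: one estimated Q-value per action.
        return [sum(w * h for w, h in zip(row, hidden)) + b
                for row, b in zip(self.w2, self.b2)]


def greedy_action(q_net, state):
    """Pick the index of the action with the highest estimated Q-value."""
    q = q_net.q_values(state)
    return max(range(len(q)), key=q.__getitem__)


q_net = TinyQNetwork(state_dim=4, hidden_dim=8, num_actions=2)
state = [0.1, -0.2, 0.05, 0.0]      # a continuous state that a Q-table could not enumerate
print(q_net.q_values(state))         # two Q-value estimates, one per action
print(greedy_action(q_net, state))   # index of the action the network currently prefers
```

The point of the design is that memory use depends on the number of weights, not on the number of possible states, which is what makes large state spaces tractable.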

🔄 Experience Replay

Imagine trying to learn a new sport, but you only get to play one game, and your performance in that one game decides your skill level. Doesn’t sound fair, right? It’s the same with our DQN. If we train it on experiences in the order they occur, each experience is used once and then thrown away, and consecutive experiences are highly correlated, so it might not learn optimally. This is where Experience Replay comes to the rescue.

Experience Replay involves storing the agent’s experiences at each time step in a data set called the replay buffer. This stored experience is a tuple of the state, action, reward, next state, and done flag (s, a, r, s', d). Instead of learning from consecutive batches of experiences, the agent randomly samples a batch of experiences from the replay buffer to learn from. This random sampling breaks the correlation between consecutive experiences, leading to a more stable learning process.

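Here’s some quick Pythonic pseudo-code for Experience Replay:

    import random
    from collections import deque

    # Initialize replay memory D to capacity N
    replay_buffer = deque(maxlen=N)

    # Store transition (s, a, r, s', d) in memory D
    # (s' is written s_next because s' is not a valid Python name;
    #  N, batch_size, the transition values, and learn() are placeholders)
    replay_buffer.append((s, a, r, s_next, d))

    # Sample a random mini-batch from D once enough transitions are stored
    if len(replay_buffer) >= batch_size:
        mini_batch = random.sample(replay_buffer, batch_size)
        # Learn from the mini-batch
        learn(mini_batch)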
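For readers who want something they can run as-is, the same idea can be wrapped in a small class. This is a minimal sketch, not a reference implementation: the `ReplayBuffer` name, its `push` and `sample` methods, and the toy integer transitions are all assumptions made for illustration.

```python
import random
from collections import deque


class ReplayBuffer:
    """Fixed-capacity store of (state, action, reward, next_state, done) transitions."""

    def __init__(self, capacity):
        # A deque with maxlen silently evicts the oldest transition when full.
        self.memory = deque(maxlen=capacity)

    def push(self, state, action, reward, next_state, done):
        self.memory.append((state, action, reward, next_state, done))

    def sample(self, batch_size):
        # Uniform sampling without replacement breaks the temporal correlation
        # between consecutive transitions.
        batch = random.sample(list(self.memory), batch_size)
        # Regroup the list of transitions into one tuple per field.
        states, actions, rewards, next_states, dones = zip(*batch)
        return states, actions, rewards, next_states, dones

    def __len__(self):
        return len(self.memory)


buffer = ReplayBuffer(capacity=5)
for t in range(8):                     # push 8 toy transitions into 5 slots
    buffer.push(t, t % 2, 1.0, t + 1, t == 7)

print(len(buffer))                     # 5: the three oldest transitions were evicted
states, actions, rewards, next_states, dones = buffer.sample(batch_size=3)
print(states)                          # three distinct states drawn from 3..7
```

Returning one tuple per field (rather than a list of transitions) is a convenience choice: it makes it easy to turn each field into a batch array before a training step.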

🎯 Target Network

If you’ve ever tried shooting at a moving target, you know how difficult it is to aim accurately. In DQN, we encounter a similar problem. The training targets are computed from the same network we are updating, so every update shifts the targets too, making them a moving target. This can lead to unstable learning and oscillation. Enter the Target Network. Think of the Target Network as a clone of our original network, but with a twist - it’s not updated as frequently as the original network. Instead, it’s updated either periodically, by copying the weights from the original network, or slowly and continuously, by a soft update that blends in a small fraction of the original network’s weights at each step. This keeps the target network relatively stable, providing a nearly fixed target for our DQN to aim at.

Here’s some Pythonic pseudo-code for the Target Network:

    import copy

    # Initialize target network with the same weights as the original network
    # (network, steps, and C are placeholders)
    target_network = copy.deepcopy(network)

    # Every C steps, reset the target network to match the original network
    if steps % C == 0:
        target_network = copy.deepcopy(network)
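The sketch below puts the two update styles side by side and shows where the target network is actually used: in computing the training target `r + gamma * max Q_target(s', a')`. It is only an illustration under stated assumptions. Parameters are represented as a plain dict of lists, and the helper names `hard_update`, `soft_update`, and `td_targets`, along with the `tau` and `gamma` values, are hypothetical choices rather than part of any library.

```python
import copy


def td_targets(rewards, next_q_values, dones, gamma=0.99):
    """Compute y = r + gamma * max_a' Q_target(s', a'), with y = r at terminal states.

    next_q_values holds the target network's Q-values for each next state.
    """
    return [r if d else r + gamma * max(q_next)
            for r, q_next, d in zip(rewards, next_q_values, dones)]


def hard_update(online_params):
    """Hard update: the target becomes an exact, independent copy of the online parameters."""
    return copy.deepcopy(online_params)


def soft_update(target_params, online_params, tau=0.005):
    """Soft update: move each target parameter a fraction tau toward the online one."""
    return {name: [(1.0 - tau) * t + tau * o
                   for t, o in zip(target_params[name], online_params[name])]
            for name in target_params}


online = {"w": [1.0, 2.0], "b": [0.5]}
target = hard_update(online)            # start in sync

online["w"][0] = 3.0                    # pretend a gradient step changed the online network
print(target["w"][0])                   # 1.0: the target is unaffected until the next sync

target = soft_update(target, online, tau=0.5)
print(target["w"][0])                   # 2.0: halfway between 1.0 and 3.0

print(td_targets(rewards=[1.0, 0.0],
                 next_q_values=[[0.5, 2.0], [4.0, 1.0]],
                 dones=[False, True],
                 gamma=0.5))            # [2.0, 0.0]
```

A large `tau` such as 0.5 is used here only to make the arithmetic easy to follow; soft updates in practice typically use a much smaller value so the target drifts slowly.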

🎮 DQN in Action: Playing Atari Games

In 2013, DeepMind demonstrated the power of DQN by teaching it to play seven Atari 2600 games. The DQN was given only the screen pixels as input and the game score as its reward signal, and was not told anything about the rules of the games. Despite this, DQN outperformed earlier approaches on six of the seven games and surpassed a human expert on three of them. In 2015, a follow-up published in Nature extended the evaluation to 49 games, where DQN achieved more than 75% of the human score on more than half of them. This was a significant achievement and demonstrated the potential of DQN in handling high-dimensional sensory inputs, something traditional RL algorithms struggled with. It also opened the gates for more complex games and problems to be tackled using DQN and its variants.

🧭 Conclusion

Deep Q-Networks, with their potent combination of Deep Learning and Reinforcement Learning, have become a cornerstone in the field of AI. The addition of Experience Replay and a Target Network mitigates the instability and divergence issues, making DQN a powerful and far more stable learning algorithm. We’ve only scratched the surface of the immense potential DQN possesses. As we continue to push the boundaries of AI, DQN and its variants will play an essential role in tackling increasingly complex problems. So, the next time you’re lost in the vast maze of AI algorithms, remember, DQN might just be the torchlight you need to navigate your way! 🗺💡
