
⚡ “Ever wonder what makes Google Translate so accurate or powers the genius behind chatbots and voice assistants? Welcome to the complex yet captivating world of the Transformer model!”

Let’s face it: deep learning can be a bit of a beast. With its complex algorithms and intricate models, it can leave even the most seasoned data scientist lost in a maze of mathematical equations. But here’s the good news: the Transformer model, one of the most influential models in Natural Language Processing (NLP), isn’t as intimidating as it might first seem. Actually, it’s pretty cool once you get to know it. In this post, we’ll break down the Transformer’s architecture in a way that’s digestible even if you’re just starting out with machine learning. We’ll dive into its components, explore how they interact, and take a peek at why it’s such a game-changer in NLP. So buckle up, sip your favorite coffee ☕, and let’s get started!

🎯 The Basic Concept: What is the Transformer Model?

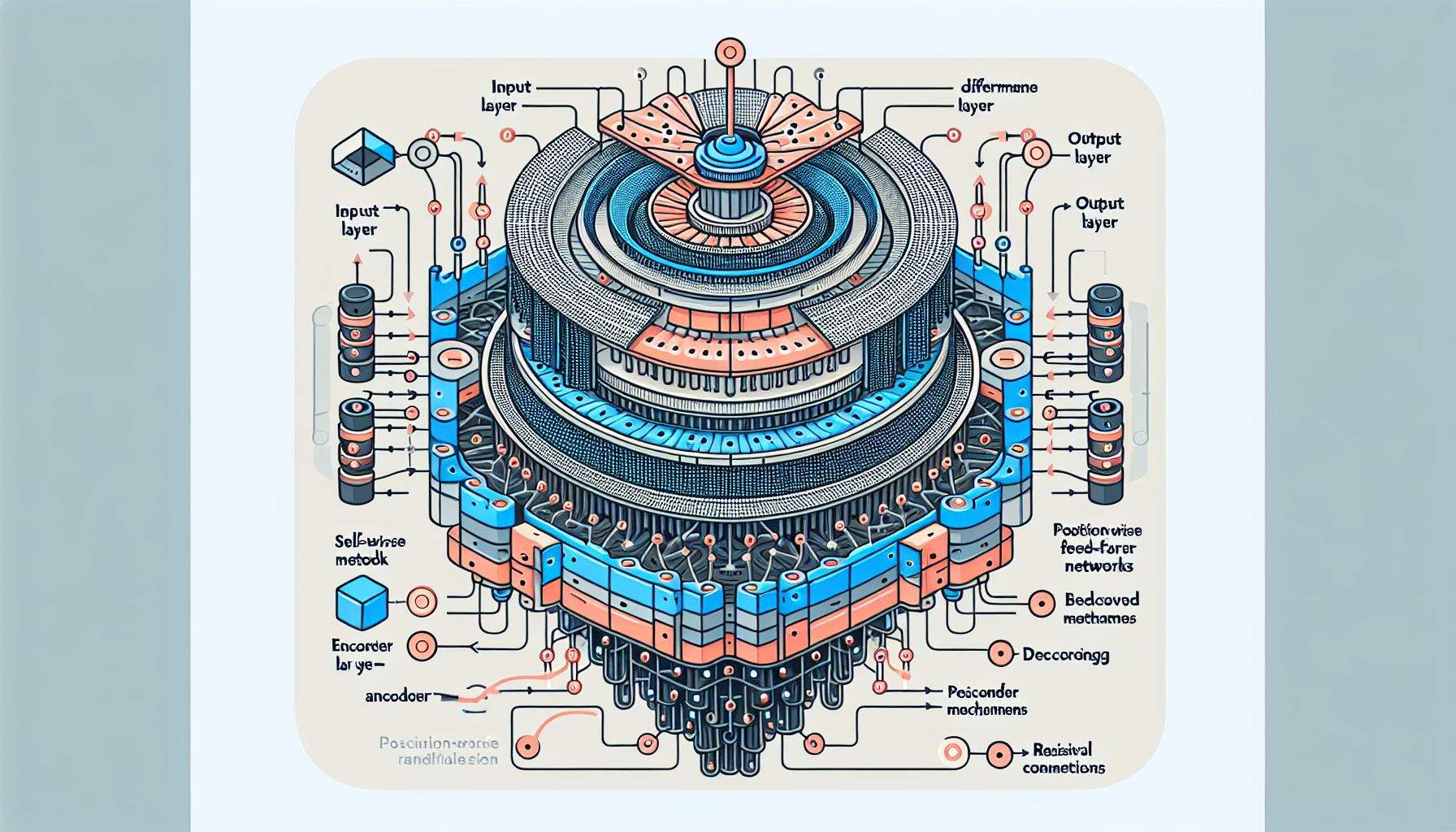

"Deconstructing the Blueprint of a Transformer Model."

Let’s start with the basics. The Transformer model, introduced by Vaswani et al. in the 2017 paper ‘Attention Is All You Need’, is a neural network architecture for processing sequential data. It’s most commonly associated with NLP tasks like translation, summarization, and sentiment analysis. The Transformer’s claim to fame is its use of attention mechanisms, which let it weigh different parts of the input sequence when producing each part of the output sequence. 🔍 This is a big deal, because it means the model can capture long-distance dependencies between words in a sentence, something that was a real challenge for earlier recurrent models. Take a sentence like “The cat, which had been missing for days, finally came home.” A recurrent model reads the sentence one word at a time, so by the time it reaches “came home” it must have carried the information about “the cat” through every word in between. The Transformer, on the other hand, uses its attention mechanism to link “came home” directly with “the cat” in a single step, no matter how far apart they are in the sentence. It’s like a superpower for dealing with context!

🔍 Inside the Transformer: The Architecture

Now that we have a basic understanding of what the Transformer model is, let’s dive deeper into its architecture. The Transformer model is essentially made up of two parts: the Encoder and the Decoder. Each of these parts is a stack of identical layers, but they perform different tasks in the sequence-to-sequence process.

Encoder

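The Encoder’s job is to take the input sequence (like a sentence in English) and turn it into a series of vectors: numerical representations of each word that also capture its context. Each word first becomes an embedding vector, with a positional encoding added so the model knows the word order (attention by itself has no notion of order). Each Encoder layer then refines these vectors in two main steps: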

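**Self-Attention**

This is where the Transformer’s attention mechanism comes into play. For every word in the input sequence, the Encoder calculates a score against every word in the sequence (including itself), reflecting how much attention the first word should pay to the second. In the original paper, each word vector is projected into a query, a key, and a value; a score is the dot product of one word’s query with another word’s key, divided by the square root of the key dimension, and a softmax turns each word’s scores into weights that sum to 1. The word’s new vector is the weighted average of all the value vectors, which is how context flows into each word’s representation.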
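
To make the scoring step concrete, here is a minimal single-head sketch of scaled dot-product self-attention in plain Python. It follows the query/key/value formulation from the original paper, but the function names (`softmax`, `matvec`, `self_attention`), the identity projection matrices, and the toy word vectors are illustrative assumptions; real implementations use learned weights, multiple attention heads, and batched matrix libraries.

```python
import math


def softmax(xs):
    # Subtract the max before exponentiating for numerical stability.
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def matvec(W, x):
    # Multiply matrix W (a list of rows) by vector x.
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def self_attention(X, Wq, Wk, Wv):
    """Single-head scaled dot-product self-attention over word vectors X."""
    Q = [matvec(Wq, x) for x in X]  # queries: what each word is looking for
    K = [matvec(Wk, x) for x in X]  # keys: what each word is matched on
    V = [matvec(Wv, x) for x in X]  # values: the content that gets mixed
    d_k = len(K[0])
    d_v = len(V[0])
    outputs, weights = [], []
    for q in Q:
        # One score per (this word, other word) pair, scaled by sqrt(d_k).
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d_k) for k in K]
        w = softmax(scores)  # this word's attention weights, summing to 1
        weights.append(w)
        # New vector = attention-weighted average of all value vectors.
        outputs.append([sum(wj * v[i] for wj, v in zip(w, V)) for i in range(d_v)])
    return outputs, weights


# Toy example: three 2-d "word" vectors, identity projections (made-up numbers).
X = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
identity = [[1.0, 0.0], [0.0, 1.0]]
out, attn = self_attention(X, identity, identity, identity)
for row in attn:
    print([round(w, 3) for w in row])
```

In the toy run, the third word (`[1.0, 1.0]`) gives itself the largest weight, because its query matches its own key best, and it splits the rest evenly between the other two words.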

**Feed-Forward Neural Network**

After the self-attention step, each word’s vector goes through a simple feed-forward neural network (that’s just a fancy way of saying it has layers of neurons that pass information forward without looping back). The same network is applied to every position separately, further transforming each word’s context-enriched vector. Each of the two steps is wrapped in a residual connection followed by layer normalization, which helps deep stacks train stably. The Encoder stacks several of these layers (six in the original paper): each layer takes the previous layer’s output as its input, so deeper layers can build on what earlier ones have learned, and the output of the final layer is the Encoder’s output.
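
Here is a rough sketch of one Encoder layer and of how layers stack, assuming the post-norm layout from the original paper (residual connection, then layer normalization, around each step) and its feed-forward form max(0, xW1 + b1)W2 + b2. To keep the block short, self-attention is replaced by a hypothetical `mean_attention` stand-in that simply averages all word vectors; every name and weight here is made up for illustration.

```python
import math


def layer_norm(x, eps=1e-6):
    # Normalize one vector to zero mean, unit variance (learned gain/bias omitted).
    mean = sum(x) / len(x)
    var = sum((v - mean) ** 2 for v in x) / len(x)
    return [(v - mean) / math.sqrt(var + eps) for v in x]


def feed_forward(x, W1, b1, W2, b2):
    # Position-wise FFN on ONE word vector: max(0, x W1 + b1) W2 + b2.
    hidden = [max(0.0, sum(xi * W1[i][j] for i, xi in enumerate(x)) + b1[j])
              for j in range(len(b1))]
    return [sum(h * W2[j][k] for j, h in enumerate(hidden)) + b2[k]
            for k in range(len(b2))]


def encoder_layer(X, attend, ffn):
    # Step 1: self-attention, then residual connection + layer norm.
    A = attend(X)
    X = [layer_norm([a + x for a, x in zip(ai, xi)]) for ai, xi in zip(A, X)]
    # Step 2: the same FFN applied to each position separately,
    # again followed by residual connection + layer norm.
    return [layer_norm([f + x for f, x in zip(ffn(xi), xi)]) for xi in X]


def encoder(X, layers):
    # Layers are stacked: each layer's output is the next layer's input,
    # and the last layer's output is the Encoder's output (nothing is summed).
    for attend, ffn in layers:
        X = encoder_layer(X, attend, ffn)
    return X


def mean_attention(X):
    # Hypothetical stand-in for self-attention: every word attends equally to all.
    n, d = len(X), len(X[0])
    return [[sum(x[i] for x in X) / n for i in range(d)] for _ in X]


# Made-up weights: d_model = 2, d_ff = 4.
W1 = [[0.5, -0.5, 1.0, 0.0], [0.0, 1.0, -1.0, 0.5]]      # d_model x d_ff
W2 = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5], [-0.5, 1.0]]  # d_ff x d_model


def toy_ffn(x):
    return feed_forward(x, W1, [0.0] * 4, W2, [0.0] * 2)


X = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
# Two layers; for brevity they share weights, whereas real layers each have their own.
out = encoder(X, [(mean_attention, toy_ffn), (mean_attention, toy_ffn)])
print(out)
```

The `encoder` loop makes the stacking explicit: nothing is added across layers, and only the final layer’s vectors are handed on to the Decoder.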

Decoder

Once the Encoder has processed the input sequence, it’s time for the Decoder to take over. The Decoder is also a stack of identical layers, and its job is to generate the output sequence (like a sentence in French) one word at a time, using the Encoder’s vectors together with the words it has produced so far. Each Decoder layer does this in three main steps:

**Masked Self-Attention**

Like the Encoder, the Decoder uses self-attention, here over the output words generated so far. To prevent the Decoder from “cheating” by looking at future words in the output sequence, a mask is applied so that the attention weight is zero for every position after the current one. (In practice, the scores for those positions are set to negative infinity before the softmax.)
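
A small sketch of that mask, assuming the approach described in the original paper: scores for future positions are set to negative infinity before the softmax, which drives their weights to exactly zero. The function name `causal_attention_weights` and the all-zero toy scores are hypothetical.

```python
import math


def softmax(xs):
    # exp(-inf) is 0.0, so masked positions receive exactly zero weight.
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def causal_attention_weights(scores):
    """Turn an n x n matrix of raw Decoder self-attention scores into masked weights.

    Row i may attend only to positions 0..i; later positions are set to -inf
    before the softmax, so the model cannot peek at words it hasn't produced yet.
    """
    n = len(scores)
    masked = [[scores[i][j] if j <= i else -math.inf for j in range(n)]
              for i in range(n)]
    return [softmax(row) for row in masked]


# With all-equal raw scores, each position spreads its attention evenly
# over itself and the positions before it.
weights = causal_attention_weights([[0.0] * 4 for _ in range(4)])
for row in weights:
    print([round(w, 2) for w in row])
```

With equal raw scores, row i gives weight 1/(i+1) to each of positions 0 through i and 0 to everything after.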

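**Encoder-Decoder Attention**

This step helps the Decoder focus on the relevant parts of the input sequence. It works like self-attention, except that the queries come from the Decoder while the keys and values come from the Encoder’s output. In other words, instead of attending over its own sequence, each Decoder position looks across the encoded input sentence to decide which source words matter for the word it is producing.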
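
A simplified sketch of encoder-decoder attention: queries come from the Decoder, while keys and values both come from the Encoder output. Unlike the original model, it skips the learned query/key/value projections and uses a single head; `cross_attention` and the toy vectors are hypothetical.

```python
import math


def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def cross_attention(decoder_states, encoder_outputs):
    """Encoder-decoder attention, simplified: no learned projections, one head.

    Queries come from the Decoder; keys AND values both come from the Encoder output.
    Returns one context vector per Decoder position, plus the attention weights.
    """
    d_k = len(encoder_outputs[0])
    contexts, weights = [], []
    for q in decoder_states:
        # Score this Decoder position against every encoded source word.
        w = softmax([dot(q, k) / math.sqrt(d_k) for k in encoder_outputs])
        weights.append(w)
        # Context = weighted average of the encoded source words.
        contexts.append([sum(wj * v[i] for wj, v in zip(w, encoder_outputs))
                         for i in range(d_k)])
    return contexts, weights


# Made-up vectors: four encoded source words, two Decoder positions.
encoder_outputs = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0], [0.5, 0.5, 0.0]]
decoder_states = [[0.0, 0.0, 3.0], [3.0, 0.0, 0.0]]
contexts, weights = cross_attention(decoder_states, encoder_outputs)
for w in weights:
    print("most attended source position:", max(range(len(w)), key=w.__getitem__))
```

Each Decoder position gets its own weighting over the four source positions; in the toy run, the first Decoder query lines up best with source position 2 and the second with position 0.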

**Feed-Forward Neural Network**

The third step is the same as in the Encoder: each word’s vector goes through a position-wise feed-forward neural network. After the final Decoder layer, a linear layer followed by a softmax turns each vector into a probability for every word in the output vocabulary, and the next word of the output sequence is chosen from that distribution. Now, that’s a lot of attention, isn’t it? But it’s precisely this mechanism that allows the Transformer model to understand the context and relationships between words, even in really long sentences.
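
In the original architecture, the Decoder stack’s final vectors pass through a learned linear layer and a softmax to produce one probability per vocabulary word. Below is a minimal sketch of that last step, assuming greedy selection of the most likely word. The vocabulary, the output matrix `W_out`, and the Decoder vector `h` are all made up, and greedy choice is only one decoding strategy (the original paper used beam search).

```python
import math


def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def next_word_probabilities(h, W_out, vocab):
    """Project a final Decoder vector h to one logit per vocabulary word, then softmax."""
    logits = [sum(hi * W_out[i][j] for i, hi in enumerate(h)) for j in range(len(vocab))]
    return dict(zip(vocab, softmax(logits)))


vocab = ["<eos>", "le", "chat", "est", "revenu"]  # hypothetical toy vocabulary
W_out = [[0.1, 2.0, 0.3, -1.0, 0.0],               # made-up d_model x vocab_size weights
         [0.0, -0.5, 1.5, 0.2, 0.4]]
h = [1.0, 0.2]                                      # made-up final Decoder vector

probs = next_word_probabilities(h, W_out, vocab)
best = max(probs, key=probs.get)  # greedy choice: the single most likely word
print(best, round(probs[best], 3))
```

The chosen word would then be appended to the output so far, and the Decoder would run again to predict the following word.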

🚀 Why the Transformer Model is a Game-Changer

The Transformer model is a big deal in NLP for a few reasons:

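**It handles long-range dependencies**

Thanks to its attention mechanism, any two positions in a sequence are connected directly, in a single step, rather than through a long chain of recurrent steps. That makes relationships between distant words much easier to learn, which is especially useful for tasks like translation and summarization. The trade-off is that self-attention compares every position with every other one, so its compute and memory grow quadratically with sequence length.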
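
The original paper compares layer types along these lines: passing information between two positions takes a number of sequential steps that grows with their distance in a recurrent layer but only one step in self-attention, while self-attention computes a score for every pair of positions. The hypothetical helpers below just do that arithmetic for a few sequence lengths.

```python
def sequential_steps(i, j, model):
    """Hops needed for information at position i to reach position j."""
    if i == j:
        return 0
    if model == "rnn":
        return abs(j - i)  # the hidden state is handed along one position at a time
    if model == "self_attention":
        return 1           # every position attends to every other position directly
    raise ValueError(f"unknown model: {model}")


def attention_scores(n):
    """Query-key scores per head, per layer: one for every (position, position) pair."""
    return n * n


for n in (10, 100, 1000):
    print(f"n={n:>4}: rnn={sequential_steps(0, n - 1, 'rnn'):>3} steps, "
          f"attention={sequential_steps(0, n - 1, 'self_attention')} step, "
          f"{attention_scores(n):>9,} scores")
```

The short path is what makes distant words easy to relate; the n × n score count is why very long inputs get expensive.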

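**It’s parallelizable**

Unlike Recurrent Neural Networks (RNNs), which process sequences one element at a time, the Transformer can process all elements of a sequence at once during training. Because that work boils down to large matrix multiplications, it runs efficiently on GPUs, so Transformers train much faster than comparable recurrent models. (Generating text is still done one word at a time.)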
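
The difference comes down to dependencies. In this toy sketch (scalar values and hypothetical functions `rnn_states` and `attention_output`, not a real model), each RNN step needs the previous hidden state, while each attention output reads only the inputs, so positions can be handed to workers in any order and still give identical results.

```python
import math
from concurrent.futures import ThreadPoolExecutor


def rnn_states(xs, w=0.5, u=1.0):
    # Toy scalar RNN: h_t = tanh(w * h_{t-1} + u * x_t).
    # Step t needs h_{t-1}, so the steps must run one after another.
    h, states = 0.0, []
    for x in xs:
        h = math.tanh(w * h + u * x)
        states.append(h)
    return states


def attention_output(t, xs):
    # Toy scalar self-attention for position t: softmax over scores x_t * x_j,
    # then a weighted average of the inputs. It reads only xs, never other outputs.
    scores = [xs[t] * xj for xj in xs]
    m = max(scores)
    ws = [math.exp(s - m) for s in scores]
    return sum(w * xj for w, xj in zip(ws, xs)) / sum(ws)


xs = [0.5, -1.0, 2.0, 0.1]
sequential = rnn_states(xs)

# Every attention position can be computed independently, so we can hand them to
# a pool of workers. (Python threads give no real speedup here; the point is the
# dependency structure, which GPUs exploit through batched matrix multiplication.)
with ThreadPoolExecutor() as pool:
    parallel = list(pool.map(lambda t: attention_output(t, xs), range(len(xs))))
serial = [attention_output(t, xs) for t in range(len(xs))]
print(parallel == serial)
```

By contrast, computing the third RNN state without first computing the two before it would give a different (wrong) value.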

**It’s a foundation for other models**

The Transformer model serves as the foundation for several other influential models in NLP, such as BERT, GPT-2, and T5. These models have pushed the boundaries of what’s possible in tasks like language generation and question answering.

🧭 Conclusion

The Transformer model is a fascinating piece of architecture that has significantly shaped the field of NLP. Its attention mechanism enables it to understand context and dependencies in a way that was previously challenging, making it a powerful tool for many applications. Understanding the Transformer model isn’t a walk in the park, but hopefully, this post has helped you get a grip on its architecture and why it’s such a big deal. Remember, as with anything in deep learning, the key is to keep exploring, keep experimenting, and keep learning! Whether you’re a complete beginner or an experienced data scientist, getting to know the Transformer model is definitely a journey worth embarking upon. So put on your explorer’s hat, and dive into the world of Transformer models. Happy learning!
