
⚡ “What if Pac-Man could teach itself to gobble up those dots without your help? Dive into the world of reinforcement learning, where algorithms learn and adapt, guided by the Gridworld problem!”

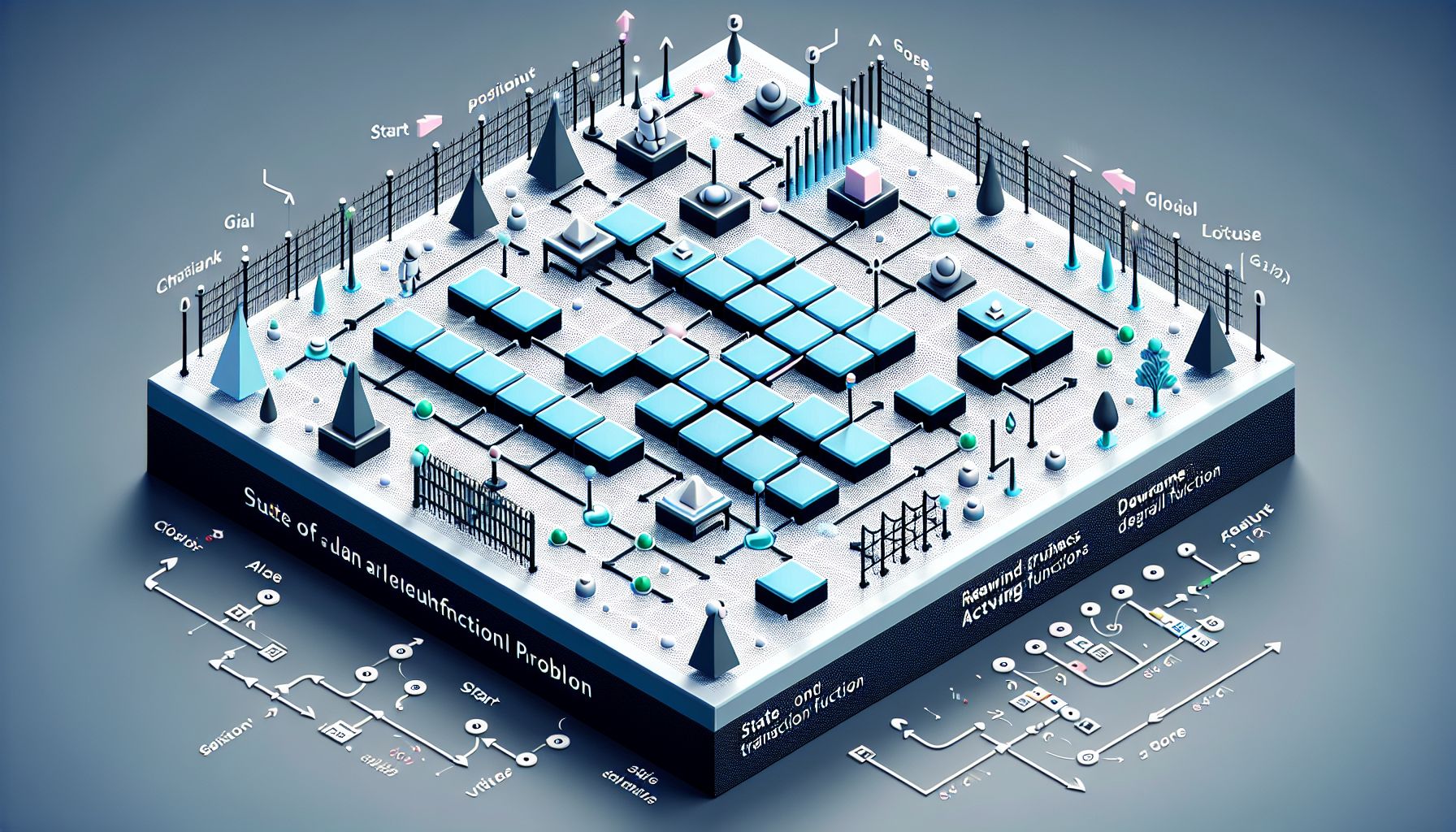

Welcome, adventurers! Today, we’re embarking on a thrilling journey through the labyrinth of reinforcement learning, guided by the ever-enlightening torch of the Gridworld problem. Whether you’re a seasoned reinforcement learning warrior or a novice apprentice, Gridworld offers a fundamental understanding of this complex domain. So, fasten your seatbelts as we delve into the mysteries of agents, states, actions, and rewards.

Reinforcement learning (RL) is a dynamic field of machine learning where an agent learns to make decisions by interacting with its environment. It’s like teaching a robotic dog to fetch a ball: you reward it when it does well (brings the ball back) and don’t when it doesn’t (chases its tail instead). Over time, the dog learns the optimal behavior (fetching the ball) by associating it with the positive reward.

This post will use the Gridworld problem to illustrate these basic concepts of reinforcement learning. We’ll explore the mechanics of the agent’s decision-making process, understand how it navigates through the grid to reach its goal, and learn how reinforcement learning algorithms can help the agent improve its performance. So let’s dive in!

🧩 Understanding the Gridworld Problem

"Cracking the Gridworld: A Reinforcement Learning Journey"

🧠 Think of Gridworld as a simple, yet powerful, problem used to illustrate the basics of RL. Picture a grid, similar to a chessboard, with each square representing a state. An agent (let’s say our robotic dog 🐶) starts at one square and must find its way to the goal square, possibly avoiding some obstacle squares. The agent can move in four directions: up, down, left, or right. Each move is an action, and moving from one square to another transitions the agent to a new state. The agent gets a reward (or penalty) for each action, and over time it learns to take the actions that maximize its total reward. In the Gridworld problem, the agent’s goal is to find the shortest path to the goal square while avoiding the obstacles. 🔍 Interestingly, Gridworld is a textbook example of a Markov Decision Process (MDP), a mathematical model often used in reinforcement learning to describe the environment a learning agent interacts with.
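To make the setup concrete, here is a minimal Python sketch of a Gridworld environment. The 4×4 size, the start, goal, and obstacle squares, and the reward values (+10 for reaching the goal, -1 per ordinary move, -5 for bumping into a wall or obstacle) are illustrative assumptions rather than a standard benchmark, and the class and method names are hypothetical.

```python
from dataclasses import dataclass

# Hypothetical action names mapped to (row, column) offsets.
ACTIONS = {
    "up": (-1, 0),
    "down": (1, 0),
    "left": (0, -1),
    "right": (0, 1),
}


@dataclass
class Gridworld:
    """A tiny deterministic Gridworld; all sizes and positions are illustrative."""
    rows: int = 4
    cols: int = 4
    start: tuple = (0, 0)
    goal: tuple = (3, 3)
    obstacles: frozenset = frozenset({(1, 1), (2, 2)})

    def states(self):
        """Every square that is not an obstacle is a state."""
        return [(r, c) for r in range(self.rows) for c in range(self.cols)
                if (r, c) not in self.obstacles]

    def step(self, state, action):
        """Apply one action and return (next_state, reward, done)."""
        if state == self.goal:
            return state, 0.0, True  # the goal is terminal
        dr, dc = ACTIONS[action]
        nr, nc = state[0] + dr, state[1] + dc
        off_grid = not (0 <= nr < self.rows and 0 <= nc < self.cols)
        if off_grid or (nr, nc) in self.obstacles:
            return state, -5.0, False  # bumped: stay put and pay a penalty
        if (nr, nc) == self.goal:
            return (nr, nc), 10.0, True  # reached the goal
        return (nr, nc), -1.0, False  # an ordinary move costs a little


env = Gridworld()
state, total, done = env.start, 0.0, False
# A hand-written route that goes around both obstacles.
for action in ["right", "right", "right", "down", "down", "down"]:
    state, reward, done = env.step(state, action)
    total += reward
print(state, total, done)  # (3, 3) 5.0 True
```

The `step` method captures the MDP ingredients: the current state and the chosen action alone determine the next state and the reward.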

📚 Reinforcement Learning Concepts in Gridworld

There are several key concepts in reinforcement learning that are illustrated by the Gridworld problem:

State

In Gridworld, the state is the current location of the agent on the grid.

Action

The agent can choose to move up, down, left, or right. Each of these movements is an action.

Reward

The agent receives a reward after every action. In Gridworld, the agent might receive a positive reward for reaching the goal, a smaller positive reward for each move that brings it closer to the goal, and a negative reward for hitting an obstacle or moving away from the goal.
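The reward scheme described above can be written as a small function. This sketch assumes a four-connected grid, measures "closer to the goal" with Manhattan distance (which ignores obstacles), and uses made-up magnitudes (+10, +0.1, -1); the function names and defaults are hypothetical.

```python
def manhattan(a, b):
    """Number of grid steps between two (row, col) squares, ignoring obstacles."""
    return abs(a[0] - b[0]) + abs(a[1] - b[1])


def shaped_reward(state, next_state, goal, obstacles,
                  goal_reward=10.0, closer_bonus=0.1, penalty=-1.0):
    """Reward for trying to move from `state` into `next_state`.

    All magnitudes are hypothetical defaults, not standard values.
    """
    if next_state in obstacles:
        return penalty       # hit an obstacle
    if next_state == goal:
        return goal_reward   # reached the goal
    if manhattan(next_state, goal) < manhattan(state, goal):
        return closer_bonus  # moved closer to the goal
    return penalty           # moved away from the goal (or stayed put)


goal = (3, 3)
obstacles = {(1, 1)}
print(shaped_reward((0, 0), (0, 1), goal, obstacles))  # 0.1
print(shaped_reward((0, 1), (0, 0), goal, obstacles))  # -1.0
print(shaped_reward((0, 1), (1, 1), goal, obstacles))  # -1.0
print(shaped_reward((3, 2), (3, 3), goal, obstacles))  # 10.0
```

Because Manhattan distance ignores obstacles, this bonus can reward a step toward a dead end; using true shortest-path distances would avoid that at the cost of extra computation.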

Policy

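A policy is the strategy the agent follows to decide which action to take in each state. It can be deterministic (always the same action in a given state) or stochastic (a probability for each possible action). In other words, it’s the agent’s plan of action.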
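A policy can be as simple as a lookup table. This minimal sketch shows a deterministic policy as a dictionary from squares to moves (a hypothetical plan for a 2×2 grid with the goal at (1, 1)) and a stochastic policy, the uniform random one, as a probability for each move. All names here are illustrative.

```python
import random

ACTIONS = ["up", "down", "left", "right"]

# Deterministic policy: one fixed action per state.
# Hypothetical hand-written plan for a 2x2 grid whose goal is (1, 1).
deterministic_policy = {
    (0, 0): "right",
    (0, 1): "down",
    (1, 0): "right",
}


def act_deterministic(state):
    return deterministic_policy[state]


def random_policy_probs(state):
    """Stochastic policy: uniform probabilities that ignore the state entirely."""
    return {a: 1.0 / len(ACTIONS) for a in ACTIONS}


def act_stochastic(state, rng):
    """Sample an action according to the policy's probabilities."""
    probs = random_policy_probs(state)
    actions = list(probs)
    return rng.choices(actions, weights=[probs[a] for a in actions])[0]


rng = random.Random(0)
print(act_deterministic((0, 0)))   # right
print(act_stochastic((0, 0), rng))  # a randomly sampled move
```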

Value Function

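The value function measures how good a particular state (or a particular action in a state) is for the agent, in terms of the expected total future reward it can collect from there. The agent uses these values to choose its actions, favoring moves that lead to higher-valued states.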
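To see what "expected future rewards" means numerically, this sketch computes the discounted return of a reward sequence (each later reward is shrunk by a discount factor γ) and then estimates a state’s value by averaging returns over many random episodes, a Monte Carlo estimate. The one-dimensional corridor of squares 0 to 4 with the goal at square 4, the -1 cost per move, the +10 goal reward, γ = 0.9, and the random policy are all hypothetical choices.

```python
import random


def discounted_return(rewards, gamma):
    """G = r0 + gamma*r1 + gamma^2*r2 + ..., accumulated back to front."""
    g = 0.0
    for r in reversed(rewards):
        g = r + gamma * g
    return g


def run_episode(start, goal, rng, max_steps=100):
    """Random walk along a hypothetical corridor 0..goal.

    Each move costs -1; reaching the goal pays +10 and ends the episode.
    Moving left from square 0 bumps the wall and stays put.
    """
    pos, rewards = start, []
    for _ in range(max_steps):
        pos = max(0, pos + rng.choice((-1, 1)))
        if pos == goal:
            rewards.append(10.0)
            break
        rewards.append(-1.0)
    return rewards


def mc_value(start, goal=4, gamma=0.9, episodes=2000, seed=0):
    """Estimate V(start) under the random policy as the average return."""
    rng = random.Random(seed)
    total = sum(discounted_return(run_episode(start, goal, rng), gamma)
                for _ in range(episodes))
    return total / episodes


print(round(discounted_return([-1.0, -1.0, 10.0], 0.9), 6))  # 6.2
for s in range(4):
    print(s, round(mc_value(s), 2))
```

The estimated values should rise as the starting square gets closer to the goal, which is exactly the signal the agent needs to prefer some moves over others.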

🤖 Training the Agent: The Bellman Equation

Now that we understand the Gridworld problem and the key concepts of RL, let’s see how the agent learns to navigate the grid. When the layout of the grid and its rewards are known, a classic approach is value iteration, which is based on the Bellman Equation. 🧠 Think of the Bellman Equation as a recursive formula that calculates the value of a state from the values of its neighboring states. It’s named after Richard Bellman, a brilliant mathematician who had a knack for breaking complex problems into simpler, overlapping subproblems. In the context of Gridworld, the Bellman Equation adds the immediate reward to the discounted value of the best neighboring state to calculate the value of the current state. By repeatedly applying this update to every state until the values stop changing, the agent arrives at the best policy: from any square, move toward the neighbor with the highest value.
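Below is a minimal Python sketch of value iteration on a 4×4 grid. It assumes deterministic moves, a reward of +10 on the goal square and -1 on every other square (hypothetical values), and that bumping into a wall or obstacle leaves the agent where it is. Each sweep replaces every non-goal square’s value with its reward plus γ times the best neighboring value; the goal is treated as terminal, so its value is just its reward. The helper names are hypothetical.

```python
MOVES = {"up": (-1, 0), "down": (1, 0), "left": (0, -1), "right": (0, 1)}


def neighbor(state, move, rows, cols, obstacles):
    """Square reached by one move; walls and obstacles leave the agent in place."""
    dr, dc = MOVES[move]
    r, c = state[0] + dr, state[1] + dc
    if 0 <= r < rows and 0 <= c < cols and (r, c) not in obstacles:
        return (r, c)
    return state


def value_iteration(rows, cols, goal, obstacles, gamma=0.9, tol=1e-9):
    """Sweep V(s) = R(s) + gamma * max V(s') until no value changes by tol or more."""
    states = [(r, c) for r in range(rows) for c in range(cols)
              if (r, c) not in obstacles]
    # Hypothetical rewards: +10 on the goal square, -1 everywhere else.
    R = {s: (10.0 if s == goal else -1.0) for s in states}
    V = {s: 0.0 for s in states}
    while True:
        delta = 0.0
        for s in states:
            if s == goal:
                new_v = R[s]  # terminal square: no future reward
            else:
                best = max(V[neighbor(s, m, rows, cols, obstacles)] for m in MOVES)
                new_v = R[s] + gamma * best
            delta = max(delta, abs(new_v - V[s]))
            V[s] = new_v  # updated in place, so later squares see new values
        if delta < tol:
            return V


def greedy_policy(V, rows, cols, obstacles):
    """In each square, choose the move whose destination has the highest value."""
    return {s: max(MOVES, key=lambda m: V[neighbor(s, m, rows, cols, obstacles)])
            for s in V}


obstacles = {(1, 1), (2, 2)}
V = value_iteration(4, 4, goal=(3, 3), obstacles=obstacles)
policy = greedy_policy(V, 4, 4, obstacles)
print(round(V[(0, 0)], 4))             # 0.6288
print(policy[(3, 2)], policy[(2, 3)])  # right down
```

Updating values in place (rather than keeping a separate copy per sweep) is a common variant that still converges for γ < 1 and often needs fewer sweeps.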

Here’s the Bellman Equation in its simplest form, assuming each move deterministically lands the agent on a single neighboring square:

V(s) = R(s) + γ * max[V(s')]

where:

- V(s) is the value of the current state s.
- R(s) is the immediate reward received on arriving in the current state.
- γ (usually between 0 and 1) is the discount factor, which sets how much weight future rewards get compared with immediate ones.
- max[V(s')] is the highest value among the neighboring states s' the agent can move to from s.
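Plugging numbers into the equation makes the role of each term visible. In this sketch the reward of -1, the neighbor values 5, 10, and 2, and the three γ settings are hypothetical; the function simply evaluates the right-hand side once.

```python
def bellman_backup(reward, gamma, neighbor_values):
    """Evaluate V(s) = R(s) + gamma * max[V(s')] once."""
    return reward + gamma * max(neighbor_values)


neighbors = [5.0, 10.0, 2.0]  # hypothetical values of the reachable states
print(bellman_backup(-1.0, 0.9, neighbors))  # 8.0
print(bellman_backup(-1.0, 0.5, neighbors))  # 4.0
print(bellman_backup(-1.0, 0.0, neighbors))  # -1.0
```

With γ = 0 the agent cares only about the immediate reward; as γ approaches 1, the best neighbor’s value counts for more.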

🧪 Experimenting with Different Policies

One of the best things about Gridworld is that it lets us experiment with different policies and see how they affect the agent’s performance. For instance, we could start with a random policy, where the agent chooses its actions at random. This is like wandering aimlessly through a maze, hoping to stumble upon the exit. As you can guess, it isn’t a very efficient way to solve the problem. A better approach might be a greedy policy, where the agent always chooses the action that brings the most immediate reward. 🔍 Interestingly, this is like always taking the path that looks most promising right now, without considering the longer-term consequences. In practice, the best approach is often a balance between exploration (trying out new actions) and exploitation (sticking with the actions that have worked well in the past). A popular example is the ε-greedy policy, where the agent usually takes the action it thinks will bring the highest reward but, with a small probability ε, tries a random action instead.
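Here is a minimal sketch of ε-greedy action selection in Python. The action-value estimates in `q` are hypothetical numbers standing in for whatever the agent has learned so far. With `epsilon=0` the function always exploits those estimates, and with `epsilon=1` it behaves like the random policy.

```python
import random


def epsilon_greedy(q_values, epsilon, rng):
    """Pick an action from a dict of estimated action values.

    With probability epsilon, explore (uniformly random action);
    otherwise exploit (action with the highest current estimate).
    """
    actions = list(q_values)
    if rng.random() < epsilon:
        return rng.choice(actions)
    return max(actions, key=q_values.get)


# Hypothetical value estimates for the four moves in one state.
q = {"up": 0.2, "down": 1.5, "left": -0.3, "right": 0.9}
rng = random.Random(42)
picks = [epsilon_greedy(q, 0.1, rng) for _ in range(1000)]
print(picks.count("down") / len(picks))
```

With `epsilon=0.1`, the best-looking move ("down") should be chosen roughly 92.5% of the time: 90% from exploitation plus a quarter of the 10% spent exploring, since a random pick can also land on "down".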

🧭 Conclusion

And there you have it: a journey through the fascinating world of reinforcement learning, guided by the Gridworld problem. We’ve covered the basics of RL, delved into the nitty-gritty of the Gridworld problem, learned about the Bellman Equation, and compared different policies. Just like in our Gridworld, the field of reinforcement learning is vast, with many paths to explore. But don’t worry: even if you feel like you’re lost in a labyrinth now, remember that every great adventurer starts somewhere. With perseverance, curiosity, and a bit of guidance from problems like Gridworld, you’re well on your way to mastering the art of reinforcement learning.

So keep exploring, keep learning, and remember—the only way out is through!

🚀 Curious about the future? Stick around for more discoveries ahead!

🔗 Related Articles

- Introduction to Supervised Learning: What Is Supervised Learning? Difference Between Supervised and Unsupervised Learning, Types (Classification vs. Regression), and Real-World Examples

- Difference Between Supervised, Unsupervised, and Reinforcement Learning

- Exploration vs Exploitation in Reinforcement Learning Agents