📌 Let’s explore the topic in depth and see what insights we can uncover.

⚡ “Can a machine learn something new without any task-specific training? Dive into the cutting-edge world of zero-shot, one-shot, and few-shot prompting, where artificial intelligence is pushed to its limits.”

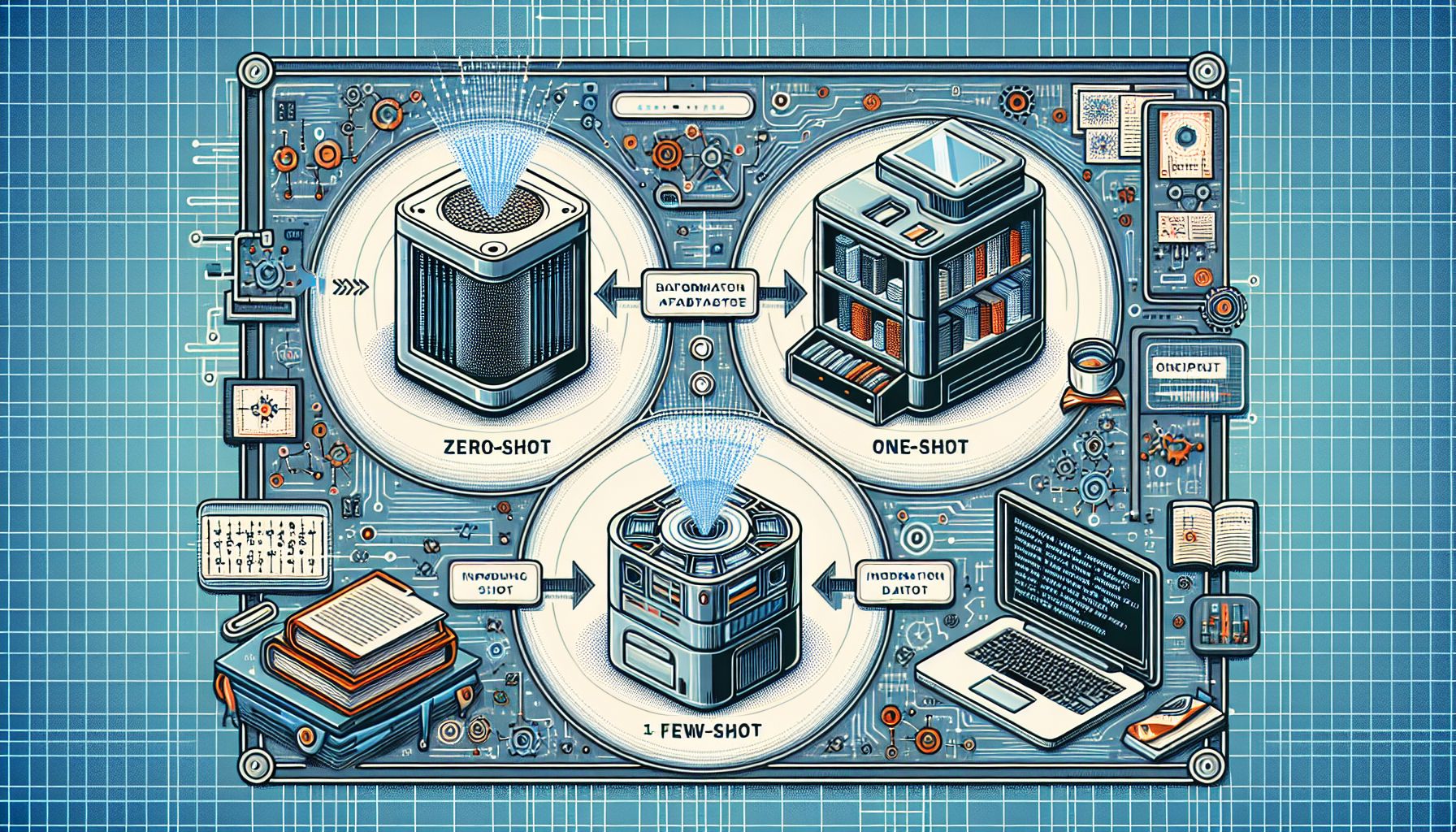

Ever marvelled at the sheer wizardry of an AI model that generates human-like text, or recognizes a face after seeing just one or two examples? Welcome to the magical world of zero-shot, one-shot, and few-shot learning! 🧙‍♂️ These techniques have changed how AI models are applied to new tasks, making them more efficient and adaptable. Instead of needing thousands, or even millions, of labeled examples for each task, these models can perform a task from a handful of examples, or from none at all. Sounds like a fairy tale? Let’s dive in and explore this captivating realm of machine learning.

🎯 Zero-Shot Learning: The Clairvoyant Conjurer

"Venturing into the World of Prompting Techniques"

Imagine a wizard who can handle a task they were never taught. This marvellous feat is what zero-shot learning models achieve. In zero-shot learning, an AI model is asked to solve a task without being shown a single solved example of it: no demonstrations in the prompt and no task-specific training data. For that particular task, the model starts out as clueless as a muggle at a wizard’s convention. Yet, with the right prompting, magic happens.

The model makes its predictions from the instructions in the prompt and the general knowledge it acquired during pre-training on a large amount of data, which for natural language processing (NLP) models is usually a vast corpus of text. For instance, an NLP model like GPT-3 can write an essay on “The Impacts of Climate Change” even though it was never explicitly trained to write climate change essays. It does this by drawing on the knowledge of language and the world it picked up during pre-training.

🔮 Tip: While zero-shot learning can feel magical, it’s not always the best choice. Because the model has no task-specific examples to anchor it, it can veer off-topic, ignore the requested output format, or make incorrect assumptions about what you want.
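To make the idea concrete, here is a minimal Python sketch of a zero-shot prompt for sentiment classification. It assumes a text-generation model that you call separately (that call is deliberately left out); the label set, the review text, and the helper names `zero_shot_prompt` and `parse_label` are hypothetical. The prompt holds only a task description and the input, with no solved examples, and the parser maps the reply back onto an allowed label, returning `None` when the answer drifts off-task.

```python
from typing import Optional

# Hypothetical label set for a sentiment task.
LABELS = ["positive", "negative", "neutral"]


def zero_shot_prompt(text: str, labels: list[str]) -> str:
    """Instruction-only prompt: a task description plus the input, no examples."""
    options = ", ".join(labels)
    return (
        "Classify the sentiment of the review below.\n"
        f"Answer with exactly one word from this list: {options}.\n\n"
        f"Review: {text}\n"
        "Sentiment:"
    )


def parse_label(reply: str, labels: list[str]) -> Optional[str]:
    """Map a free-text model reply onto an allowed label.

    Returns None when the reply does not start with any allowed label,
    a simple way to catch a zero-shot answer that has drifted off-task.
    """
    cleaned = reply.strip().lower()
    for label in labels:
        if cleaned.startswith(label):
            return label
    return None


prompt = zero_shot_prompt("The battery died after two days.", LABELS)
print(prompt)
print(parse_label(" Negative.", LABELS))          # negative
print(parse_label("I think it's mixed", LABELS))  # None
```

Constraining the answer to a fixed list and validating the reply is a cheap guard against off-topic output; it does not make the prediction itself any more accurate.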

🎈 One-Shot Learning: The Single Arrow Archer

Next in line is one-shot learning, the archer who hits the bullseye with just a single arrow. In one-shot learning, the AI model is given a single example to learn from, and from that one example it has to generalize and figure out how to perform the task. A classic use case is face recognition: given a single photo of a person, the model should be able to recognize that same face in other photos. It’s a bit like recognizing your friend in a crowd after seeing their photo just once.

One-shot learning is like a quick-draw contest in the Wild West of AI: the model has to learn fast and accurately from minimal data. 🔍 That makes it quite a challenge, because the model runs a high risk of overfitting, becoming too specialized to the single example and failing to generalize to new ones.

🎯 Tip: To improve one-shot learning performance, use techniques like data augmentation (e.g., rotating or cropping the image) to create variations of the single example. This helps the model generalize better.
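The augmentation tip can be sketched with NumPy. This is a minimal example, assuming the single example is a square image stored as an `H x W x C` array; the function name `augment`, the 4-pixel crop, and the extra horizontal mirror are illustrative choices, not a prescribed recipe. Every variant keeps the original shape, so the whole set can be stacked into one training batch.

```python
import numpy as np


def augment(image: np.ndarray, crop: int = 4) -> list[np.ndarray]:
    """Return simple variations of one square H x W x C image.

    Rotations and the mirror keep every pixel. Each shifted crop drops a
    `crop`-pixel border on two sides and is padded back to full size by
    repeating edge pixels, so all variants share the original shape.
    """
    variants = [image]
    # 90, 180 and 270 degree rotations (shape-preserving only for square images).
    variants += [np.rot90(image, k) for k in (1, 2, 3)]
    # Horizontal mirror.
    variants.append(np.fliplr(image))
    h, w = image.shape[:2]
    no_pad = [(0, 0)] * (image.ndim - 2)  # leave channel axes untouched
    # Crop away the top-left border, then pad at the bottom-right.
    shifted = image[crop:, crop:]
    variants.append(np.pad(shifted, [(0, crop), (0, crop)] + no_pad, mode="edge"))
    # Crop away the bottom-right border, then pad at the top-left.
    shifted = image[: h - crop, : w - crop]
    variants.append(np.pad(shifted, [(crop, 0), (crop, 0)] + no_pad, mode="edge"))
    return variants


# A random array stands in for the single face photo.
face = np.random.default_rng(0).random((64, 64, 3))
batch = np.stack(augment(face))
print(batch.shape)  # (7, 64, 64, 3)
```

For faces, small-angle rotations (for example with `scipy.ndimage.rotate`) are usually more realistic than the 90-degree turns used here to keep the sketch dependency-free.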

🎱 Few-Shot Learning: The Miniature Maestro

Finally, we have few-shot learning, the maestro who can conduct an orchestra after just a few rehearsals. In few-shot learning, the AI model is given a few examples to learn from, typically fewer than ten per class or task. Few-shot learning strikes a balance between one-shot learning and conventional training on large labeled datasets: it gives the model just enough examples to understand the task, without requiring the large collection of labeled data that conventional training needs. For instance, a few-shot learning model can learn to classify different breeds of dogs after being shown just a few images of each breed. The model picks out the distinguishing features of each breed from these few examples and uses this knowledge to classify unseen images.

🎲 Tip: Few-shot learning often benefits from transfer learning, where a model pre-trained on a large dataset is fine-tuned with a few examples. This approach leverages the broad knowledge of the pre-trained model while adapting it to the specific task.
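One simple way to classify from a few examples is a nearest-prototype (nearest-class-mean) rule, the idea behind prototypical networks. Note that this is a swapped-in technique, not the fine-tuning the tip describes. The sketch assumes each image has already been turned into an embedding vector by a frozen pre-trained model (not shown), so the pre-trained model supplies the transferred knowledge and only the per-breed averages come from the few examples. The 2-D vectors and breed names are made-up toy data.

```python
import numpy as np


def fit_prototypes(support: dict[str, np.ndarray]) -> dict[str, np.ndarray]:
    """Average each class's few support embeddings into one prototype vector."""
    return {label: vecs.mean(axis=0) for label, vecs in support.items()}


def classify(query: np.ndarray, prototypes: dict[str, np.ndarray]) -> str:
    """Return the class whose prototype is nearest to the query (Euclidean)."""
    return min(prototypes, key=lambda label: np.linalg.norm(query - prototypes[label]))


# Toy 2-D "embeddings": three examples per breed. Real embeddings would come
# from a pre-trained image model and typically have hundreds of dimensions.
support = {
    "beagle": np.array([[0.9, 0.1], [1.0, 0.2], [0.8, 0.0]]),
    "husky": np.array([[0.1, 0.9], [0.0, 1.0], [0.2, 0.8]]),
}
protos = fit_prototypes(support)
print(classify(np.array([0.85, 0.15]), protos))  # beagle
```

With this design, adding a new breed only takes a few more support embeddings; nothing in the pre-trained model is retrained.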

💡 Prompting: The Secret Sauce

An essential ingredient in all these techniques is prompting. A prompt is a cue or instruction that guides the model in performing the task. In the context of NLP models, a prompt can be an introductory sentence or a question. For zero-shot learning, the prompt is often a detailed description of what the task involves. For one-shot and few-shot learning, the prompt can also include one or more worked examples of the task. When examples are supplied this way, they live only in the prompt: the model’s weights are not updated. Crafting effective prompts is an art 🎨. A good prompt can significantly boost the model’s performance, while a poor one can lead it astray. Here are some tips for effective prompting:

- **Be clear and explicit.** The model should understand what’s expected without any ambiguity.
- **Use examples.** Especially for one-shot and few-shot learning, including examples in the prompt can guide the model.
- **Experiment.** Try different prompts and see what works best. Like a chef fine-tuning a recipe, prompt crafting often involves a bit of trial and error.
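The prompting tips can be combined in a small Python sketch. `build_prompt` writes one clear instruction, then `k` worked examples in a consistent layout, then the new input, so the same function yields a zero-shot (`k=0`), one-shot (`k=1`), or few-shot (`k>1`) prompt. `accuracy` supports the "experiment" tip by scoring one prompt variant on a small labeled set. The example reviews are made up, and `generate` is a hypothetical stand-in for whatever model call you actually use.

```python
from typing import Callable, Sequence, Tuple

Example = Tuple[str, str]  # (review text, sentiment label)

# Hypothetical demonstrations, used only for illustration.
EXAMPLES: list[Example] = [
    ("I loved every minute of it.", "positive"),
    ("The plot made no sense.", "negative"),
    ("It was fine, nothing special.", "neutral"),
]


def build_prompt(instruction: str, examples: Sequence[Example], query: str, k: int) -> str:
    """Zero-shot when k == 0, one-shot when k == 1, few-shot when k > 1."""
    lines = [instruction, ""]
    for text, label in examples[:k]:
        # Every demonstration uses the same "Review / Sentiment" layout.
        lines += [f"Review: {text}", f"Sentiment: {label}", ""]
    lines += [f"Review: {query}", "Sentiment:"]
    return "\n".join(lines)


def accuracy(
    generate: Callable[[str], str],
    instruction: str,
    examples: Sequence[Example],
    dev_set: Sequence[Example],
    k: int,
) -> float:
    """Fraction of dev_set items whose reply starts with the gold label.

    `generate` is a hypothetical callable: prompt in, model reply out.
    """
    hits = 0
    for text, gold in dev_set:
        reply = generate(build_prompt(instruction, examples, text, k))
        hits += int(reply.strip().lower().startswith(gold))
    return hits / len(dev_set)


instruction = "Classify the sentiment of each review as positive, negative, or neutral."
print(build_prompt(instruction, EXAMPLES, "Great soundtrack, weak ending.", k=1))

# Comparing variants on your own labeled data (my_model_call and dev_set are
# hypothetical placeholders):
# for k in (0, 1, 3):
#     print(k, accuracy(my_model_call, instruction, EXAMPLES, dev_set, k))
```

Keeping the demonstrations in exactly the same layout as the final query makes it easier for the model to infer the expected answer format, and scoring every variant on the same labeled set turns trial and error into a fair comparison.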

🧭 Conclusion

The realm of zero-shot, one-shot, and few-shot learning is an exciting frontier in AI. These techniques enable models to learn efficiently with minimal examples, bringing us closer to the dream of machines that can learn like humans. Remember, these are not one-size-fits-all solutions. The choice between zero-shot, one-shot, and few-shot learning depends on the task, the available data, and the specific requirements. And the secret ingredient, the prompt, can make a world of difference, so craft it with care. So, wizards and witches of AI, are you ready to cast your spells with the power of few? The magic awaits! 🧙‍♀️🧙‍♂️

🤖 Stay tuned as we decode the future of innovation!