
⚡ “Did you know that translating an entire document into another language can come down to a carefully coordinated dance between two parts of a neural network, an encoder and a decoder? Let’s dive into the fascinating details of the architecture that powers many language models!”

Are you curious about how Google Translate works? Or how Siri, Alexa, and other voice assistants make sense of your spoken requests? If so, you’re in the right place! A key ingredient behind many of these features is a neural network design known as the Encoder-Decoder architecture, a fundamental building block of many language models used in Natural Language Processing (NLP). In this blog post, we’ll take a deep dive into what the Encoder-Decoder architecture is, how it works, where it’s used, and finally, its limitations and how researchers have addressed them. Oh, and don’t worry if you’re not a tech geek. We promise to keep things as simple and fun as possible.

So, put on your thinking caps, grab a cup of coffee ☕️, and let’s get started!

"Unraveling the DNA of Language Models"

🧬 The Basics: What is Encoder-Decoder Architecture?

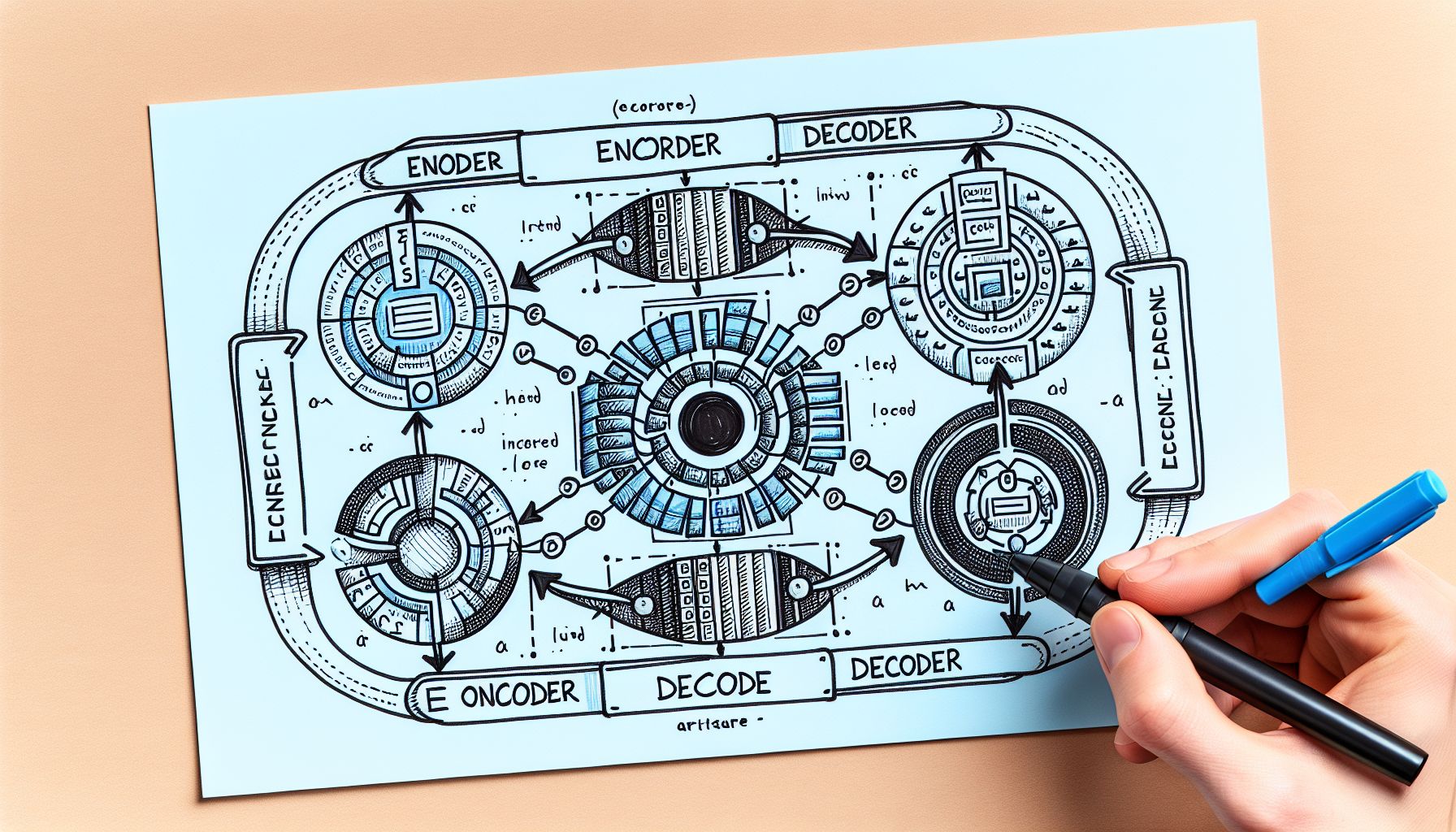

Imagine you’re an interpreter at a United Nations meeting. You have to listen to a speaker, understand their message, and then express it in another language. The Encoder-Decoder architecture does something similar: in NLP, it is a neural network design for converting a sequence from one domain (like a sentence in English) into a sequence in another domain (like the same sentence translated into French). The input and output sequences don’t need to have the same length.

This architecture is composed of two main parts:

- Encoder: This part of the model reads and interprets the input data. It’s like the part of your brain that listens to the speaker at the UN meeting and understands what they’re saying. The encoder processes the input sequence and compresses it into a fixed-size context vector, also known as a thought vector. This vector is a dense numerical representation of the input that carries the essential information the decoder needs.

- Decoder: The decoder is the part of the model that generates the output data. It’s like the part of your brain that translates the speaker’s message into another language. The decoder takes the context vector produced by the encoder, processes it, and generates the output sequence.
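Put more formally, the classic sequence-to-sequence formulation can be written as follows. Here $x_1, \dots, x_n$ are the input words, $y_1, \dots, y_m$ the output words, and $c$ the context vector; the notation is ours, chosen for illustration. The decoder factors the probability of the whole output into one prediction per word:

```latex
c = \mathrm{Encoder}(x_1, \dots, x_n), \qquad
p(y_1, \dots, y_m \mid x_1, \dots, x_n) = \prod_{t=1}^{m} p(y_t \mid y_1, \dots, y_{t-1}, c)
```

Each factor corresponds to one decoder step: the probability of the next word given the words generated so far and the context vector.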

🎯 How Does It Work: The Inner Workings of Encoder-Decoder Architecture

Now that we’ve covered the basics, let’s delve a little deeper into how the Encoder-Decoder model actually works.

Encoding the Inputs

Let’s say our task is to translate an English sentence into French. The encoder reads the English sentence one word at a time. Each word is first represented as a vector using a technique called word embedding. At each step, the encoder takes the current word’s vector and the hidden state from the previous step as inputs and produces a new hidden state, which summarizes everything read so far. In the classic setup, the final hidden state after the last word serves as the context vector that is handed to the decoder.
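To make the encoding loop concrete, here is a minimal sketch in plain Python of a vanilla (Elman) RNN encoder. The three-word vocabulary, the 2-dimensional embeddings, and all weight values are made-up assumptions for illustration; a trained model learns these numbers, uses far larger vectors, and usually swaps this plain `tanh` update for an LSTM or GRU cell.

```python
import math

# Hypothetical toy embeddings: each word maps to a 2-dimensional vector.
EMBEDDINGS = {
    "the": [0.1, 0.3],
    "cat": [0.7, -0.2],
    "sleeps": [-0.4, 0.5],
}

# Hypothetical RNN weights (a trained model would learn these).
W_XH = [[0.5, -0.1], [0.2, 0.4]]   # input -> hidden
W_HH = [[0.3, 0.0], [-0.2, 0.6]]   # hidden -> hidden
B_H = [0.0, 0.1]                   # hidden bias


def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(m * v for m, v in zip(row, vector)) for row in matrix]


def rnn_step(x, h_prev):
    """One vanilla RNN step: h_t = tanh(W_xh x_t + W_hh h_{t-1} + b)."""
    from_input = matvec(W_XH, x)
    from_hidden = matvec(W_HH, h_prev)
    return [math.tanh(i + h + b) for i, h, b in zip(from_input, from_hidden, B_H)]


def encode(words):
    """Read words one at a time; return all hidden states and the context vector."""
    h = [0.0, 0.0]                 # initial hidden state
    states = []
    for word in words:
        x = EMBEDDINGS[word]       # word embedding lookup
        h = rnn_step(x, h)         # new state from current word + previous state
        states.append(h)
    return states, h               # final hidden state doubles as the context vector


states, context = encode(["the", "cat", "sleeps"])
print(len(states), context)
```

Keeping every intermediate hidden state in `states` costs nothing here, and it is exactly what attention mechanisms later make use of.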

Decoding to Outputs

Once the encoder has processed the entire input sequence, decoding begins. The decoder also works step by step, but its task is to generate the output sequence. In the classic setup, the context vector initializes the decoder’s hidden state (some variants also feed it in at every step), and the first input is a special start-of-sequence symbol. At each step, the decoder takes its current hidden state and the previously generated word as inputs and produces a new hidden state. This hidden state is used to compute a probability distribution over the possible next words. In the simplest scheme, greedy decoding, the word with the highest probability is selected and appended to the output sequence; beam search, which keeps several candidate sequences in play, is also widely used. This process continues until the decoder generates an end-of-sequence symbol or the output reaches a maximum length.
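The greedy decoding loop can be sketched as follows. Everything here is hypothetical: `TOY_SCORES` stands in for a trained decoder and, to keep the example short, its scores depend only on the previous word, so the hidden state is simply carried along. The loop structure (start symbol, pick the most probable word, stop at the end symbol or a length limit) follows the procedure described above.

```python
import math

# Hypothetical stand-in for a trained decoder: unnormalized scores for the
# next word, keyed by the previous word. "<sos>"/"<eos>" mark start/end.
TOY_SCORES = {
    "<sos>": {"le": 2.0, "chat": 0.5, "dort": 0.1, "<eos>": -1.0},
    "le":    {"le": -1.0, "chat": 2.5, "dort": 0.3, "<eos>": 0.0},
    "chat":  {"le": 0.0, "chat": -1.0, "dort": 2.2, "<eos>": 0.5},
    "dort":  {"le": -0.5, "chat": -0.5, "dort": -1.0, "<eos>": 3.0},
}


def softmax(scores):
    """Turn a dict of scores into a probability distribution."""
    top = max(scores.values())
    exps = {w: math.exp(s - top) for w, s in scores.items()}
    total = sum(exps.values())
    return {w: e / total for w, e in exps.items()}


def decoder_step(h, prev_word):
    """Toy step: a real decoder would update h with an RNN cell; here h is passed through."""
    return h, softmax(TOY_SCORES[prev_word])


def greedy_decode(context, max_len=10):
    h = context                    # context vector initializes the decoder state
    prev = "<sos>"                 # first input is the start-of-sequence symbol
    output = []
    for _ in range(max_len):       # stop at a maximum length...
        h, probs = decoder_step(h, prev)
        word = max(probs, key=probs.get)   # greedy: most probable next word
        if word == "<eos>":        # ...or when the end symbol is produced
            break
        output.append(word)
        prev = word                # feed the generated word back in
    return output


print(greedy_decode([0.2, -0.1]))  # ['le', 'chat', 'dort']
```

Replacing the single `max(...)` choice with beam search would mean keeping the top few partial sequences at each step instead of just one.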

🛠️ Behind the Scenes: LSTM & GRU Cells

Under the hood, classic Encoder-Decoder models are built from a special type of neural network called Recurrent Neural Networks (RNNs). These networks are well suited to sequences because they have a kind of memory: they carry information from previous steps forward and use it in the current step. However, standard RNNs have a major problem: they struggle to retain information over long sequences. A major cause is the vanishing gradient problem: during training, the error signal shrinks as it is propagated back through many time steps, so the network learns little about long-range dependencies. To overcome this issue, Encoder-Decoder models typically use more advanced recurrent units: Long Short-Term Memory (LSTM) and Gated Recurrent Unit (GRU) cells. These cells use gates and internal states that let them remember and forget information in a controlled way.
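A GRU’s gating can be sketched in a few lines. This minimal example uses scalars instead of vectors and made-up weights (the `P` dictionary is a hypothetical assumption), and follows one common formulation of the GRU equations; deep learning libraries differ slightly in exactly where the reset gate is applied.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


# Hypothetical scalar weights; real GRUs use weight matrices and vector states.
P = {
    "Wz": 0.8, "Uz": 0.5, "bz": 0.0,   # update gate
    "Wr": 0.6, "Ur": 0.4, "br": 0.0,   # reset gate
    "Wn": 1.2, "Un": 0.9, "bn": 0.0,   # candidate state
}


def gru_step(x, h, p=P):
    """One GRU step with scalar input x and scalar hidden state h."""
    z = sigmoid(p["Wz"] * x + p["Uz"] * h + p["bz"])   # update gate: how much old state to keep
    r = sigmoid(p["Wr"] * x + p["Ur"] * h + p["br"])   # reset gate: how much old state feeds the candidate
    n = math.tanh(p["Wn"] * x + p["Un"] * (r * h) + p["bn"])  # candidate new state
    return z * h + (1.0 - z) * n                       # blend old state and candidate


h = 0.0
for x in [1.0, 0.5, -0.3]:        # a short input sequence
    h = gru_step(x, h)
print(h)
```

When the update gate `z` is close to 1, the cell copies its previous state almost unchanged. That near-identity path is what lets information, and gradients during training, survive across many steps.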

🚀 Applications: Where is Encoder-Decoder Architecture Used?

The Encoder-Decoder architecture is used in a variety of NLP tasks, including:

- Machine Translation: Probably the most famous application. Neural machine translation services like Google Translate use Encoder-Decoder models to translate text from one language to another.

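- Speech Recognition: Encoder-Decoder models can convert spoken audio into text, with the encoder reading a sequence of audio features instead of words. Voice assistants like Siri and Alexa depend on speech recognition to understand user queries before responding to them.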

- Text Summarization: These models can be used to generate a short summary of a long text.

- Chatbots: Chatbots use Encoder-Decoder models to generate responses to user inputs.

🧩 Limitations and Future Directions

While the Encoder-Decoder architecture has proven incredibly useful, it has limitations. The most significant is that the classic model squeezes the meaning of the entire input sequence into a single fixed-size context vector. If the input is very long, some information inevitably gets lost in this bottleneck. To address this, researchers developed attention mechanisms, which let the decoder look back at all of the encoder’s hidden states and focus on different parts of the input at each decoding step, instead of relying on a single context vector. A further step was the Transformer. Transformers dispense with recurrence entirely and instead stack layers of attention (self-attention, computed with several heads in parallel) to capture the relationships between all words in the input sequence simultaneously.
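The core of an attention mechanism fits in a short function. This sketch uses simple dot-product scoring between the current decoder state and each encoder hidden state (one of several scoring functions in the literature), and the vectors are made-up values for illustration. The output is a fresh context vector for this decoding step, weighted toward the most relevant input positions.

```python
import math


def softmax(xs):
    top = max(xs)
    exps = [math.exp(x - top) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def attend(decoder_state, encoder_states):
    """Dot-product attention: weight each encoder state by its relevance to the decoder state."""
    scores = [dot(decoder_state, h) for h in encoder_states]  # one score per input position
    weights = softmax(scores)                                  # non-negative, sums to 1
    dim = len(encoder_states[0])
    # New context vector: weighted average of the encoder hidden states.
    context = [sum(w * h[i] for w, h in zip(weights, encoder_states)) for i in range(dim)]
    return weights, context


# Made-up hidden states for a three-word input sentence.
encoder_states = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
weights, context = attend([2.0, 0.0], encoder_states)
print([round(w, 3) for w in weights], context)
```

In a full model, `attend` runs once per decoding step, so each output word gets its own context vector. Transformers build on the same weighted-average idea but let every position attend to every other position (self-attention).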

🧭 Conclusion

The Encoder-Decoder architecture in language models has revolutionized the field of Natural Language Processing. It’s behind many of the language technologies we use and love today. Understanding this architecture will give you a solid foundation for diving deeper into the exciting world of NLP. While the Encoder-Decoder architecture is not without its limitations, the advancements in attention mechanisms and transformer models are pushing the boundaries and opening up new possibilities. As we continue to explore and innovate, who knows what the future holds?

So, keep exploring, keep learning, and most importantly, have fun along the way! 🚀

🌐 Thanks for reading — more tech trends coming soon!