June 08, 2025

⚡ “Dive into the thrilling world where software agents navigate complex environments in search of a treasure trove of rewards. It’s not sci-fi: these are the core concepts of AI learning, and they’re transforming the world!”

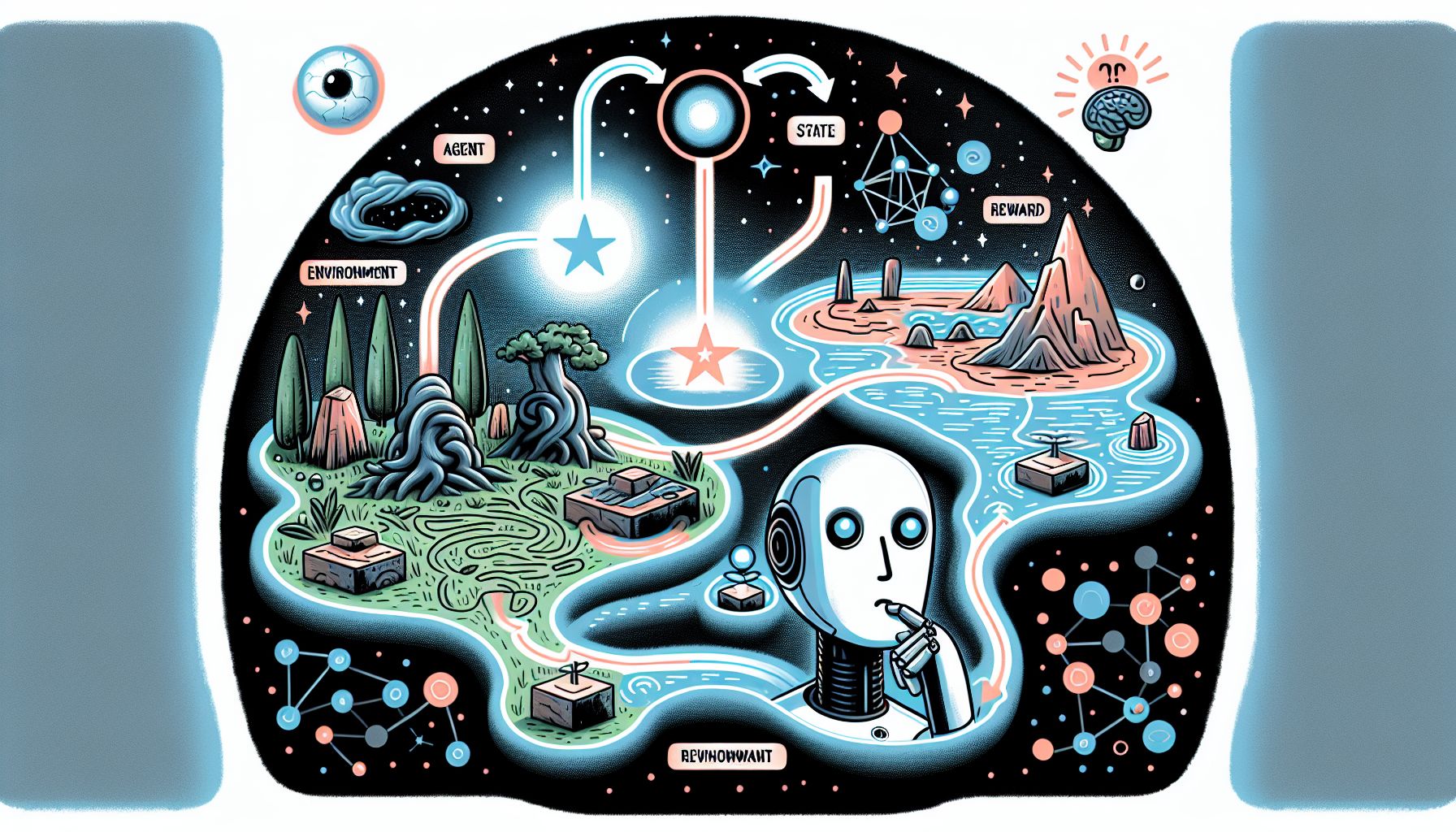

Hello fellow data enthusiasts! 🎉 Today, we’re going on an exciting journey into the fascinating world of reinforcement learning. We’ll be exploring its core concepts: Agent, Environment, Reward, State, and Action. Just as a child learns to walk through trial and error, reinforcement learning is all about learning by interacting with an environment. It’s a type of machine learning in which an agent learns how to behave in an environment by performing actions and observing the results. But before we get ahead of ourselves, let’s break down these terms one by one. Grab a cup of coffee ☕️, sit tight, and let’s dive in!

🕹️ Agent: The Learner and Decision Maker

"Unraveling the Puzzle of the AI Learning Process"

In the world of reinforcement learning, the agent is the learner and decision-maker. It’s like the character you control in a video game. The agent makes decisions by choosing actions. For example, in a chess game, the agent decides whether to move a pawn, a knight, or a bishop. The agent’s objective is to learn the best strategy for choosing actions: the one that earns it the most reward over time. This strategy, known as a policy, maps the current state of the environment to the action the agent should take. Imagine you’re playing a game of Super Mario. You (the agent) decide to move right, jump, or run based on what you see on the screen (the state of the environment). Your policy might be something like “if there’s a pit ahead, jump”.
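At its simplest, a policy is just a function from states to actions. The sketch below encodes the “if there’s a pit ahead, jump” rule in Python. The state keys (`pit_ahead`, `enemy_ahead`) and action names are hypothetical, chosen for illustration, and in reinforcement learning the policy would be learned from experience rather than hand-written like this.

```python
from typing import Dict

# Hypothetical state: a few boolean features describing what Mario "sees".
# A real game would expose far richer state (pixels, positions, velocities).
State = Dict[str, bool]


def mario_policy(state: State) -> str:
    """A hand-written policy: map the current state to an action."""
    if state.get("pit_ahead", False):
        return "jump"        # the rule from the text: pit ahead -> jump
    if state.get("enemy_ahead", False):
        return "jump"        # hop over enemies as well
    return "move_right"      # otherwise keep heading toward the flag


print(mario_policy({"pit_ahead": True}))                         # jump
print(mario_policy({"pit_ahead": False, "enemy_ahead": False}))  # move_right
```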

🌏 Environment: The World Around the Agent

The environment is everything that the agent interacts with: the world in which the agent operates. Going back to our video game analogy, the environment would be the entire game world, from the level layout to the enemies. The environment takes the agent’s current state and action as input and returns the agent’s reward and its next state as output. In essence, the environment defines the rules of the game. Let’s say in our Super Mario game, you decide to make Mario jump over a pit. The environment then processes this action. If Mario successfully clears the pit, the environment might return a reward (some points) and the next state (Mario safe on the other side of the pit).
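The environment’s role can be sketched as a plain function with exactly the input and output described here: it takes the current state and an action and returns the next state and a reward. The level is a hypothetical one-dimensional “Mario” corridor where the state is Mario’s cell index; `LEVEL_LENGTH`, `PIT`, and the reward values are made-up, and the extra `done` flag (signalling that the episode is over) is a common convention added here as an assumption.

```python
from typing import Tuple

LEVEL_LENGTH = 6   # hypothetical level: cells 0..5, flag at cell 5
PIT = 3            # hypothetical pit location


def step(state: int, action: str) -> Tuple[int, float, bool]:
    """Toy environment: take (state, action), return (next_state, reward, done)."""
    if action == "move_right":
        next_state = state + 1
    elif action == "jump":
        next_state = state + 2          # a jump clears the next cell, e.g. a pit
    else:
        raise ValueError(f"unknown action: {action!r}")

    if next_state == PIT:
        return next_state, -10.0, True          # fell into the pit
    if next_state >= LEVEL_LENGTH - 1:
        return LEVEL_LENGTH - 1, 10.0, True     # reached the flag
    return next_state, 0.0, False               # nothing special happened


print(step(2, "jump"))        # (4, 0.0, False): Mario clears the pit
print(step(2, "move_right"))  # (3, -10.0, True): Mario falls in
```

Keeping the environment a pure function of `(state, action)` mirrors the description above; many RL libraries instead store the state inside an environment object and expose only the action as input.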

🎁 Reward: The Feedback from the Environment

In reinforcement learning, the reward is the feedback that the agent gets from the environment after taking an action. It’s the carrot on the stick 🥕 that motivates the agent’s behavior. Rewards can be positive (gaining points for collecting a power-up) or negative (losing a life for falling into a pit). The agent’s goal is to maximize the sum of rewards it collects over time, not just the reward for its very next action. Think of it like this: the rewards are the game score in Super Mario. You want to collect as many coins (positive rewards) as possible while avoiding enemies, which cost you lives (negative rewards).
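The quantity the agent tries to maximize can be computed directly from the rewards in one episode. This minimal sketch sums them; the optional `gamma` parameter is an assumption beyond the text, showing the common discounted variant in which each later reward is multiplied by one more factor of `gamma`. With the default `gamma=1.0` it is the plain sum described above, and the episode rewards are invented for illustration.

```python
from typing import Sequence


def total_return(rewards: Sequence[float], gamma: float = 1.0) -> float:
    """Sum of an episode's rewards, each weighted by gamma ** (time step).

    gamma=1.0 gives the plain sum; gamma < 1 weights near-term rewards more.
    """
    total = 0.0
    for t, r in enumerate(rewards):
        total += (gamma ** t) * r
    return total


# Hypothetical episode: coin (+1), hit by an enemy (-1), power-up (+5)
episode = [1.0, -1.0, 5.0]
print(total_return(episode))             # 5.0
print(total_return(episode, gamma=0.5))  # 1.75
```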

🧭 State: The Situation at Hand

The state is the current situation returned by the environment: a snapshot of the environment at a given time. In a video game, this could be the current screen that the player sees. In more technical terms, a state is a description that includes everything necessary to determine what happens next; in other words, it’s the context the agent needs to decide its next action. For instance, in a game of chess, the state would be the positions of all the pieces on the board. The agent (player) uses this information to decide its next move.
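Strictly speaking, piece positions alone don’t fully determine what happens next in chess: whose turn it is, castling rights, a possible en passant capture, and the halfmove clock (used by the fifty-move rule) matter too. Standard FEN (Forsyth–Edwards Notation) packs all of this into one string. The minimal parser below is written for illustration only and does not validate its input.

```python
from typing import Dict

# FEN for the standard starting position.
START_FEN = "rnbqkbnr/pppppppp/8/8/8/8/PPPPPPPP/RNBQKBNR w KQkq - 0 1"


def parse_fen(fen: str) -> Dict[str, object]:
    """Unpack a FEN string into a dictionary describing the full chess state."""
    placement, to_move, castling, en_passant, halfmove, fullmove = fen.split()
    board: Dict[str, str] = {}
    for rank_index, row in enumerate(placement.split("/")):
        rank = 8 - rank_index                 # FEN lists rank 8 first
        file_index = 0
        for ch in row:
            if ch.isdigit():
                file_index += int(ch)         # a digit means that many empty squares
            else:
                square = "abcdefgh"[file_index] + str(rank)
                board[square] = ch            # uppercase = White, lowercase = Black
                file_index += 1
    return {
        "board": board,
        "to_move": to_move,                   # "w" or "b"
        "castling": castling,                 # e.g. "KQkq", or "-" if none
        "en_passant": en_passant,             # target square, or "-"
        "halfmove_clock": int(halfmove),
        "fullmove_number": int(fullmove),
    }


state = parse_fen(START_FEN)
print(len(state["board"]))   # 32
print(state["board"]["e1"])  # K
print(state["to_move"])      # w
```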

⚡ Action: The Choices Made by the Agent

Actions are the choices made by the agent: the different moves it can make in a given state. For example, in a game of tic-tac-toe, an action is the agent (player) placing its mark in an empty square. In each state, the agent selects an action based on its policy. The selected action is then sent to the environment, which responds with the next state and a reward. In our Super Mario game, actions could include “move left”, “move right”, “jump”, and so on. The better the actions you choose, the higher the total reward you’re likely to receive from the environment.
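In tic-tac-toe, the set of available actions depends on the state: it’s simply the list of empty squares. Here is a small sketch, assuming the board is stored as a tuple of nine cells read row by row, with `" "` marking an empty square (a representation chosen for illustration). Applying an action produces the next state.

```python
from typing import List, Tuple

Board = Tuple[str, ...]   # 9 cells, row by row; " " marks an empty square


def legal_actions(board: Board) -> List[int]:
    """In tic-tac-toe, an action is placing a mark in any empty square."""
    return [i for i, cell in enumerate(board) if cell == " "]


def apply_action(board: Board, square: int, mark: str) -> Board:
    """Return the next board after `mark` is placed on `square`."""
    if board[square] != " ":
        raise ValueError(f"square {square} is already taken")
    return board[:square] + (mark,) + board[square + 1:]


board: Board = ("X", "O", " ",
                " ", "X", " ",
                " ", " ", "O")
print(legal_actions(board))        # [2, 3, 5, 6, 7]
next_board = apply_action(board, 6, "X")
print(legal_actions(next_board))   # [2, 3, 5, 7]
```

Returning a new tuple instead of mutating the board keeps each state intact, which makes it easy to compare the state before and after an action.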

🧭 Conclusion

And there we have it! You’ve just navigated the core concepts of reinforcement learning: Agent, Environment, Reward, State, and Action. With these concepts, you now hold the keys to understanding this intriguing field of machine learning. 🎓 Remember, the agent is our learner that interacts with the environment. It makes decisions based on the state of the environment, and these decisions are the actions it takes. The feedback it gets from its actions is the reward. The ultimate goal of the agent is to maximize the sum of these rewards over time. This was just the tip of the iceberg. Reinforcement learning is a vast field with many more exciting concepts and techniques to explore. So, keep learning, keep exploring, and most importantly, have fun while you’re at it! 🚀
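Putting the pieces together, one episode is a loop: the agent observes the state, its policy picks an action, the environment returns the next state and a reward, and the rewards add up. The sketch below uses a hypothetical one-dimensional “Mario” level; the names `env_step`, `careful_policy`, `random_policy`, `PIT`, and the reward values are all illustrative assumptions. It compares a sensible hand-written policy with one that acts at random; a learning agent’s job would be to discover something like `careful_policy` from the rewards alone.

```python
import random
from typing import Callable, Tuple

LEVEL_LENGTH = 6                  # hypothetical level: cells 0..5, flag at cell 5
PIT = 3                           # hypothetical pit location
ACTIONS = ("move_right", "jump")


def env_step(state: int, action: str) -> Tuple[int, float, bool]:
    """Environment: takes (state, action), returns (next_state, reward, done)."""
    next_state = state + (2 if action == "jump" else 1)
    if next_state == PIT:
        return next_state, -10.0, True          # fell into the pit
    if next_state >= LEVEL_LENGTH - 1:
        return LEVEL_LENGTH - 1, 10.0, True     # reached the flag
    return next_state, 0.0, False


def careful_policy(state: int) -> str:
    """Jump only when the pit is the very next cell."""
    return "jump" if state + 1 == PIT else "move_right"


def random_policy(state: int) -> str:
    """Ignore the state and pick any action at random."""
    return random.choice(ACTIONS)


def run_episode(policy: Callable[[int], str]) -> float:
    """One pass of the agent-environment loop; returns the sum of rewards."""
    state, total, done = 0, 0.0, False
    while not done:                                    # every action moves right, so this ends
        action = policy(state)                         # agent: state -> action
        state, reward, done = env_step(state, action)  # environment responds
        total += reward                                # accumulate the feedback
    return total


print(run_episode(careful_policy))   # 10.0
random.seed(0)
wins = sum(run_episode(random_policy) > 0 for _ in range(1000))
print(f"random policy reached the flag in {wins} of 1000 episodes")
```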

Curious about the future? Stick around for more! 🚀
