⚡ “Dive into the brilliant world of TRPO, where ordinary Policy Optimization meets exotic KL-Divergence Constraints. This ain’t your grandpa’s machine learning!”

Are you on a quest to decode the marvels of reinforcement learning algorithms? Have you encountered the term TRPO and wondered what it stands for? Or perhaps you’re intrigued by how KL-Divergence constraints come into play? If so, you’ve landed in the right place! 🚀 In this post, we’ll journey together through the depths of Trust Region Policy Optimization (TRPO) with KL-Divergence constraints. By the end of our exploration, you’ll not only understand what TRPO is, but also grasp how to navigate the seemingly complex terrain of KL-Divergence constraints with ease. So buckle up, it’s time for a thrilling ride into the world of advanced reinforcement learning!

🔍 Setting the Stage: Understanding Policy Optimization

Before we dive head-first into TRPO, it’s crucial to get a firm grasp of what policy optimization is all about. In reinforcement learning, policy optimization is the process of refining the agent’s policy (its rule for choosing actions in each state) so that it maximizes the cumulative reward. You can think of it as training a puppy to perform tricks 🐶. The more treats (rewards) the puppy gets, the better it becomes at performing the tricks (policy).

Traditional policy optimization methods, such as vanilla policy gradient (REINFORCE), work by nudging the current policy along the gradient of the performance function. However, nothing in the gradient tells you how big a step is safe: a step that is too large can wreck a policy that was working, and performance may never recover. Enter TRPO, a policy optimization method built on a theoretical guarantee of monotonic improvement, which the practical algorithm approximates with a trust region approach. This keeps our policy updates from straying too far from the current policy, avoiding drastic, potentially harmful changes. It’s like keeping our puppy on a leash while training, to prevent it from running off in a potentially dangerous direction.

🎯 Trust Region Policy Optimization (TRPO): A Closer Look

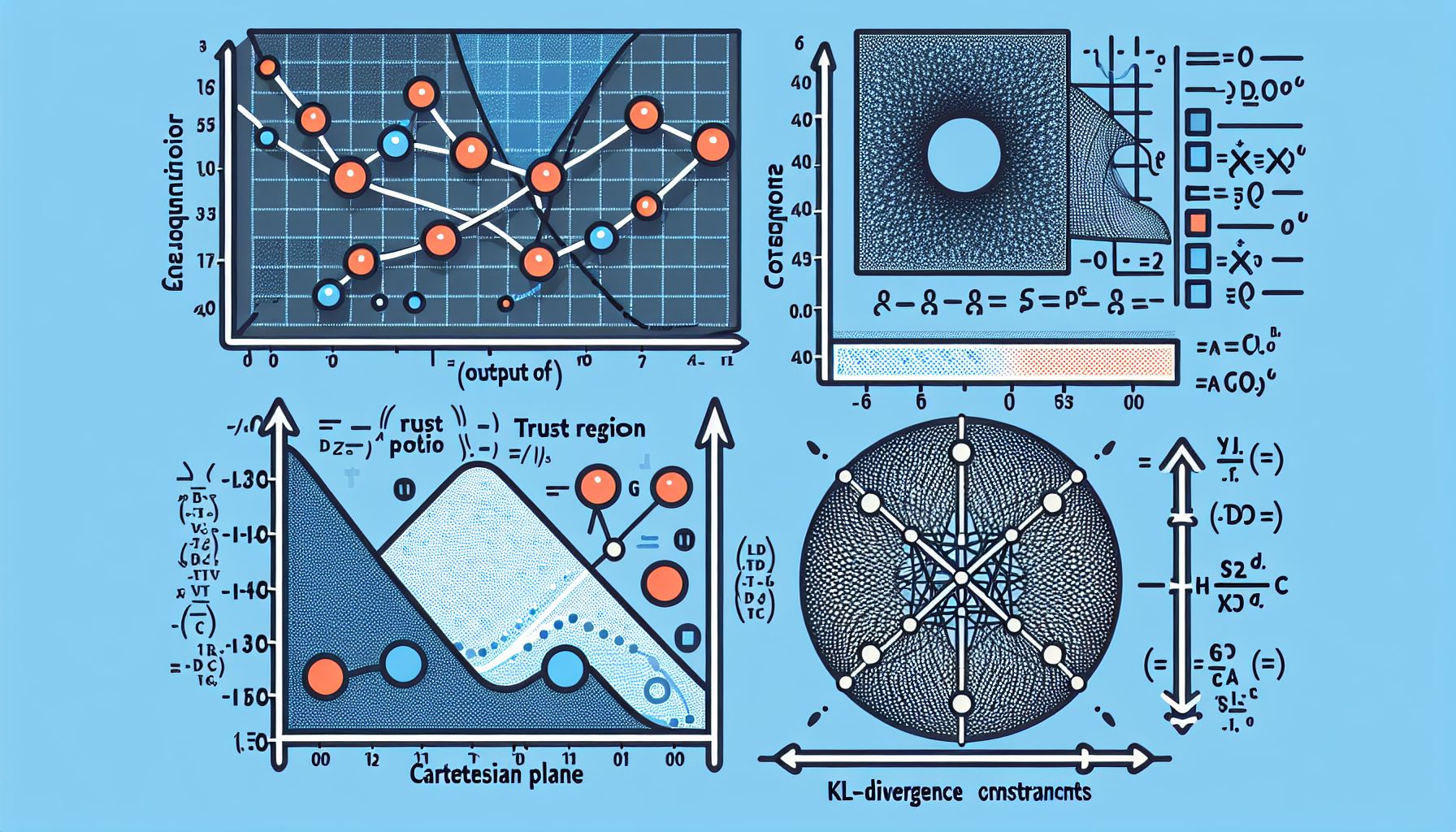

The crux of TRPO lies in its ability to define a boundary, or a ‘trust region’, within which it allows policy updates. It’s like setting a playground for our puppy to play and learn, but not allowing it to go beyond the fence. To ensure the new policy doesn’t stray too far from the old one, TRPO uses Kullback-Leibler (KL) divergence as a measure of the distance between the old and new policy. The KL divergence quantifies how much one probability distribution diverges from another, providing a measure of ‘safety’ for our policy update. Here’s the cool part: TRPO formulates the policy optimization problem as a constrained optimization problem, where the objective is to maximize a surrogate estimate of the expected return (computed from data collected with the old policy), subject to a constraint on the KL-divergence between the old and new policy. In mathematical terms:

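$$
\text{Maximize:} \quad \mathbb{E}\left[ \frac{\pi(a|s)}{\pi_{\text{old}}(a|s)} \, A^{\pi_{\text{old}}}(s, a) \right]
$$

$$
\text{Subject to:} \quad \mathbb{E}\left[ \mathrm{KL}\left[ \pi_{\text{old}}(\cdot|s), \, \pi(\cdot|s) \right] \right] \le \delta
$$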

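Here, π(a|s) is the new policy, π_old(a|s) is the old policy, and A^π_old(s, a) is the advantage function under the old policy, which measures how much better action a is than the old policy’s average behavior in state s. The expectations are taken over states and actions sampled with the old policy. The constraint keeps the average KL-divergence between the old and new policy at or below a small threshold δ, so the new policy can’t stray too far from the old one.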
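To make the objective and the constraint a bit more concrete, here is a minimal pure-Python sketch for a policy with discrete actions. The function names (`surrogate_objective`, `mean_kl`, `satisfies_trust_region`) and the list-based inputs are assumptions made for illustration, not part of any particular library. A real implementation would compute these quantities on batches of tensors.

```python
import math

def surrogate_objective(new_probs, old_probs, advantages):
    """Sample mean of (pi(a|s) / pi_old(a|s)) * A(s, a).

    Each list holds one entry per sampled (state, action) pair,
    with the actions drawn from the old policy.
    """
    terms = [(p_new / p_old) * adv
             for p_new, p_old, adv in zip(new_probs, old_probs, advantages)]
    return sum(terms) / len(terms)

def mean_kl(old_dists, new_dists):
    """Mean over sampled states of KL(pi_old(.|s) || pi(.|s)).

    Each element is a full discrete action distribution for one state.
    Assumes the new policy gives nonzero probability wherever the old one does.
    """
    total = 0.0
    for p_old, p_new in zip(old_dists, new_dists):
        total += sum(po * math.log(po / pn)
                     for po, pn in zip(p_old, p_new) if po > 0.0)
    return total / len(old_dists)

def satisfies_trust_region(old_dists, new_dists, delta):
    """True if the candidate policy stays inside the trust region."""
    return mean_kl(old_dists, new_dists) <= delta
```

With δ = 0.01, moving a 50/50 action distribution to 52/48 passes the check, while jumping to 90/10 does not. That is the fence around the playground in action.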

📐 KL-Divergence: A Safety Net for Policy Updates

Think of KL-Divergence as a safety net or a leash for our policy puppy. It ensures our policy updates are safe and controlled, and don’t run wild.

KL-Divergence, named after Solomon Kullback and Richard Leibler, measures how one probability distribution diverges from a second, reference probability distribution. It is zero when the two distributions are identical, grows as they drift apart, and is not symmetric, so the order of the arguments matters. In the context of TRPO, it measures how much our new policy, π(a|s), diverges from the old policy, π_old(a|s).
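For reference, here is the standard definition for two discrete distributions P and Q, followed by the same formula written for the policies in one state s. It assumes a finite action set; for continuous actions the sum becomes an integral.

$$
D_{\mathrm{KL}}(P \,\|\, Q) = \sum_{x} P(x) \log \frac{P(x)}{Q(x)}
$$

$$
D_{\mathrm{KL}}\big(\pi_{\text{old}}(\cdot|s) \,\|\, \pi(\cdot|s)\big) = \sum_{a} \pi_{\text{old}}(a|s) \log \frac{\pi_{\text{old}}(a|s)}{\pi(a|s)}
$$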

By constraining the KL-Divergence, TRPO ensures the updates to the policy are always within a safe region, leading to stable and efficient learning.

🛠 Implementing TRPO: Practical Tips

Now that we’ve understood the theoretical foundations of TRPO, let’s explore some practical tips for implementing it:

Select a Suitable Trust Region Size

The size of the trust region, determined by δ, plays a crucial role in TRPO’s performance. Too small a value can make learning painfully slow, while too large a value can make it unstable. Values around 0.01 are a common starting point, but the right setting depends on your problem. It’s like setting the right length for our puppy’s leash.
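To see how δ translates into an actual step length, here is a small sketch. It relies on the usual quadratic approximation of the KL constraint, 0.5 · stepᵀ F step ≤ δ, where F is the Fisher information matrix of the policy. The function name `max_step_scale` and the callback `fisher_vector_product` are hypothetical names chosen for this example.

```python
import math

def max_step_scale(direction, fisher_vector_product, delta):
    """Largest beta such that the step beta * direction satisfies
    0.5 * (beta * s)^T F (beta * s) <= delta.

    `fisher_vector_product(v)` is an assumed callback returning F @ v as a list.
    """
    f_s = fisher_vector_product(direction)
    s_f_s = sum(s * f for s, f in zip(direction, f_s))
    return math.sqrt(2.0 * delta / s_f_s)

# Toy usage with F set to the identity matrix (an assumption for illustration).
identity = lambda v: list(v)
small_leash = max_step_scale([3.0, 4.0], identity, delta=0.01)
long_leash = max_step_scale([3.0, 4.0], identity, delta=0.04)
```

Note the square root: quadrupling δ only doubles the step, so the leash gets longer more slowly than the number suggests.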

Use a Good Optimization Algorithm

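TRPO doesn’t take a plain gradient step. It uses second-order information about the KL constraint (the Fisher information matrix) to pick the update direction. Because forming and inverting that matrix is too expensive for large networks, TRPO runs the Conjugate Gradient method to solve for the direction approximately, using only matrix-vector products, and then applies a backtracking line search to check that the surrogate objective improves and the KL constraint holds. Make sure to use a good implementation of these pieces for best results.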
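As a rough illustration of the Conjugate Gradient step, here is a minimal pure-Python sketch that solves F x = g without ever forming or inverting F. In TRPO, g would be the gradient of the surrogate objective and x the resulting search direction. The callback name `fisher_vector_product` and the toy 2x2 matrix are assumptions for this example. Real implementations work on flattened parameter tensors and get the matrix-vector product from automatic differentiation.

```python
def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def conjugate_gradient(fisher_vector_product, g, iters=10, tol=1e-10):
    """Approximately solve F x = g, touching F only through F @ v products."""
    x = [0.0] * len(g)
    r = list(g)  # residual g - F x, which equals g while x is zero
    p = list(g)  # current search direction
    rr = dot(r, r)
    for _ in range(iters):
        if rr < tol:
            break
        f_p = fisher_vector_product(p)
        alpha = rr / dot(p, f_p)
        x = [xi + alpha * pi for xi, pi in zip(x, p)]
        r = [ri - alpha * fi for ri, fi in zip(r, f_p)]
        rr_new = dot(r, r)
        p = [ri + (rr_new / rr) * pi for ri, pi in zip(r, p)]
        rr = rr_new
    return x

# Toy symmetric positive-definite matrix standing in for the Fisher matrix.
F = [[4.0, 1.0], [1.0, 3.0]]
toy_fvp = lambda v: [dot(row, v) for row in F]
direction = conjugate_gradient(toy_fvp, [1.0, 2.0])
```

For this toy system the exact solution is x = (1/11, 7/11), which the loop reaches in two iterations. In practice only a handful of iterations are run, and the result is treated as an approximation.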

Experiment with Different Baselines

The choice of the baseline, used to reduce variance in policy gradient estimates, can significantly affect TRPO’s performance. Try different options, such as a constant average return or a learned state-value function, and different advantage estimators built on top of them (for example, Generalized Advantage Estimation), to see what works best for your problem.
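Here is a minimal sketch of what a baseline does, assuming a single episode stored as plain Python lists. The helper names (`discounted_returns`, `advantages_with_baseline`) are made up for this example, and `baseline_values` stands in for whatever baseline you choose, such as the predictions of a learned value function.

```python
def discounted_returns(rewards, gamma):
    """Discounted return-to-go for every time step of one episode."""
    returns = []
    running = 0.0
    for reward in reversed(rewards):
        running = reward + gamma * running
        returns.append(running)
    returns.reverse()
    return returns

def advantages_with_baseline(rewards, baseline_values, gamma=0.99):
    """Advantage estimate: return-to-go minus the baseline for that state."""
    returns = discounted_returns(rewards, gamma)
    return [g - b for g, b in zip(returns, baseline_values)]

# Toy episode with three steps and a constant baseline of 1.0.
toy_returns = discounted_returns([1.0, 1.0, 1.0], gamma=0.5)
toy_advantages = advantages_with_baseline([1.0, 1.0, 1.0], [1.0, 1.0, 1.0], gamma=0.5)
```

Swapping in a different `baseline_values` list is all it takes to compare baselines: the returns stay the same, and only the values subtracted from them change.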

🧭 Conclusion

Just like a reliable guide in a complex maze, Trust Region Policy Optimization (TRPO) with KL-Divergence constraints offers a steadfast approach to policy optimization in reinforcement learning. By ensuring our policy updates remain within a ‘trust region’, TRPO ensures stable and efficient learning, much like keeping our training puppy on a leash. Remember, the key to mastering TRPO lies in understanding its underlying principles and experimenting with its components. So, don’t be afraid to play around with the trust region size, the optimization algorithm, and the baseline. Happy learning! 🚀
