
⚡ “Ever wondered how Facebook accurately tags your photos? Enter the world of vision-language models, the unsung heroes behind image captioning systems!”

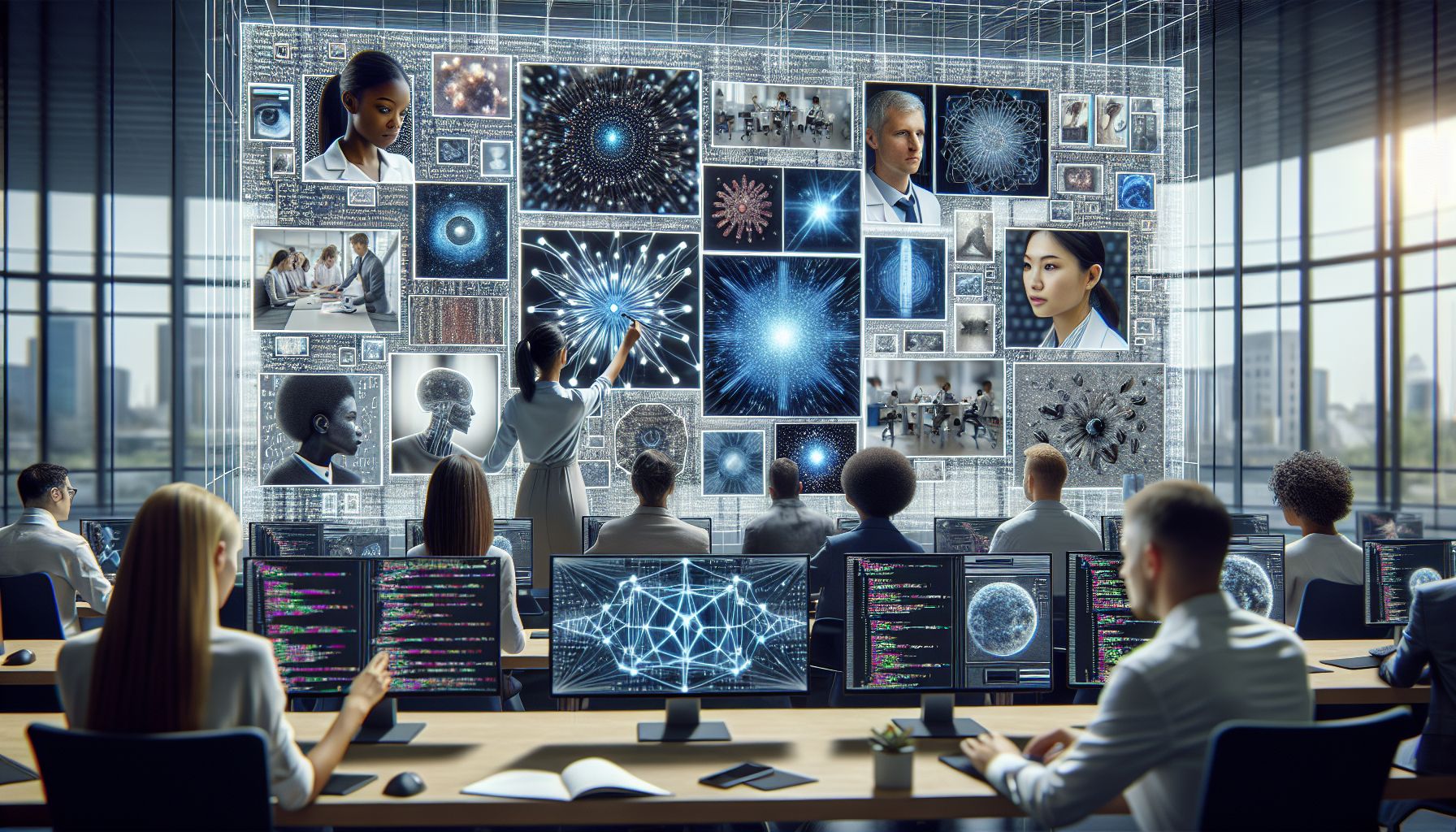

Greetings, tech enthusiasts! Ever wondered how Facebook describes your uploaded images to visually impaired users? Or how Google Photos retrieves images based on textual descriptions? Technologies like these rely on models that connect images and text, and one of the core tasks in this area is image captioning. Today, we’ll dive into image captioning systems and explore how to build one using Vision-Language Models. 🚀

In the simplest terms, image captioning is the process of generating a textual description of an image. This requires interpreting the visual content and then expressing that interpretation in natural language, which is why the task sits at the intersection of Computer Vision and Natural Language Processing (NLP).

Whether you’re an AI enthusiast, a student, a researcher, or a developer, this guide will walk you through the key components of an image captioning system, from the fundamentals to the finer details.

🎯 Understanding Image Captioning Systems

"Creating Visual Narratives with Vision-Language Models"

Image captioning systems combine two main components: a visual model and a language model. The visual model interprets the image content, while the language model generates a coherent, contextually relevant description of it. In classic designs, the visual model is a Convolutional Neural Network (CNN) that extracts visual features from the image, and the language model is a Recurrent Neural Network (RNN) that takes those features as input and generates the caption one word at a time. Newer systems often replace one or both parts with Transformers.

🧩 Decoding Vision-Language Models

Vision-Language Models, as the name suggests, are models that work with both images (vision) and text (language). They are pre-trained on large-scale datasets of image-text pairs and can be fine-tuned or extended for a specific task like image captioning. Popular examples include OpenAI’s CLIP and Salesforce’s BLIP. Google’s ViT (Vision Transformer) is worth knowing too: it is a vision-only model, but it often serves as the image encoder inside vision-language models.

Here is a basic code snippet showing how to use the CLIP model for feature extraction:

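    import torch
    import clip  # OpenAI's CLIP package installs as the `clip` module
    from PIL import Image

    device = "cuda" if torch.cuda.is_available() else "cpu"

    # Load the model together with its matching image preprocessing pipeline
    model, transform = clip.load("ViT-B/32", device=device)

    # Preprocess the image and add a batch dimension
    image = transform(Image.open("image.jpg")).unsqueeze(0).to(device)

    # Encode without tracking gradients; the result is one embedding per image
    with torch.no_grad():
        image_features = model.encode_image(image)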

Models like CLIP are trained to capture the relationship between images and their corresponding textual descriptions. CLIP on its own only produces embeddings and does not generate text, so for captioning its image features are typically passed to a language decoder. During fine-tuning, that combined model learns to generate relevant captions for a given image.
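To make the idea of an image-text "relationship" concrete: contrastive models such as CLIP place images and captions in a shared embedding space, where a matching pair gets a high cosine similarity. Here is a minimal, framework-free sketch of that comparison. The 3-dimensional vectors are made up for illustration (real embeddings would come from the model), and `best_caption` is a hypothetical helper, not part of any library.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def best_caption(image_vec, caption_vecs):
    """Return the caption whose embedding is closest to the image embedding."""
    return max(caption_vecs, key=lambda c: cosine_similarity(image_vec, caption_vecs[c]))

# Toy embeddings, invented for illustration only.
image_embedding = [0.9, 0.1, 0.2]
caption_embeddings = {
    "a dog running on grass": [0.8, 0.2, 0.1],
    "a plate of pasta": [0.1, 0.9, 0.3],
}

print(best_caption(image_embedding, caption_embeddings))
```

Note that this only ranks existing captions. Generating a new caption still needs a decoder on top of the image features.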

⚙️ Building an Image Captioning System

Let’s dive into the main steps involved in building an image captioning system using vision-language models:

Step 1: Data Preparation

The first step involves preparing the dataset, which usually consists of images and their corresponding captions. The quality and quantity of the dataset greatly impact the model’s performance. Popular datasets used for this purpose include MSCOCO, Flickr8k, and Flickr30k.
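On the caption side, preparation usually means tokenizing each caption, building a vocabulary, and wrapping every caption in start and end markers. The sketch below shows one simple way to do that in plain Python. The special token names, the whitespace tokenizer, and the two sample captions are assumptions made for illustration; real pipelines often use a subword tokenizer instead.

```python
from collections import Counter

PAD, START, END, UNK = "<pad>", "<start>", "<end>", "<unk>"

def tokenize(caption):
    """Lowercase a caption and split it on whitespace, dropping basic punctuation."""
    return [w.strip(".,!?") for w in caption.lower().split() if w.strip(".,!?")]

def build_vocab(captions, min_count=1):
    """Map each sufficiently frequent word to an integer id, after the special tokens."""
    counts = Counter(word for c in captions for word in tokenize(c))
    words = sorted(w for w, n in counts.items() if n >= min_count)
    return {tok: i for i, tok in enumerate([PAD, START, END, UNK] + words)}

def encode(caption, vocab):
    """Turn a caption into ids, wrapped in start/end markers."""
    ids = [vocab.get(w, vocab[UNK]) for w in tokenize(caption)]
    return [vocab[START]] + ids + [vocab[END]]

# Two invented captions standing in for a real dataset.
captions = ["A dog runs on the grass.", "A cat sits on the sofa."]
vocab = build_vocab(captions)
print(encode("A dog sits on the moon", vocab))
```

Words that never appeared in the training captions ("moon" here) fall back to the `<unk>` id, which is why a larger and more varied dataset helps.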

Step 2: Feature Extraction

As mentioned earlier, the visual model is used to extract features from images. These features are a numerical representation of the image that the rest of the system can process. The image encoder of a vision-language model like CLIP, or a standalone vision model like ViT, can be used for this purpose.

Step 3: Model Training

Once we have the features, we feed them into our language model to learn the mapping between image features and their corresponding captions. This is done by training the model to predict each word in the caption given the image features and the words that precede it in the ground-truth caption.
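This next-word setup is commonly called teacher forcing. The sketch below shows how one caption turns into several (context, target) training pairs, and how a cross-entropy loss scores the prediction at each position. The token ids and the uniform "model output" are placeholders invented for illustration; a real model would also receive the image features and produce its own probabilities.

```python
import math

def teacher_forcing_pairs(token_ids):
    """For each position t, pair the tokens before t (the context) with token t (the target)."""
    return [(token_ids[:t], token_ids[t]) for t in range(1, len(token_ids))]

def cross_entropy(predicted_probs, target_id):
    """Negative log-probability the model assigned to the correct next token."""
    return -math.log(predicted_probs[target_id])

# Hypothetical ids for "<start> a dog runs <end>".
caption_ids = [1, 4, 6, 9, 2]
pairs = teacher_forcing_pairs(caption_ids)
for context, target in pairs:
    print(context, "->", target)

# Stand-in for model output: a uniform distribution over a 13-word vocabulary.
uniform = [1 / 13] * 13
loss = sum(cross_entropy(uniform, target) for _, target in pairs) / len(pairs)
print(round(loss, 3))
```

Training pushes this average loss down by raising the probability the model gives to the correct next word at every position.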

Step 4: Caption Generation

After the model has been trained, it can be used to generate captions for new images. This is done by feeding the image features to the model and generating words one by one until the model predicts a predefined end token or a maximum caption length is reached.
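The simplest version of this loop is greedy decoding: at every step, pick the single highest-scoring next token. Here is a minimal sketch. The `next_token_scores` callback is a hypothetical stand-in for a trained model, and `toy_scores` simply replays a fixed caption so the example runs without any weights.

```python
def greedy_decode(next_token_scores, image_features, start_id, end_id, max_len=20):
    """Generate token ids one at a time, always taking the highest-scoring next token."""
    tokens = [start_id]
    for _ in range(max_len):
        scores = next_token_scores(image_features, tokens)
        next_id = max(range(len(scores)), key=scores.__getitem__)
        tokens.append(next_id)
        if next_id == end_id:
            break
    return tokens

# Toy stand-in for a trained model: it ignores the image and replays a fixed caption.
SCRIPT = [1, 4, 6, 9, 2]  # hypothetical ids for "<start> a dog runs <end>"

def toy_scores(image_features, tokens):
    scores = [0.0] * 13
    scores[SCRIPT[min(len(tokens), len(SCRIPT) - 1)]] = 1.0
    return scores

print(greedy_decode(toy_scores, image_features=None, start_id=1, end_id=2))
```

The `max_len` cap matters in practice, since a model is never guaranteed to emit the end token. Beam search is a common alternative that keeps several candidate captions alive instead of one.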

💡 Tips for Better Performance

Building an efficient image captioning system can be challenging, but here are a few tips to improve your model’s performance:

Data Augmentation

Techniques like rotation, scaling, flipping, and cropping can augment your dataset and help the model generalize better.
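One caveat specific to captioning: the caption has to stay true after the image changes. A horizontal flip, for example, turns "on the left" into "on the right". The sketch below illustrates flipping and cropping on a tiny image stored as a list of pixel rows, plus a hypothetical `flip_caption` helper that keeps the text in sync. In a real project you would likely reach for an image library instead of plain lists.

```python
def horizontal_flip(image):
    """Mirror an image (a list of pixel rows) left to right."""
    return [row[::-1] for row in image]

def center_crop(image, height, width):
    """Cut a height x width window out of the middle of the image."""
    top = (len(image) - height) // 2
    left = (len(image[0]) - width) // 2
    return [row[left:left + width] for row in image[top:top + height]]

def flip_caption(caption):
    """Swap 'left' and 'right' so the caption still matches a mirrored image."""
    swap = {"left": "right", "right": "left"}
    return " ".join(swap.get(word, word) for word in caption.split())

# A 4x4 toy "image" whose pixel values are just their positions.
image = [
    [1, 2, 3, 4],
    [5, 6, 7, 8],
    [9, 10, 11, 12],
    [13, 14, 15, 16],
]
print(horizontal_flip(image)[0])
print(center_crop(image, 2, 2))
print(flip_caption("a dog on the left of a bench"))
```

Aggressive crops carry a similar risk: if the crop removes the object the caption mentions, the pair becomes noise rather than extra training data.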

Fine-Tuning

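Fine-tuning the pre-trained vision-language model on captions from your own domain (product photos or medical images, for example) usually improves performance over relying on frozen, general-purpose features.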

Regularization

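Dropout helps prevent overfitting by randomly disabling units during training. Batch Normalization is mainly a tool for stabilizing training, but it can have a mild regularizing effect as well.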
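To show what Dropout actually does to a layer’s activations, here is a small sketch of the common "inverted" variant: each value is zeroed with probability `p` during training, and the survivors are scaled up so the expected sum stays the same. The function and its parameter names are written for illustration; deep learning frameworks ship their own dropout layers.

```python
import random

def dropout(activations, p=0.5, training=True, rng=random):
    """Inverted dropout: zero each value with probability p and rescale the survivors."""
    if not training or p == 0.0:
        return list(activations)
    keep = 1.0 - p
    return [a / keep if rng.random() < keep else 0.0 for a in activations]

rng = random.Random(0)  # fixed seed so the run is repeatable
hidden = [1.0] * 8
print(dropout(hidden, p=0.5, rng=rng))         # training: a mix of 0.0 and 2.0
print(dropout(hidden, p=0.5, training=False))  # inference: values pass through unchanged
```

Because the rescaling happens at training time, nothing special is needed at inference: the layer simply becomes a no-op.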

Encoder-Decoder Architecture

Use an Encoder-Decoder architecture with Attention Mechanisms to allow the model to focus on specific parts of the image while generating each word in the caption.
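Here is a rough sketch of what "focusing" means numerically. At each decoding step, the decoder’s current state is compared with the features of every image region, the scores are turned into weights with a softmax, and the weighted average becomes the context for predicting the next word. This example uses simple dot-product scoring as an assumption (captioning models also use other scoring functions), and all vectors are toy values.

```python
import math

def softmax(scores):
    """Convert raw scores into weights that are positive and sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attend(query, region_features):
    """Dot-product attention: weight each image region by its similarity to the query."""
    scores = [sum(q * f for q, f in zip(query, region)) for region in region_features]
    weights = softmax(scores)
    dim = len(region_features[0])
    context = [
        sum(w * region[i] for w, region in zip(weights, region_features))
        for i in range(dim)
    ]
    return weights, context

# Three toy image regions with 2-dimensional features, invented for illustration.
regions = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
decoder_state = [2.0, 0.0]  # stands in for the decoder's hidden state at one step
weights, context = attend(decoder_state, regions)
print([round(w, 3) for w in weights])
print([round(c, 3) for c in context])
```

The second region gets the smallest weight because it is least similar to the decoder state, so it contributes least to the context vector for this word.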

🧭 Conclusion

Building an image captioning system is a fascinating journey through the intersection of Computer Vision and Natural Language Processing. With the advent of powerful Vision-Language Models, the task has become quite achievable yet remains challenging. But remember, every great model starts with a single line of code. So, don’t hesitate to dive in and start building. The world of AI is full of surprises and the magic of transforming images into words is just one of them. 🚀🎩
