
⚡ “Believe it or not, your artificial intelligence model is contributing to climate change. Dive into the surprising environmental impact of large-scale data analysis.”

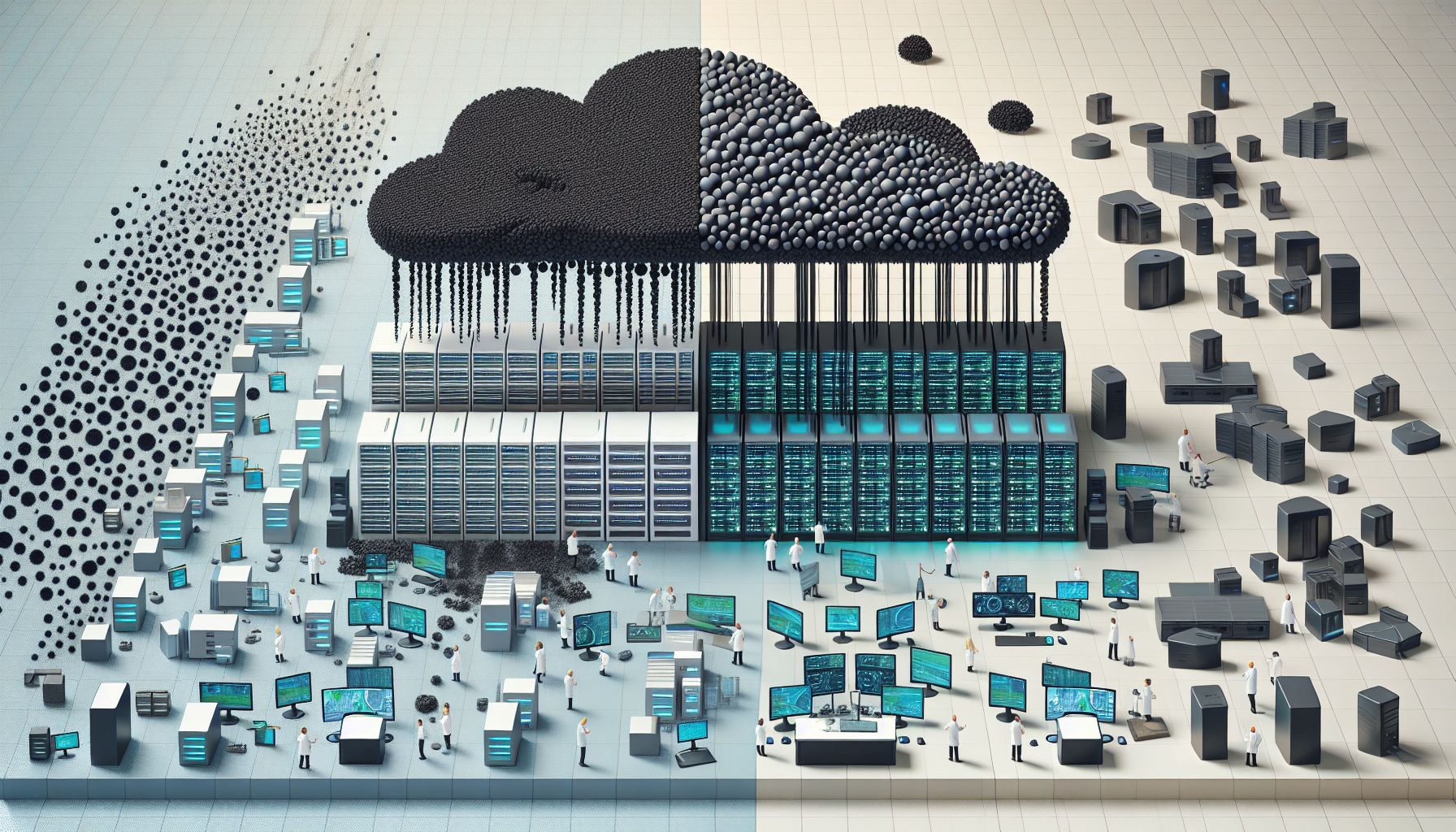

In a world where artificial intelligence (AI) and machine learning (ML) are becoming increasingly pervasive, one question often gets overlooked: what’s the environmental impact of these technologies? While we marvel at the ability of large models to master complex tasks, we tend to forget about the behind-the-scenes resources required to bring them to life. In this piece, we’re going to dive deep into the carbon footprint and efficiency of large models. We’ll shed light on the unseen side of AI and ML, exploring the environmental cost and the ways we can mitigate it.

So, buckle up for an enlightening journey into the world of AI and sustainability. 🌍💻

"Large Models: Power, Carbon Footprint Unveiled"

💡 Understanding the Carbon Footprint of Large Models

Before we delve into the specifics of large models, let’s first define what we mean by a “carbon footprint”. In the simplest terms, a carbon footprint is the total amount of greenhouse gas emissions caused by an individual, event, organization, or product, expressed as carbon dioxide equivalent.

Now, you might be wondering, how does AI contribute to this?

Training large models takes powerful hardware running for days or weeks, and that hardware draws a significant amount of electricity. How much carbon that electricity represents depends on how it was generated, but on most grids it translates into real emissions that add to the AI industry’s overall footprint. For instance, a 2019 study by researchers at the University of Massachusetts Amherst estimated that training one large language-processing model, including an automated architecture search, could emit roughly as much carbon as five cars over their lifetimes. 🚗💨
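To make the link between electricity and emissions concrete, here is a minimal back-of-the-envelope sketch. It assumes emissions can be approximated as energy used times the carbon intensity of the grid, with a data-center overhead factor (often called PUE) on top. The function name and every number in the example are hypothetical, chosen only for illustration.

```python
def training_emissions_kg(avg_power_kw, hours, pue, grid_kg_per_kwh):
    """Rough CO2-equivalent estimate (kg) for one training run.

    avg_power_kw:     average power drawn by the training hardware, in kW
    hours:            wall-clock training time
    pue:              data-center overhead multiplier (1.0 = no overhead)
    grid_kg_per_kwh:  carbon intensity of the electricity used
    """
    energy_kwh = avg_power_kw * hours * pue
    return energy_kwh * grid_kg_per_kwh


# Hypothetical run: 8 accelerators at 0.3 kW each, for 100 hours.
estimate = training_emissions_kg(
    avg_power_kw=8 * 0.3, hours=100, pue=1.5, grid_kg_per_kwh=0.4
)
print(f"{estimate:.1f} kg CO2e")
```

The same run on a cleaner grid (a lower `grid_kg_per_kwh`) produces proportionally fewer emissions, which is why where and when you train matters as much as how long.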

The Intensity of Training Large Models

Training a large model is not a single pass over the data. The model learns by working through a large dataset batch by batch, often several times, tweaking its internal parameters after each batch to reduce errors. Each full pass over the dataset is called an epoch, and the compute and energy cost grows with both the size of the dataset and the number of passes. To put it in perspective, consider GPT-3, a language model from OpenAI. It has 175 billion parameters and was trained on hundreds of gigabytes of text. It’s not hard to imagine the massive amount of energy required to train such a behemoth.
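The idea of repeated passes can be sketched with a toy example. The snippet below is only an illustration, not how any production model is trained: it fits a single parameter with plain gradient descent and counts how many parameter updates the run performs. The `train` function and the toy dataset are made up for this sketch.

```python
def train(data, epochs, lr=0.01):
    """Fit y = w * x by gradient descent; return w and the number of updates."""
    w = 0.0
    updates = 0
    for _ in range(epochs):            # one epoch = one full pass over the data
        for x, y in data:
            error = w * x - y
            w -= lr * 2 * error * x    # gradient of the squared error w.r.t. w
            updates += 1
    return w, updates


data = [(x, 3.0 * x) for x in range(1, 6)]   # toy dataset whose true w is 3
w, updates = train(data, epochs=20)
print(f"w = {w:.4f} after {updates} updates")
```

The number of updates is simply dataset size times epochs. Scale the dataset to hundreds of gigabytes and the single parameter to billions, and the energy bill follows the same multiplication.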

🚀 Optimizing the Efficiency of Large Models

While it’s true that large models consume a considerable amount of energy, it doesn’t mean we should halt their development. What we need is a balance – a way to continue advancing AI technology while minimizing its environmental impact. And that’s where optimization comes into play.

Pruning: The Art of Slimming Models

🧠 Pruning reduces the size of a model by removing its least important parameters. The result is a leaner model that can often perform nearly as well as the original while needing less memory and computation, especially at deployment. Consider a tree laden with fruit. Not all branches contribute equally to the yield. By pruning the less productive branches, you allow the tree to focus its resources on the fruitful ones. The same principle applies to large models. 🌳
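One common way to decide which parameters count as "least important" is magnitude pruning: treat the weights closest to zero as the ones to drop. That choice is an assumption of this sketch, and other importance criteria exist. The function name and sample weights are hypothetical.

```python
def magnitude_prune(weights, sparsity):
    """Zero out the fraction `sparsity` of weights with the smallest magnitude."""
    n_prune = int(len(weights) * sparsity)
    # Indices ordered by absolute value, smallest first.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = list(weights)
    for i in order[:n_prune]:
        pruned[i] = 0.0
    return pruned


weights = [0.8, -0.05, 0.3, 0.01, -0.6, 0.02]
print(magnitude_prune(weights, sparsity=0.5))
# -> [0.8, 0.0, 0.3, 0.0, -0.6, 0.0]
```

Zeroed weights only save energy when the storage format or hardware can skip them, so in practice pruning is usually paired with sparse formats or with removing whole neurons or layers.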

Quantization and Knowledge Distillation

Quantization is another technique for shrinking models. It lowers the precision of the numbers stored in the model, for example from 32-bit floating point to 8-bit integers, which cuts the memory and computation needed to handle them. Knowledge distillation, on the other hand, trains a smaller model (the student) to mimic the behavior of a larger pre-trained model (the teacher). The student learns to approximate the function the teacher has learned, capturing much of its knowledge in a model that is far cheaper to run.
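Both ideas fit in a few lines of plain Python. The sketch below assumes symmetric 8-bit quantization (one of several possible schemes) and uses a temperature-softened KL divergence as the distillation signal. It leaves out details that real training setups usually add, such as combining this term with the ordinary label loss. All function names are hypothetical.

```python
import math


def quantize_int8(values):
    """Symmetric 8-bit quantization: map floats to integers in [-127, 127]."""
    largest = max(abs(v) for v in values)
    scale = largest / 127 if largest > 0 else 1.0
    return [round(v / scale) for v in values], scale


def dequantize(quantized, scale):
    """Recover approximate float values from the integers."""
    return [q * scale for q in quantized]


def softmax(logits, temperature=1.0):
    """Softmax with temperature; higher temperatures give softer distributions."""
    top = max(logits)  # subtract the max for numerical stability
    exps = [math.exp((z - top) / temperature) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits, teacher_logits, temperature=2.0):
    """KL divergence between the teacher's and student's softened outputs."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


q, scale = quantize_int8([1.0, -0.5, 0.25])
print(q, dequantize(q, scale))
print(distillation_loss([2.0, 1.0, 0.1], [3.0, 1.0, 0.2]))
```

Each quantized value is off by at most half of `scale`, which is the precision given up in exchange for storing one byte per weight instead of four. The distillation loss shrinks toward zero as the student's outputs approach the teacher's.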

🌱 Towards Sustainable AI: Best Practices

While the aforementioned techniques can help optimize large models, we also need to incorporate sustainable practices in our AI development process. Here are a few key steps we can take:

Monitor Energy Usage

Track the energy consumed by the hardware used for model training and deployment, so you know where the footprint actually comes from.
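As a minimal sketch of what such monitoring boils down to, the snippet below turns periodic power readings into total energy. It assumes you already have timestamped power samples from somewhere, such as a power meter or the power draw your hardware reports. The function name and the readings are hypothetical.

```python
def energy_kwh(samples):
    """Integrate (time_seconds, power_watts) samples with the trapezoidal rule."""
    joules = 0.0
    for (t0, p0), (t1, p1) in zip(samples, samples[1:]):
        joules += (p0 + p1) / 2 * (t1 - t0)
    return joules / 3.6e6  # 1 kWh = 3.6 million joules


# Hypothetical readings taken every 30 minutes over a one-hour job.
readings = [(0, 250.0), (1800, 300.0), (3600, 250.0)]
print(f"{energy_kwh(readings):.3f} kWh")
```

Logging a figure like this alongside accuracy for every experiment makes energy a visible part of the trade-off rather than an afterthought.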

Efficient Data Processing

Optimize data processing tasks and use efficient algorithms, so that less energy is spent before training even starts.

Renewable Energy Sources

Whenever possible, use renewable energy sources for powering the equipment.

Consider Energy-Efficient Hardware

Choose hardware that delivers the required computational power with the least energy consumption.

Promote Transparency

Share the carbon impact of your AI projects to promote awareness and foster a culture of sustainability in the AI community.

🧭 Conclusion

AI and ML have undoubtedly revolutionized numerous sectors, offering unprecedented opportunities for growth and innovation. However, as we marvel at these advancements, it’s crucial to remember the environmental cost associated with them. Large models, while incredibly powerful, consume significant energy, leading to a considerable carbon footprint. But with the right optimization techniques and sustainable practices, we can continue to harness the power of AI while mitigating its environmental impact. The journey to sustainable AI might be challenging, but it’s a necessary one. As we strive to push the boundaries of technology, let’s ensure we do so in a way that respects and preserves our planet. After all, there’s no AI on a dead planet. 🌍🌱
