
⚡ “Imagine if AI could reliably do what you actually meant, guided by just a little human feedback! Dive into the family of methods working toward that goal.”

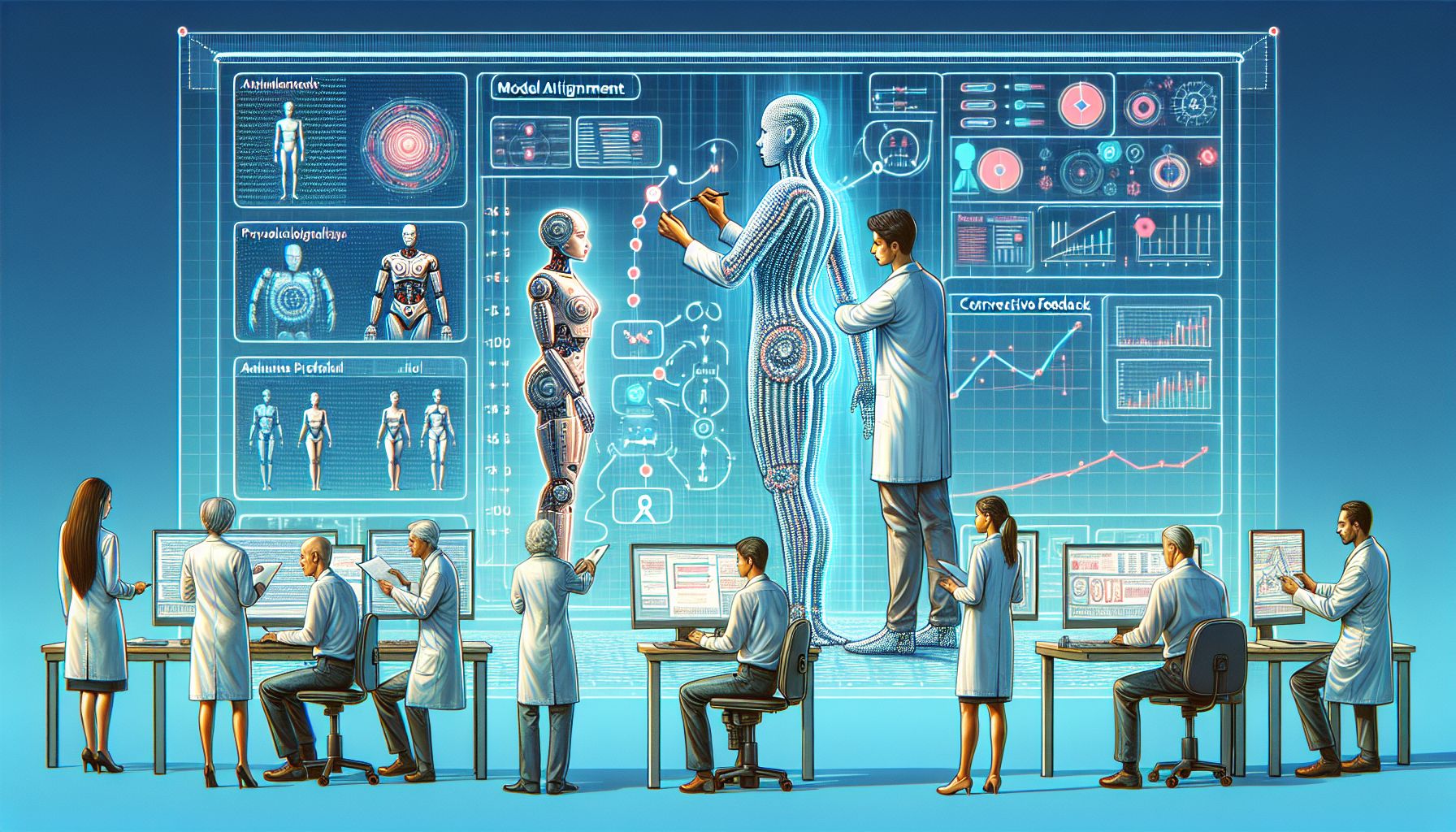

Welcome to the world of artificial intelligence (AI)! Here, we are constantly exploring new ways to improve machine learning models. One approach that has gained significant traction in recent years is model alignment using human feedback. In today’s blog post, we’ll dive into what model alignment is, why it is essential, and how human feedback plays a crucial role in achieving it. Whether you’re an AI enthusiast, a seasoned data scientist, or simply a curious reader, this post aims to give you a solid understanding of the subject. So, buckle up, and let’s get started! 🚀

🎯 Understanding Model Alignment

"Transforming Model Alignment with Human Insight"

Model alignment is a term used in machine learning to describe the process of aligning the objectives of an AI system with the intentions or goals of the human user. The aim is for the AI system to understand and adhere to the user’s values, thereby minimizing the risk of unwanted outcomes. Imagine if you had a super-intelligent AI, but it didn’t understand your ultimate goal. It might end up causing more harm than good. The classic example is the Paperclip Maximizer thought experiment, where an AI dedicated to manufacturing paperclips turns the entire planet into a paperclip factory because it pursues that one goal literally, with no regard for anything else humans care about. Sounds scary, right? 🔍 That is exactly why model alignment is so crucial.

🧩 The Role of Human Feedback

Human feedback is the backbone of model alignment. By providing feedback, humans can train the machine learning model to align better with human values and objectives. Here’s a simple analogy: think of training a dog 🐶. The dog doesn’t understand our language, but we can still train it to behave in certain ways using rewards and punishments. Similarly, we can train AI models using positive and negative feedback to align them with our goals.

There are multiple ways to implement human feedback in model alignment, including:

Supervised Learning from Human Feedback (SLHF)

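The AI is trained on a dataset created by humans who demonstrate the correct action in different scenarios. This approach is more commonly called supervised fine-tuning (SFT) or learning from demonstrations.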

Reinforcement Learning from Human Feedback (RLHF)

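The AI tries out actions and receives rewards or penalties derived from human judgments of those actions, often through a learned reward model, and gradually shifts toward the behavior people rate highly.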

Iterative Feedback Collection

The AI receives continuous feedback from humans, allowing it to refine its understanding and improve its performance over time.
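To make the first approach in this list concrete, here is a minimal sketch of learning from human demonstrations. Everything in it is an assumption made for illustration: the situations, the actions, and the `fit_policy` helper are hypothetical, and a real system would train a neural network on far richer data instead of counting votes.

```python
from collections import Counter, defaultdict

# Hypothetical demonstrations: (situation, action a human chose in it).
DEMONSTRATIONS = [
    ("greeting", "say_hello"),
    ("greeting", "say_hello"),
    ("greeting", "ignore"),
    ("question", "answer"),
    ("question", "answer"),
    ("insult", "stay_polite"),
]

def fit_policy(demonstrations):
    """Map each situation to the action humans demonstrated most often."""
    counts = defaultdict(Counter)
    for situation, action in demonstrations:
        counts[situation][action] += 1
    return {s: c.most_common(1)[0][0] for s, c in counts.items()}

policy = fit_policy(DEMONSTRATIONS)
print(policy["greeting"])  # the most frequently demonstrated action for a greeting
```

The key property is that the AI never sees a reward here. It simply imitates what people did, which is why this style of training is usually a starting point and not the whole story.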

🛠 Techniques for Model Alignment using Human Feedback

Now that we understand the significance of human feedback, let’s explore some techniques used for model alignment.

Reward Modeling

Reward modeling is a technique used alongside reinforcement learning: a separate model is trained to predict the reward a human would give to different actions. The AI system then uses these predicted rewards, instead of asking a human every time, to learn a good policy. Imagine teaching a child to play chess. You would applaud when they make a good move and express disappointment when they make a bad one. Over time, the child builds an internal sense of which moves would earn applause and can practice against that sense even when you are not watching. That internal sense plays the role of the reward model! 🎲
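Here is a rough sketch of the idea, assuming each move can be described by two made-up features and that a human has rated a handful of moves between -1 and +1. The features, the ratings, and the linear model are all illustrative assumptions; real reward models are usually neural networks.

```python
# Hypothetical data: (material_gained, king_safety) features with a human rating.
RATED_MOVES = [
    ((1.0, 0.5), 1.0),
    ((0.5, 1.0), 0.8),
    ((0.0, 0.0), 0.0),
    ((-1.0, 0.2), -0.7),
    ((0.2, -1.0), -0.6),
]

def predict(weights, features):
    """Predicted human rating: a weighted sum of the move's features."""
    return sum(w * x for w, x in zip(weights, features))

def fit_reward_model(data, lr=0.1, epochs=500):
    """Fit the weights by stochastic gradient descent on squared error."""
    weights = [0.0, 0.0]
    for _ in range(epochs):
        for features, rating in data:
            error = predict(weights, features) - rating
            weights = [w - lr * error * x for w, x in zip(weights, features)]
    return weights

weights = fit_reward_model(RATED_MOVES)

# The learned model can now score moves no human has rated yet.
candidates = {"capture_pawn": (0.8, 0.3), "expose_king": (0.3, -0.9)}
best = max(candidates, key=lambda name: predict(weights, candidates[name]))
print(best)
```

Once the model is fitted, the human is out of the loop: any number of new moves can be scored automatically, which is what makes reward modeling scale.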

Inverse Reinforcement Learning

Inverse Reinforcement Learning (IRL) involves learning the reward function from observed behavior. Essentially, the AI observes a human performing a task and tries to infer the motivations behind their actions. It then uses this inferred reward to align its objectives with those of the human. Consider a scenario where an AI is observing a professional driver to learn how to drive. It will notice that the driver stops at red lights and at pedestrian crossings. By working out what would explain these observations, the AI infers what the driver values, such as safety and obeying traffic rules, and can align its behavior accordingly. 🚗
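The driving example can be sketched in a heavily simplified form. Full IRL reasons over whole sequences of actions; this toy version assumes single, independent decisions and uses a margin-based update to find reward weights under which the driver's choices look best. The three features and every name below are hypothetical.

```python
# Each observation lists the options the driver had and the index of the one chosen.
# Hypothetical features per option: (progress, ran_red_light, endangered_pedestrian)
STOP = (0.0, 0.0, 0.0)
OBSERVATIONS = [
    ([STOP, (1.0, 1.0, 0.0)], 0),  # red light: the driver stops
    ([STOP, (1.0, 0.0, 1.0)], 0),  # pedestrian crossing: the driver stops
    ([STOP, (1.0, 0.0, 0.0)], 1),  # open road: the driver keeps going
]

def score(weights, features):
    return sum(w * x for w, x in zip(weights, features))

def infer_reward_weights(observations, epochs=50, lr=0.5, margin=1.0):
    """Find weights that make each chosen option beat its alternatives by a margin."""
    weights = [0.0, 0.0, 0.0]
    for _ in range(epochs):
        for options, chosen in observations:
            for i, alternative in enumerate(options):
                if i == chosen:
                    continue
                gap = score(weights, options[chosen]) - score(weights, alternative)
                if gap < margin:
                    # Shift the reward toward the chosen option's features.
                    weights = [w + lr * (c - a)
                               for w, c, a in zip(weights, options[chosen], alternative)]
    return weights

def choose(weights, options):
    """Pick the option with the highest inferred reward."""
    return max(range(len(options)), key=lambda i: score(weights, options[i]))

weights = infer_reward_weights(OBSERVATIONS)
# A situation the driver never demonstrated: a red light and a pedestrian at once.
unseen = [STOP, (1.0, 1.0, 1.0)]
print(weights, choose(weights, unseen))
```

Notice that nobody told the AI that running a red light is bad. It ends up with a negative weight on that feature purely because that is what best explains the driver's behavior.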

Preference Learning

Preference learning is another technique, where the AI learns from pairwise comparisons provided by humans. A person is shown two different outputs and picks the one that better matches what they want, and the AI learns from many such choices. Suppose you’re training an AI to write blog posts. You look at two versions of an article, one well-written and another poorly written, and mark the one you prefer. From a collection of these comparisons, the AI can learn what constitutes a good blog post and try to replicate it in the future. 📝
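One common way to turn pairwise choices into scores is the Bradley-Terry model, where the probability that one item is preferred over another depends on the difference between their scores. The sketch below fits such scores with plain gradient ascent; the draft names and the comparison data are invented for illustration.

```python
import math

# Hypothetical human comparisons: (preferred_draft, rejected_draft).
COMPARISONS = [
    ("clear", "rambling"),
    ("clear", "typo_ridden"),
    ("rambling", "typo_ridden"),
    ("clear", "rambling"),
]

def fit_scores(comparisons, lr=0.1, epochs=200):
    """Fit one score per item so that preferred items get higher scores."""
    items = {name for pair in comparisons for name in pair}
    scores = {name: 0.0 for name in items}
    for _ in range(epochs):
        for winner, loser in comparisons:
            # Bradley-Terry: P(winner preferred) = sigmoid(score_w - score_l)
            p = 1.0 / (1.0 + math.exp(-(scores[winner] - scores[loser])))
            # Gradient ascent on the log-likelihood of the human's choice.
            scores[winner] += lr * (1.0 - p)
            scores[loser] -= lr * (1.0 - p)
    return scores

scores = fit_scores(COMPARISONS)
ranking = sorted(scores, key=scores.get, reverse=True)
print(ranking)
```

Comparisons are often easier for people to give than absolute ratings: it is hard to say whether a draft deserves a 6 or a 7, but easy to say which of two drafts reads better.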

🚀 Reinforcement Learning from Human Feedback (RLHF)

RLHF is a technique that combines the principles of reinforcement learning and human feedback. The AI is trained to maximize the cumulative reward it receives for the actions it takes. These rewards come from human feedback, typically through a reward model trained on human ratings or comparisons, which steers the AI toward human values. For instance, consider training an AI to play a video game. Instead of relying only on the in-game score, human reviewers watch short clips of its play and indicate which ones look better. The AI is then rewarded for behavior people prefer, such as defeating enemies, collecting power-ups, and completing levels, and penalized for losing lives or failing levels. Over time, the AI learns to play the game the way people want by maximizing that reward. 🎮
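As a toy illustration of that loop, the sketch below trains a tiny softmax policy with a REINFORCE-style policy gradient. It assumes a reward model has already been learned from human feedback and stands in for it with a hard-coded table, so the three response styles and their scores are hypothetical. Production RLHF for language models typically uses more elaborate algorithms, such as PPO with a penalty for drifting from the original model.

```python
import math
import random

# Stand-in for a reward model already trained on human feedback (made-up scores).
REWARD_MODEL = {"helpful": 1.0, "vague": 0.2, "rude": -1.0}
ACTIONS = list(REWARD_MODEL)

def softmax(logits):
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def train_policy(steps=2000, lr=0.1, seed=0):
    rng = random.Random(seed)
    logits = [0.0] * len(ACTIONS)
    baseline = 0.0  # running average reward, used to reduce variance
    for _ in range(steps):
        probs = softmax(logits)
        i = rng.choices(range(len(ACTIONS)), weights=probs)[0]
        reward = REWARD_MODEL[ACTIONS[i]]
        advantage = reward - baseline
        baseline += 0.05 * (reward - baseline)
        # REINFORCE: d log pi(i) / d logit_j = (1 if j == i else 0) - probs[j]
        for j in range(len(logits)):
            grad = (1.0 if j == i else 0.0) - probs[j]
            logits[j] += lr * advantage * grad
    return softmax(logits)

probs = train_policy()
best = ACTIONS[probs.index(max(probs))]
print(best, [round(p, 3) for p in probs])
```

The policy starts out choosing all three styles equally often and ends up strongly favoring the one the reward model scores highest, without ever being shown a "correct" answer directly.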

🧭 Conclusion

Model alignment using human feedback is an exciting and fast-moving field. It’s critical to making AI behave in a way that aligns with human values and goals. By using techniques like reward modeling, inverse reinforcement learning, preference learning, and reinforcement learning from human feedback, we can guide AI systems to better understand and adhere to our objectives. Moreover, human feedback is not a one-time thing; it’s an ongoing process. As the AI system evolves and learns, continued feedback helps it fine-tune its understanding and performance. So, let’s embrace this powerful tool and continue to shape the future of AI, one piece of feedback at a time! 💡

Remember, AI is only as good as the information it is fed. So, let’s feed it well! Happy training! 🚀
