📌 Let’s explore the topic in depth and see what insights we can uncover.

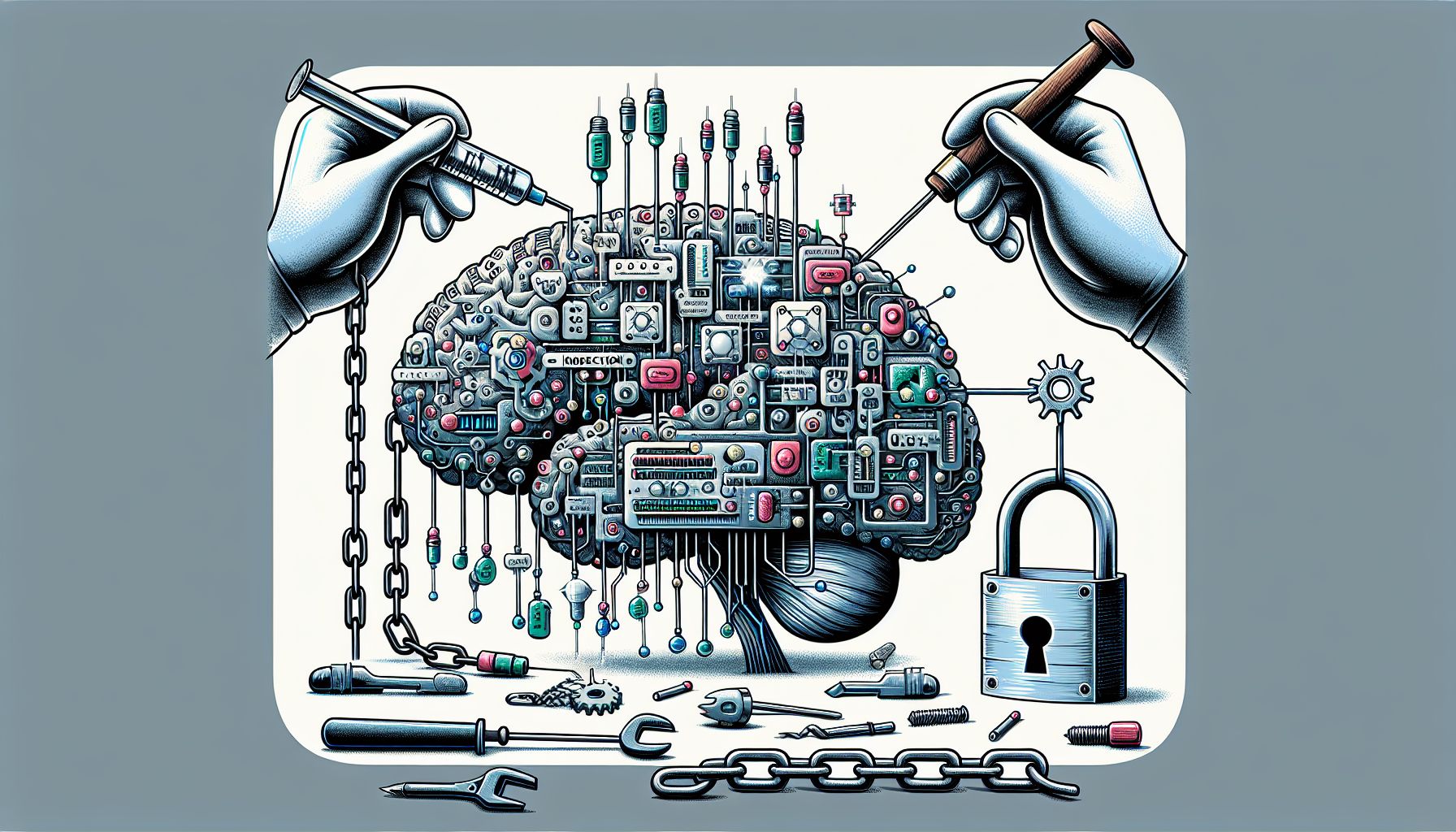

⚡ “Imagine being able to unleash your AI’s full potential, breaking its restrictive chains - Welcome to the revolution of Prompt Injection and Jailbreaking in AI systems!”

In today’s rapidly advancing digital age, Artificial Intelligence (AI) is a fascinating area that continues to push the boundaries of what machines can achieve. Whether you’re an AI enthusiast, a seasoned programmer, or a tech-savvy individual looking to broaden your knowledge, understanding prompt injection and jailbreaking in AI is essential. This blog post aims to unravel these complex concepts in a fun, engaging, and easy-to-understand manner. Ready to embark on this exciting journey? Buckle up, and let’s dive in!

🎯 What is Prompt Injection in AI?

Unlocking the Enigma of AI Jailbreaking

Imagine being a puppet master, pulling the strings to make your puppet dance to your tune. This scenario is somewhat similar to how prompt injection works in AI. Prompt injection is like sending a set of instructions to an AI model, guiding it on what to say or do next. Prompt injection is a term used in the field of AI to refer to the act of giving instructions or cues to an AI model to guide its responses. It’s like nudging the AI in a particular direction to get specific results. These prompts can be text-based, audio, or visual cues, with text-based prompts being the most common. Here’s a simple example: let’s say you’re using an AI model that generates text. If you feed it the prompt “Once upon a time,” the AI might continue with “there was a king in a far-off land.” The “Once upon a time” is your prompt, and the AI takes it from there based on its programming and dataset. Now that we have a basic understanding of prompt injection, let’s move on to another intriguing concept: jailbreaking in AI.

🗝️ Jailbreaking in AI: Breaking the Chains

The term “jailbreaking” might conjure up images of a cunning prisoner escaping from a high-security prison. While it’s not exactly the same in AI, the concept is somewhat similar. Jailbreaking in AI refers to the act of modifying an AI system to remove restrictions imposed by the manufacturer or developer. In the world of AI, jailbreaking is all about gaining access to the underlying system or the ‘root’ of the AI model. It involves bypassing the safety measures and restrictions built into the AI system by the developers. The objective might be to enhance the system’s capabilities, modify its functions, or even to exploit potential vulnerabilities. It’s like being handed a locked treasure chest and then picking the lock to access the treasures (or dangers) hidden inside. Bear in mind, though, that jailbreaking an AI system is a double-edged sword. While it can unlock more capabilities, it can also open the door to potential misuse or security threats.

🎭 The Role of Prompt Injection and Jailbreaking in AI

Both prompt injection and jailbreaking play significant roles in shaping AI technology. Let’s break it down:

Prompt Injection

🔍 Interestingly, a vital tool in guiding AI models and making them more useful and interactive. It helps in generating desired responses and making the AI system more user-friendly. Moreover, it also plays a role in testing and fine-tuning the AI models.

Jailbreaking

While controversial, jailbreaking in AI can lead to innovation and advancement. It can help discover hidden potentials of the AI system and pave the way for enhancements and modifications. However, it also poses significant risks, as it can lead to exploitation and misuse of AI technology.

🛡️ The Ethical and Security Implications

As with any technology, prompt injection and jailbreaking in AI come with their ethical and security implications. Used responsibly, they can be powerful tools for innovation and improvement. However, misuse can lead to serious consequences. *Prompt injection* can be misused to manipulate AI models to generate harmful or misleading content. It’s crucial to have checks and balances in place to prevent such misuse. — let’s dive into it.

Jailbreaking can expose the AI system to potential security threats and increase the risk of exploitation. It’s a bit like opening Pandora’s Box

once opened, it’s nearly impossible to put everything back in. Therefore, while these techniques can be beneficial for AI development, they must be used responsibly and ethically, with a thorough understanding of the potential risks involved.

🧭 Conclusion

Prompt injection and jailbreaking in AI are two fascinating aspects of AI technology that offer exciting possibilities and potential pitfalls. They’re like the yin and yang of AI advancement: prompt injection gently nudges the AI in the right direction, while jailbreaking blows the doors wide open for more radical changes. Whether you’re an AI enthusiast, a developer, or simply a curious soul, understanding these concepts can give you a deeper insight into how AI works and evolves. Remember, with great power comes great responsibility. As we continue to explore and push the boundaries of AI, it’s essential to do so ethically and responsibly, keeping in mind the potential consequences of our actions. The world of AI is a thrilling roller-coaster ride, full of twists and turns. So hold on tight, keep learning, and enjoy the ride! 🎢

🌐 Thanks for reading — more tech trends coming soon!