
⚡ “Imagine a world where the photos you see are not real, and the information you receive is a figment of a machine’s imagination. Welcome to the chilling reality of AI hallucination and misinformation - and you’re living in the epicenter.”

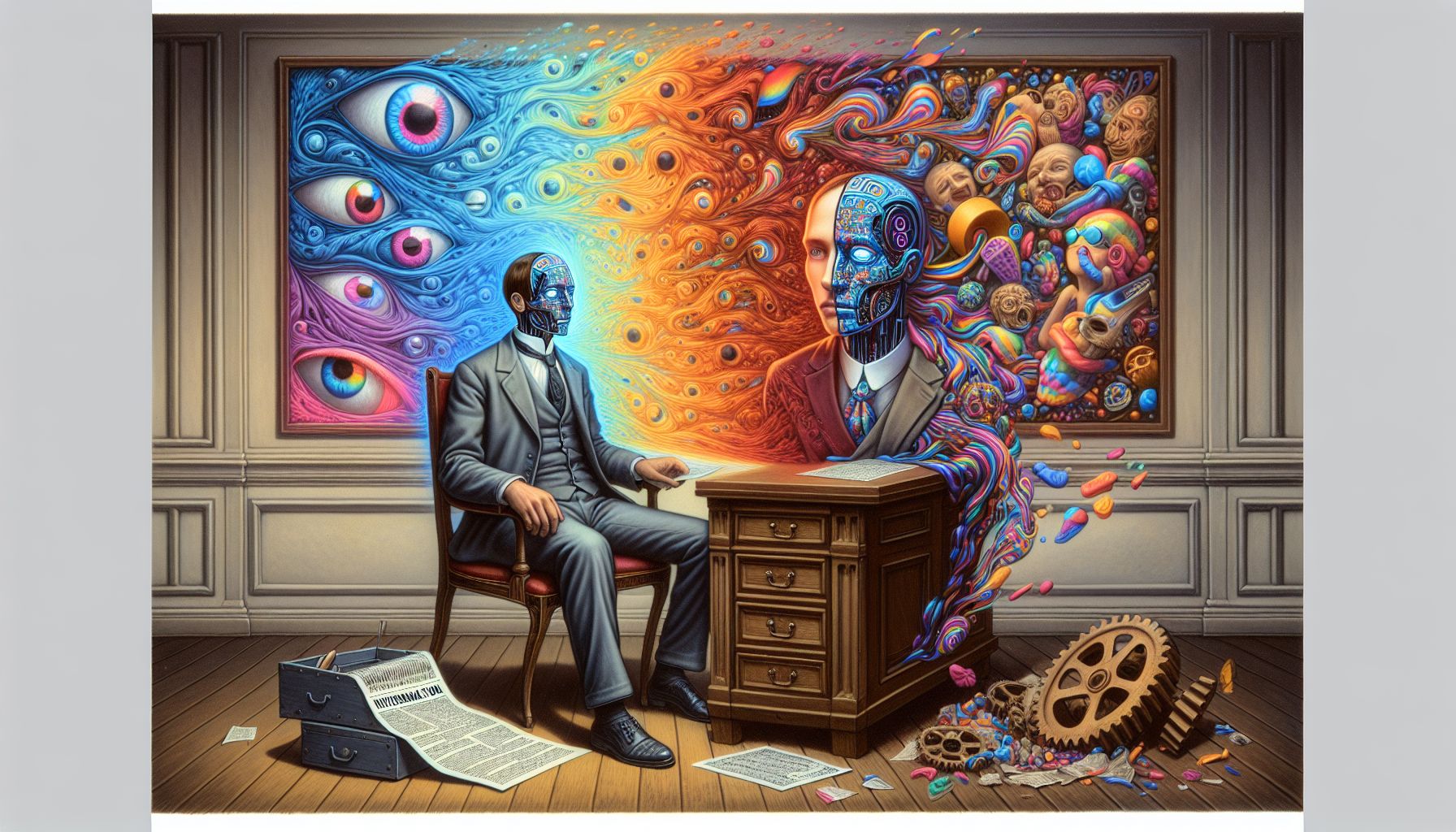

Artificial Intelligence (AI) is advancing quickly and reshaping the future. From self-driving cars to personalized shopping experiences, it is changing the way we live, work, and play. Amid all the buzz, though, there is a creeping concern that needs our immediate attention: the risks of AI hallucination and misinformation.

AI hallucination refers to instances when an AI system misreads its input or generates content that is not grounded in it, producing output that looks confident and plausible but is wrong. Misinformation, on the other hand, is the spread of false or inaccurate information, which AI can inadvertently contribute to. This post aims to demystify both issues, highlighting the potential risks and suggesting how we can navigate this digital landscape safely.

🎭 The Illusion of AI Hallucination

AI's Mirage: Distinguishing Fact from Fabrication

Imagine a child who mistakes a silhouette in the dark for a scary monster. That’s a simple analogy for AI hallucination. AI systems can sometimes see things that aren’t there, reading patterns into data that the data does not actually support. For instance, an AI trained to identify animals might confidently classify a banana as a penguin because the image shares a few surface features, such as shape or color, with the penguins it saw during training.

The risks are manifold: misdiagnosis in healthcare, misidentification in security systems, and even disastrous mistakes in autonomous driving.

But how does this happen? One contributing factor is overfitting, where the AI fits its training data so closely, noise and quirks included, that it fails to generalize to new, unseen data.
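To make overfitting a little more concrete, here is a minimal sketch using a toy nearest-neighbor classifier. Everything in it is an assumption made for illustration: the one-dimensional data, the two deliberately mislabeled training points, and the helper names `knn_predict`, `accuracy`, and `true_label`. It is not how any particular production system works; it only shows a model that memorizes its training data (k=1) next to one that smooths over noise (k=5).

```python
from collections import Counter


def knn_predict(train, x, k):
    """Majority vote among the k training points closest to x."""
    nearest = sorted(train, key=lambda point: abs(point[0] - x))[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]


def accuracy(train, data, k):
    """Fraction of points in `data` whose label the k-NN model reproduces."""
    hits = sum(knn_predict(train, x, k) == label for x, label in data)
    return hits / len(data)


def true_label(x):
    """The real rule behind the toy data: class 1 from 0.5 upward."""
    return 1 if x >= 0.5 else 0


# Twenty evenly spaced training points; two get a wrong label on purpose
# to stand in for noisy training data.
train = [(i / 20, true_label(i / 20)) for i in range(20)]
for noisy_index in (3, 16):
    x, label = train[noisy_index]
    train[noisy_index] = (x, 1 - label)

# Unseen points, slightly shifted from the training points, with clean labels.
held_out = [(i / 20 + 0.01, true_label(i / 20 + 0.01)) for i in range(20)]

for k in (1, 5):
    print(f"k={k}: train accuracy {accuracy(train, train, k):.2f}, "
          f"held-out accuracy {accuracy(train, held_out, k):.2f}")
```

With k=1 the model scores 1.00 on its own training data, noise included, but only 0.90 on the unseen points, because it faithfully repeats the two bad labels. With k=5 it scores 0.90 on the noisy training labels and 1.00 on the unseen points. A perfect training score, in other words, says little about how the system behaves on new data.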

📰 The Avalanche of AI-Driven Misinformation

The pen is mightier than the sword, and in the digital age, we can say that the algorithm is mightier than the pen. AI-driven misinformation can be likened to an avalanche, starting with a small snowball of false information that rapidly grows in size as it rolls down the digital mountain, accumulating more false data and causing widespread damage.

AI tools can unintentionally amplify misinformation by promoting content based on popularity rather than accuracy. For instance, an AI recommendation system might propagate a fake news article simply because it’s being widely read and shared, not because it’s true. Misinformation can also be spread intentionally using AI, as with deepfakes, where AI is used to create hyper-realistic but entirely fictional images or videos.

The repercussions of AI-driven misinformation are severe. It can lead to political instability, societal discord, and even public health crises. And like an avalanche, once it is moving it is hard to stop, and the damage can be irreparable.
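The popularity-versus-accuracy problem can be sketched in a few lines. This is a deliberately simplified illustration, not how any real recommendation system is built: the `Article` class, the `credibility` score (imagined here as a 0 to 1 value from some upstream fact-checking step), and both ranking functions are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Article:
    title: str
    shares: int
    credibility: float  # hypothetical 0..1 score from a fact-checking step


def rank_by_popularity(articles):
    """Rank purely by engagement: whatever is shared most comes first."""
    return sorted(articles, key=lambda a: a.shares, reverse=True)


def rank_with_credibility(articles):
    """Discount engagement by credibility before ranking."""
    return sorted(articles, key=lambda a: a.shares * a.credibility, reverse=True)


articles = [
    Article("Miracle cure found", shares=9000, credibility=0.1),
    Article("Local council budget", shares=1200, credibility=0.9),
    Article("Vaccine trial results", shares=3000, credibility=0.95),
]

print([a.title for a in rank_by_popularity(articles)])
print([a.title for a in rank_with_credibility(articles)])
```

Ranked by shares alone, the dubious "Miracle cure found" story lands at the top. Once shares are weighted by the assumed credibility score, it drops to last place. The hard part in practice is producing a trustworthy credibility signal in the first place, which this sketch simply takes as given.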

🛡️ Shielding Against AI Hallucination and Misinformation

Now that we have unmasked the phantom of AI hallucination and the avalanche of misinformation, how do we protect ourselves? Here are some useful strategies:

**Data Diversity:** To prevent AI hallucination, we must ensure that the AI is trained on a diverse and representative dataset. This will help the AI to better generalize and make accurate predictions.

**Algorithmic Transparency:** Understanding how an AI makes decisions can help in identifying and rectifying hallucinations. This requires a move towards more transparent AI systems.

**Fact-checking Mechanisms:** To combat misinformation, we can employ AI-powered fact-checking systems that can verify the authenticity of information before it’s widely disseminated.

**Education and Awareness:** Users need to be educated about the risks of AI hallucination and misinformation. This includes understanding how to critically evaluate AI outputs and fact-check information.
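As one small, concrete take on the data diversity point, here is a sketch that flags classes making up too little of a training set. The function name `underrepresented`, the 10% threshold, and the example labels are all assumptions chosen for illustration. Real representativeness checks look at far more than label counts.

```python
from collections import Counter


def underrepresented(labels, min_share=0.10):
    """Return each label whose share of the dataset falls below min_share."""
    counts = Counter(labels)
    total = len(labels)
    return {
        label: count / total
        for label, count in counts.items()
        if count / total < min_share
    }


# Hypothetical label column from an animal-image training set.
labels = ["cat"] * 60 + ["dog"] * 35 + ["penguin"] * 5

print(underrepresented(labels))  # {'penguin': 0.05}
```

Here penguins are only 5% of the examples, so a model trained on this set has seen very few of them and is more likely to get them wrong. A check like this will not prevent hallucination on its own, but it is a cheap early warning before training starts.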

🌐 Regulatory Frameworks and Ethical AI

Beyond individual efforts, establishing strong regulatory frameworks is a crucial step in mitigating the risks of AI hallucination and misinformation. Policymakers need to enforce regulations that mandate transparency in AI systems and penalize the propagation of misinformation. Moreover, we need to promote the development and use of ethical AI. AI should be designed with a set of ethical principles in mind, ensuring that it respects human rights, promotes fairness, and prevents harm. The journey towards ethical AI is a shared responsibility involving governments, tech companies, and users alike.

🧭 Conclusion

In the grand theater of AI, hallucination and misinformation play the villains. However, they are not invincible. They can be tamed with the right strategies, policies, and a collective commitment to ethical AI. As we continue to embrace the transformative power of AI, we also need to be vigilant about these risks. After all, in a world increasingly driven by AI, ensuring the reliability and credibility of our AI systems is not just desirable but absolutely essential. Remember, AI is a tool, and like any tool, its effectiveness depends on how we use it. Let’s use it wisely, responsibly, and ethically, steering clear of the phantom of AI hallucination and the avalanche of misinformation.
