
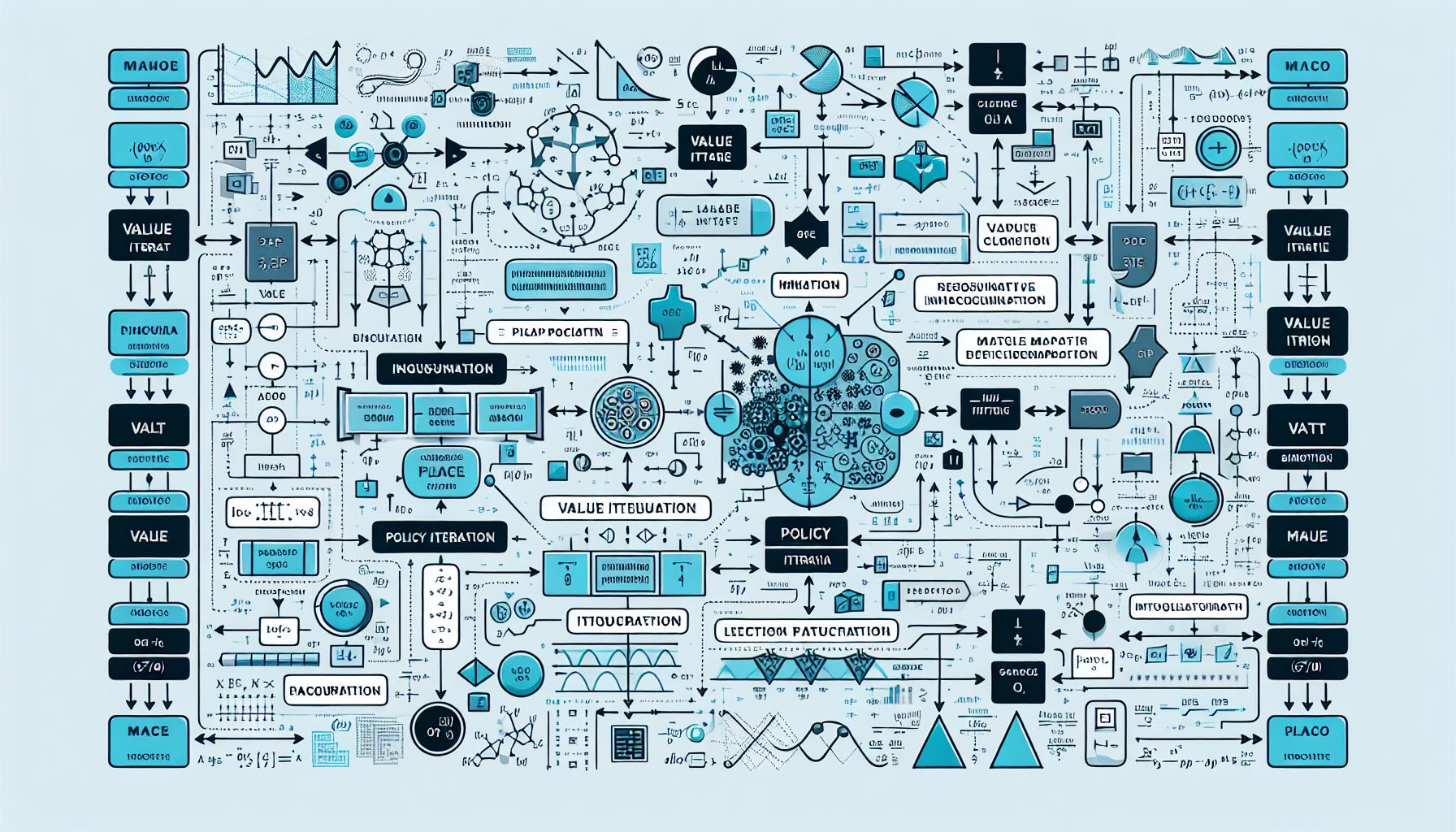

⚡ “Juggling between the efficiency of Value Iteration and the speed of Policy Iteration in Markov Decision Processes (MDPs)? Let’s break down these algorithms and help you become the efficiency guru of your AI model.”

Hello, fellow data enthusiasts! Today, we’re diving into the fascinating world of Markov Decision Processes (MDPs) and two crucial algorithms that help solve them – Value Iteration and Policy Iteration. Let’s take a journey together to demystify these concepts and understand how they work. Imagine you’re in a huge maze with various paths leading to the exit. The twist? Some paths are more rewarding than others. Now, how do you decide which path to take? Sure, you could rely on trial-and-error, but wouldn’t it be more helpful to have a map or a guide? In the realm of reinforcement learning, Value Iteration and Policy Iteration are those guiding ‘maps’. They help us navigate through the maze of MDPs to find the most rewarding path.

🎯 What are Markov Decision Processes (MDPs)?

"Decoding Complexities: MDP's Iterative Algorithms Unleashed"

Before we jump into the heart of the matter, it’s important to understand what a Markov Decision Process is. In the context of reinforcement learning, MDPs provide a mathematical framework for modeling decision making in situations where outcomes are partly random and partly under the control of a decision maker.

MDPs are widely used in optimization, operations research, reinforcement learning, and many other fields. They are characterized by:

* A finite set of states, S

* A finite set of actions, A

* A real-valued reward function, R(s, a, s'), the reward received when action a taken in state s leads to state s'

* A transition function, P(s' | s, a), the probability of landing in state s' when action a is taken in state s

* A discount factor, γ, between 0 and 1, that determines the present value of future rewards.
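To make the five ingredients concrete, here is a minimal sketch of how a small MDP could be written down as plain Python tables. The two-state, two-action example, the names `P`, `R`, `GAMMA`, `check_mdp` and `q_value` are all invented for illustration, and the check assumes γ is strictly below 1 so that infinite sums of rewards stay finite.

```python
# Toy MDP, invented for illustration: 2 states, 2 actions (0 = stay, 1 = move).
# P[s][a][s2] is the probability of landing in s2 after taking a in s.
P = [
    [[1.0, 0.0], [0.2, 0.8]],   # from state 0: stay, move
    [[0.0, 1.0], [1.0, 0.0]],   # from state 1: stay, move
]
# R[s][a][s2] is the reward received on that transition.
R = [
    [[0.0, 0.0], [0.0, 1.0]],
    [[0.0, 2.0], [0.0, 0.0]],
]
GAMMA = 0.9  # discount factor


def check_mdp(P, R, gamma):
    """Raise ValueError if the tables do not describe a valid finite MDP."""
    n_states = len(P)
    for s in range(n_states):
        if len(P[s]) != len(P[0]) or len(R[s]) != len(P[s]):
            raise ValueError(f"state {s}: inconsistent number of actions")
        for a in range(len(P[s])):
            if len(P[s][a]) != n_states or len(R[s][a]) != n_states:
                raise ValueError(f"state {s}, action {a}: wrong row length")
            if any(p < 0 for p in P[s][a]) or abs(sum(P[s][a]) - 1.0) > 1e-9:
                raise ValueError(f"state {s}, action {a}: not a distribution")
    if not 0.0 <= gamma < 1.0:
        raise ValueError("gamma must be in [0, 1) for this sketch")


def q_value(P, R, gamma, V, s, a):
    """Expected reward of taking a in s, plus the discounted value V of where we land."""
    return sum(p * (r + gamma * v) for p, r, v in zip(P[s][a], R[s][a], V))


check_mdp(P, R, GAMMA)
print(q_value(P, R, GAMMA, [0.0, 0.0], 0, 1))  # 0.8
```

With all state values set to zero, moving out of state 0 is worth 0.8: an 80% chance of collecting the reward of 1 and nothing else.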

🔍 Value Iteration: Finding the Best Path

Now, let’s wade into our first algorithm: Value Iteration. The goal here is to find the optimal policy, which is a mapping from each state to the action the agent should take there so as to maximize the expected cumulative discounted reward. The Value Iteration algorithm works by iteratively updating the value of each state based on the immediate rewards and the current value estimates of the possible next states. It continues until the largest change in any state’s value falls below a chosen threshold (indicating that the estimates are close to the optimal value function). The optimal policy is then read off by picking, in each state, the action that leads to the maximum expected reward, considering both immediate and future rewards. It’s like choosing the path in the maze that leads to the highest pile of gold, considering both the gold on the path itself and the gold that can be found further along.

Here’s how the algorithm works, expressed in pseudocode:

    Initialize V(s) = 0 for all states s
    Repeat
        For each state s:
            V(s) = max_a ∑s' P(s' | s, a) * [R(s, a, s') + γ * V(s')]
    Until the largest change in V(s) is below a small threshold
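The pseudocode above could be turned into runnable Python roughly as follows. This is only a sketch: the function name `value_iteration`, the threshold `theta`, the table layout `P[s][a][s2]` / `R[s][a][s2]` and the two-state toy MDP are assumptions made up for this example.

```python
def value_iteration(P, R, gamma, theta=1e-8):
    """Return (V, policy) for tables P[s][a][s2] and R[s][a][s2]."""
    n_states = len(P)
    V = [0.0] * n_states

    def q(s, a, V):
        # Expected immediate reward plus discounted value of the next state.
        return sum(p * (r + gamma * v) for p, r, v in zip(P[s][a], R[s][a], V))

    while True:
        # One synchronous sweep: every state is backed up from the old V.
        new_V = [max(q(s, a, V) for a in range(len(P[s]))) for s in range(n_states)]
        delta = max(abs(n - o) for n, o in zip(new_V, V))
        V = new_V
        if delta < theta:
            break

    # Read off a greedy policy from the (approximately) converged values.
    policy = [max(range(len(P[s])), key=lambda a: q(s, a, V)) for s in range(n_states)]
    return V, policy


# Toy MDP, invented for illustration: actions are 0 = stay, 1 = move.
P = [[[1.0, 0.0], [0.2, 0.8]],
     [[0.0, 1.0], [1.0, 0.0]]]
R = [[[0.0, 0.0], [0.0, 1.0]],
     [[0.0, 2.0], [0.0, 0.0]]]

V, policy = value_iteration(P, R, gamma=0.9)
print(policy)  # [1, 0]
```

In this toy maze the best plan is to move out of state 0 and then stay in state 1, where a reward of 2 is collected on every step.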

💡 Policy Iteration: Refining the Route

Policy Iteration, on the other hand, is a bit more refined. It begins with an arbitrary (for example, random) policy, then alternates between evaluating this policy and improving it, until the policy stops changing, at which point it is optimal. The Policy Iteration algorithm might seem more complicated than Value Iteration, but it often converges in fewer iterations. Think of it as fine-tuning your route through the maze after your first run. You know which paths lead to rewards and which ones lead to dead ends, so you can make more informed decisions.

The pseudocode for the Policy Iteration algorithm is as follows:

    Initialize an arbitrary policy π and V(s) = 0 for all states s
    Repeat
        Policy Evaluation:
            Repeat
                For each state s:
                    V(s) = ∑s' P(s' | s, π(s)) * [R(s, π(s), s') + γ * V(s')]
            Until the largest change in V(s) is below a small threshold
        Policy Improvement:
            For each state s:
                π(s) = argmax_a ∑s' P(s' | s, a) * [R(s, a, s') + γ * V(s')]
    Until policy π does not change
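Here is one way the evaluate-then-improve loop could look in Python. Treat it as a sketch under assumptions: the name `policy_iteration`, the parameters `theta` and `tol`, the table layout `P[s][a][s2]` / `R[s][a][s2]` and the toy MDP are all made up for this example, and the evaluation step uses repeated sweeps rather than solving the linear system exactly.

```python
def policy_iteration(P, R, gamma, theta=1e-8, tol=1e-9):
    """Return (V, policy) for tables P[s][a][s2] and R[s][a][s2]."""
    n_states = len(P)
    policy = [0] * n_states   # arbitrary starting policy: always action 0
    V = [0.0] * n_states

    def q(s, a, V):
        # Expected immediate reward plus discounted value of the next state.
        return sum(p * (r + gamma * v) for p, r, v in zip(P[s][a], R[s][a], V))

    while True:
        # Policy evaluation: sweep until V settles for the current policy.
        while True:
            new_V = [q(s, policy[s], V) for s in range(n_states)]
            delta = max(abs(n - o) for n, o in zip(new_V, V))
            V = new_V
            if delta < theta:
                break

        # Policy improvement: act greedily with respect to V.
        stable = True
        for s in range(n_states):
            best = max(range(len(P[s])), key=lambda a: q(s, a, V))
            # Switch only on a clear improvement so ties do not cause flip-flopping.
            if q(s, best, V) > q(s, policy[s], V) + tol:
                policy[s] = best
                stable = False
        if stable:
            return V, policy


# Toy MDP, invented for illustration: actions are 0 = stay, 1 = move.
P = [[[1.0, 0.0], [0.2, 0.8]],
     [[0.0, 1.0], [1.0, 0.0]]]
R = [[[0.0, 0.0], [0.0, 1.0]],
     [[0.0, 2.0], [0.0, 0.0]]]

V, policy = policy_iteration(P, R, gamma=0.9)
print(policy)  # [1, 0]
```

Starting from "always stay", a single improvement step is enough to discover that moving out of state 0 pays off; the second round just confirms that nothing changes.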

🔄 Value Iteration vs Policy Iteration: Which to Choose?

There’s no hard and fast rule about which algorithm to use. Both Value Iteration and Policy Iteration have their own strengths and weaknesses. Value Iteration is straightforward and easier to understand, and each of its iterations is a single, relatively cheap sweep over the states, but it may need many sweeps to converge, especially when the discount factor γ is close to 1. Policy Iteration, on the other hand, typically converges in fewer iterations. However, each of its iterations includes a full policy evaluation, which is itself a repeated computation over all states, so a single iteration costs more and the algorithm might seem more complex at first glance. The choice between Value Iteration and Policy Iteration often comes down to the specific application and the computational resources available.
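If you want to see the trade-off for yourself, a rough experiment is to count the work each algorithm does on the same problem. The sketch below does that on a made-up two-state MDP; the helper names (`q`, `backup`, `count_value_iteration`, `count_policy_iteration`) and the threshold `theta` are assumptions for illustration, and the counts will differ from one MDP, discount factor and threshold to another.

```python
def q(P, R, gamma, V, s, a):
    """Expected immediate reward plus discounted value of the next state."""
    return sum(p * (r + gamma * v) for p, r, v in zip(P[s][a], R[s][a], V))


def backup(P, R, gamma, V, actions_for):
    """One sweep over all states; actions_for(s) lists the actions to maximize over."""
    new_V = [max(q(P, R, gamma, V, s, a) for a in actions_for(s))
             for s in range(len(P))]
    delta = max(abs(n - o) for n, o in zip(new_V, V))
    return new_V, delta


def count_value_iteration(P, R, gamma, theta=1e-8):
    """Number of sweeps Value Iteration needs before the change drops below theta."""
    V, sweeps = [0.0] * len(P), 0
    while True:
        V, delta = backup(P, R, gamma, V, lambda s: range(len(P[s])))
        sweeps += 1
        if delta < theta:
            return sweeps


def count_policy_iteration(P, R, gamma, theta=1e-8):
    """Return (outer rounds, total evaluation sweeps) for Policy Iteration."""
    V, policy = [0.0] * len(P), [0] * len(P)
    rounds = sweeps = 0
    while True:
        rounds += 1
        while True:  # policy evaluation: only the policy's action is considered
            V, delta = backup(P, R, gamma, V, lambda s: [policy[s]])
            sweeps += 1
            if delta < theta:
                break
        greedy = [max(range(len(P[s])), key=lambda a: q(P, R, gamma, V, s, a))
                  for s in range(len(P))]
        if greedy == policy:
            return rounds, sweeps
        policy = greedy


# Toy MDP, invented for illustration: actions are 0 = stay, 1 = move.
P = [[[1.0, 0.0], [0.2, 0.8]],
     [[0.0, 1.0], [1.0, 0.0]]]
R = [[[0.0, 0.0], [0.0, 1.0]],
     [[0.0, 2.0], [0.0, 0.0]]]

vi_sweeps = count_value_iteration(P, R, 0.9)
pi_rounds, pi_sweeps = count_policy_iteration(P, R, 0.9)
print("Value Iteration sweeps:", vi_sweeps)
print("Policy Iteration rounds:", pi_rounds, "evaluation sweeps:", pi_sweeps)
```

On this tiny example Policy Iteration needs only a couple of outer rounds, but the evaluation sweeps hidden inside those rounds are where most of its work goes, which is exactly the trade-off described above.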

🧭 Conclusion

Navigating through the labyrinth of Markov Decision Processes need not be intimidating. With the Value Iteration and Policy Iteration algorithms in your toolkit, you can confidently find your way to the most rewarding path. Remember, Value Iteration is like a brave explorer, repeatedly re-estimating how much every spot in the maze is worth, counting both immediate and future rewards, while Policy Iteration is like a wise sage, refining its strategy after each journey through the maze.

Armed with these concepts, you’re well on your way to mastering the maze of MDPs. Happy exploring! 🚀
