⚡ “Welcome to the world of artificial intelligence where abstract concepts are truly game-changers. Discover how policy, value function, and Q-function are the dynamic trio orchestrating the AI’s decision-making process!”

Hello, fellow data explorers! 🕵️‍♂️ Are you ready to embark on yet another exciting journey into the mind-bending world of machine learning? Today, we’re going to delve into three core ideas of reinforcement learning: the policy, the value function, and the Q-function. Reinforcement learning, a subfield of artificial intelligence, may seem a bit intimidating at first, with its unique nomenclature and concepts. But don’t fret! This guide is here to break it down for you in the simplest terms possible. From the basics of policies, through the intricacies of value functions, to the workings of the Q-function, we’ll cover it all. So put on your learning hats 🎩, because we’re about to dive headfirst into this topic!

🤖 What is Reinforcement Learning?

"Demystifying Complex Concepts in Simple Terms"

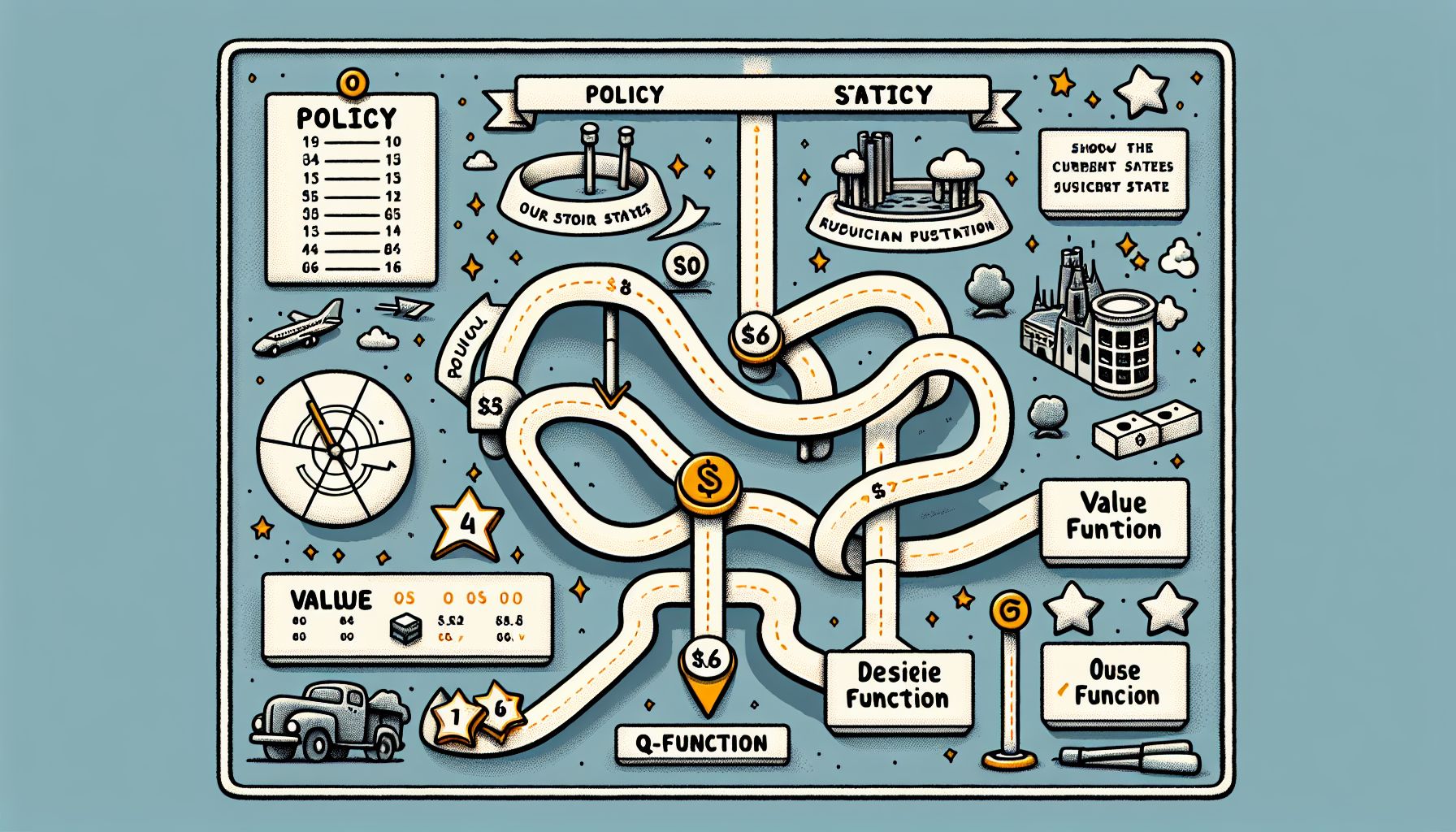

Before we plunge into the specifics, let’s first understand what reinforcement learning (RL) is. RL is a type of machine learning in which an agent learns to make decisions by taking actions in an environment to achieve a goal. The agent learns from the consequences of its actions rather than being told explicitly what to do. It’s like teaching a dog a new trick: you reward the dog when it performs the trick correctly, and over time the dog learns to repeat it to earn more rewards. Similarly, in RL, the agent gradually improves its behavior based on feedback (rewards or penalties). Three ideas sit at the heart of how an RL agent makes decisions: the policy, the value function, and the Q-function (which is itself a special kind of value function). Let’s get to know each one of them.
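To make the feedback loop concrete, here is a minimal Python sketch of the agent-environment interaction. The `Corridor` environment, its reward of +1 for reaching the goal, and the `run_episode` helper are hypothetical, made up for illustration; real environments have richer states and rewards, but the cycle of observing a state, acting, and receiving a reward is the same.

```python
import random


class Corridor:
    """Hypothetical toy environment: positions 0..length-1, goal at the right end.
    Actions: -1 (left) or +1 (right). Reward is +1 on reaching the goal, 0 otherwise."""

    def __init__(self, length=5):
        self.length = length
        self.pos = 0

    def reset(self):
        self.pos = 0
        return self.pos

    def step(self, action):
        # Move, but never walk off the left wall or past the goal.
        self.pos = min(max(self.pos + action, 0), self.length - 1)
        done = self.pos == self.length - 1
        reward = 1.0 if done else 0.0
        return self.pos, reward, done


def run_episode(env, choose_action, max_steps=100):
    """The agent-environment loop: observe a state, act, receive a reward, repeat."""
    state = env.reset()
    total_reward = 0.0
    for _ in range(max_steps):
        action = choose_action(state)           # the agent decides
        state, reward, done = env.step(action)  # the environment responds
        total_reward += reward                  # the feedback the agent learns from
        if done:
            break
    return total_reward


rng = random.Random(0)
random_agent = lambda state: rng.choice([-1, 1])  # an agent that has learned nothing yet
print(run_episode(Corridor(), random_agent))
```

Swapping `random_agent` for `lambda state: 1` (always move right) reaches the goal in four steps and collects a reward of 1.0; learning in RL is about discovering that kind of behavior from the rewards alone.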

📜 Policy in Reinforcement Learning

Picture this: you’re in a new city, and you have a map. Your aim is to reach a specific destination. The policy is essentially your guide or a set of rules you follow to reach your destination. It determines the agent’s behavior at a given time.

In the context of RL, a policy is a strategy that the agent employs to decide the next action based on the current state. It’s denoted as π(a|s), which means the probability of choosing an action a when in a state s.

There are two types of policies:

Deterministic Policy

In this case, the policy always outputs the same action for a given state, often written a = π(s). It’s like having a fixed route to your destination.

Stochastic Policy

Here, the policy outputs a probability distribution over actions, π(a|s), and the agent samples its action from that distribution. It’s like having multiple routes to your destination and picking one according to certain probabilities.
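The difference between the two kinds of policy can be sketched in a few lines of Python. The three states, the `left`/`right` actions, and the probabilities below are hypothetical values chosen for illustration: a deterministic policy is just a lookup from state to action, while a stochastic policy stores π(a|s) and samples from it.

```python
import random

ACTIONS = ["left", "right"]

# Deterministic policy: a plain mapping state -> action, written a = π(s).
deterministic_policy = {0: "right", 1: "right", 2: "left"}

# Stochastic policy: a probability distribution over actions for each state, π(a|s).
stochastic_policy = {
    0: {"left": 0.1, "right": 0.9},
    1: {"left": 0.5, "right": 0.5},
    2: {"left": 0.8, "right": 0.2},
}


def act_deterministic(state):
    return deterministic_policy[state]


def act_stochastic(state, rng):
    probs = stochastic_policy[state]
    # random.choices samples one action according to the given weights.
    return rng.choices(ACTIONS, weights=[probs[a] for a in ACTIONS], k=1)[0]


rng = random.Random(42)
print([act_deterministic(0) for _ in range(5)])    # the same action every time
print([act_stochastic(1, rng) for _ in range(5)])  # may differ from call to call
```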

💡 Value Function in Reinforcement Learning

Imagine you’re playing a game of chess. Each move you make can decide whether you win or lose. A value function helps you evaluate positions by predicting the expected outcome from each one. In RL, a value function estimates how good it is for the agent to be in a given state (or to take a given action in that state), measured by the reward it expects to collect from then on. More precisely, it is the expected return: the total, usually discounted, reward the agent expects to accumulate in the future, starting from that state or state-action pair and following a policy π. The two main types of value functions are:
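The “total amount of reward” is usually formalized as the discounted return, G_t = R_{t+1} + γR_{t+2} + γ²R_{t+3} + ..., where the discount factor γ (between 0 and 1) makes rewards further in the future count for less. A value is the expected value of this quantity, so one simple way to estimate it is to average the returns of many episodes that start from the same state (a Monte Carlo estimate). The Python sketch below assumes each episode is given as a plain list of rewards and uses γ = 0.9 as an illustrative default.

```python
def discounted_return(rewards, gamma=0.9):
    """G = r_0 + gamma*r_1 + gamma^2*r_2 + ..., where r_0 is the first reward received."""
    g = 0.0
    # Work backwards so each step reuses the return that follows it: G_t = r_t + gamma * G_{t+1}.
    for r in reversed(rewards):
        g = r + gamma * g
    return g


def mc_value_estimate(episodes, gamma=0.9):
    """Monte Carlo estimate of V(s): average return over episodes that all start in s."""
    returns = [discounted_return(ep, gamma) for ep in episodes]
    return sum(returns) / len(returns)


print(discounted_return([0, 0, 1]))                        # about 0.81 (= 0.9 ** 2)
print(mc_value_estimate([[0, 0, 1], [0, 1], [0, 0, 0]]))   # average of three sampled returns
```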

State-Value Function (V)

It estimates the expected return when starting from a particular state s and following policy π thereafter. It’s denoted as V(s), or V^π(s) to make the dependence on the policy explicit.

Action-Value Function (Q)

It estimates the expected return when starting from a particular state s, taking action a, and following policy π thereafter. It’s denoted as Q(s, a), or Q^π(s, a). With a good estimate of Q, an agent can determine the most rewarding action in each state simply by comparing Q-values, which helps it make better decisions. (With V alone, it would also need a model of where each action leads.)
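The two value functions are linked: under a policy π, the value of a state is the policy-weighted average of its action values, V^π(s) = Σ_a π(a|s) Q^π(s, a). The sketch below uses made-up numbers for a single state with two actions to show both this relation and why Q-values make action selection easy: the best action is simply the one with the highest Q-value.

```python
# Hypothetical numbers for a single state s with two actions.
pi = {"left": 0.25, "right": 0.75}  # π(a|s)
q = {"left": 2.0, "right": 4.0}     # Q^π(s, a)


def state_value(pi, q):
    """V^π(s) = sum over a of π(a|s) * Q^π(s, a)."""
    return sum(pi[a] * q[a] for a in pi)


def best_action(q):
    """Pick the action with the highest Q-value in this state."""
    return max(q, key=q.get)


print(state_value(pi, q))  # 0.25*2.0 + 0.75*4.0 = 3.5
print(best_action(q))      # right
```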

🔍 Q-Function in Reinforcement Learning

The Q-function, also known as the action-value function, is a term you’ll come across often in RL; its outputs are called Q-values. It’s like a rating system for each possible action an agent can take in each possible state. The Q-function takes a state-action pair and returns the expected return (cumulative future reward) when that action is taken in that state and policy π is followed thereafter. It’s denoted as Q(s, a). In value-based methods, the agent’s goal is to find the optimal Q-function, denoted Q*, which gives the maximum expected return achievable from each state-action pair. The optimal policy π* can then be derived from Q* by choosing, in each state, the action with the highest Q-value.
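The article doesn’t specify how Q* is found; one widely used method is tabular Q-learning, which nudges each estimate toward the target r + γ max_a′ Q(s′, a′) after every step. The sketch below applies it to a hypothetical five-cell corridor (reward 1.0 for reaching the right end, 0.0 otherwise), with illustrative settings for the learning rate α, discount γ, and exploration rate ε, and then reads off the greedy policy π*(s) = argmax_a Q(s, a).

```python
import random

N_STATES = 5       # hypothetical corridor: positions 0..4, position 4 is the goal
ACTIONS = [-1, 1]  # move left or move right


def step(state, action):
    """Toy deterministic environment: reward 1.0 on reaching the goal, else 0.0."""
    next_state = min(max(state + action, 0), N_STATES - 1)
    done = next_state == N_STATES - 1
    return next_state, (1.0 if done else 0.0), done


def greedy_action(Q, state, rng):
    """Pick an action with the highest Q-value, breaking ties at random."""
    best = max(Q[(state, a)] for a in ACTIONS)
    return rng.choice([a for a in ACTIONS if Q[(state, a)] == best])


def q_learning(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    """Tabular Q-learning: estimate Q*(s, a) from experience."""
    rng = random.Random(seed)
    Q = {(s, a): 0.0 for s in range(N_STATES) for a in ACTIONS}
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            # Epsilon-greedy: usually exploit current estimates, sometimes explore.
            if rng.random() < epsilon:
                action = rng.choice(ACTIONS)
            else:
                action = greedy_action(Q, state, rng)
            next_state, reward, done = step(state, action)
            # Target = reward + discounted best Q-value of the next state
            # (no future value once the episode has ended).
            best_next = 0.0 if done else max(Q[(next_state, a)] for a in ACTIONS)
            Q[(state, action)] += alpha * (reward + gamma * best_next - Q[(state, action)])
            state = next_state
    return Q


def greedy_policy(Q):
    """π*(s) = argmax_a Q(s, a) for every non-terminal state."""
    return {s: max(ACTIONS, key=lambda a: Q[(s, a)]) for s in range(N_STATES - 1)}


Q = q_learning()
print(greedy_policy(Q))  # should print {0: 1, 1: 1, 2: 1, 3: 1}, i.e. always move right
```

Because moving right reaches the reward soonest, the learned Q-values for `+1` end up higher in every state, so the derived greedy policy is “always move right.” In larger problems the table is typically replaced by a function approximator such as a neural network, but the idea of deriving the policy from Q stays the same.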

🧭 Conclusion

Voila! Congratulations on making it through this whirlwind tour of policy, value function, and Q-function in reinforcement learning. Remember, the policy is the strategy an agent uses to decide its actions, the value function gauges how good it is for the agent to be in a certain state, and the Q-function provides a rating system for each possible action in each state. Each of these elements plays a crucial role in how an agent learns to navigate its environment and achieve its goal. While it may seem complex at first, reinforcement learning is a fascinating field that combines the thrill of exploration with the precision of mathematics. So don’t be intimidated! Keep exploring, keep learning, and remember, each step you take is a step closer to mastering the art and science of machine learning. Happy learning, folks! 🚀
