📌 Let’s explore the topic in depth and see what insights we can uncover.

⚡ “Ever felt torn between sticking to the tried-and-true or daring to try something new? Dive into the epsilon-greedy strategy, a genius approach in AI that navigates this age-old dilemma.”

Do you remember the thrill of being a child, opening a mysterious box of chocolates and having to decide between trying a new flavor or sticking with your all-time favorite? As it turns out, machine learning algorithms face a similar dilemma. They, too, need to balance exploring new options (exploration) against maximizing rewards from known ones (exploitation). One of the most popular approaches to this dilemma is the epsilon-greedy strategy. In this blog post, we’ll dive into reinforcement learning and explore the epsilon-greedy strategy: how it helps algorithms make decisions that balance exploration and exploitation, and how that leads to better learning and performance.

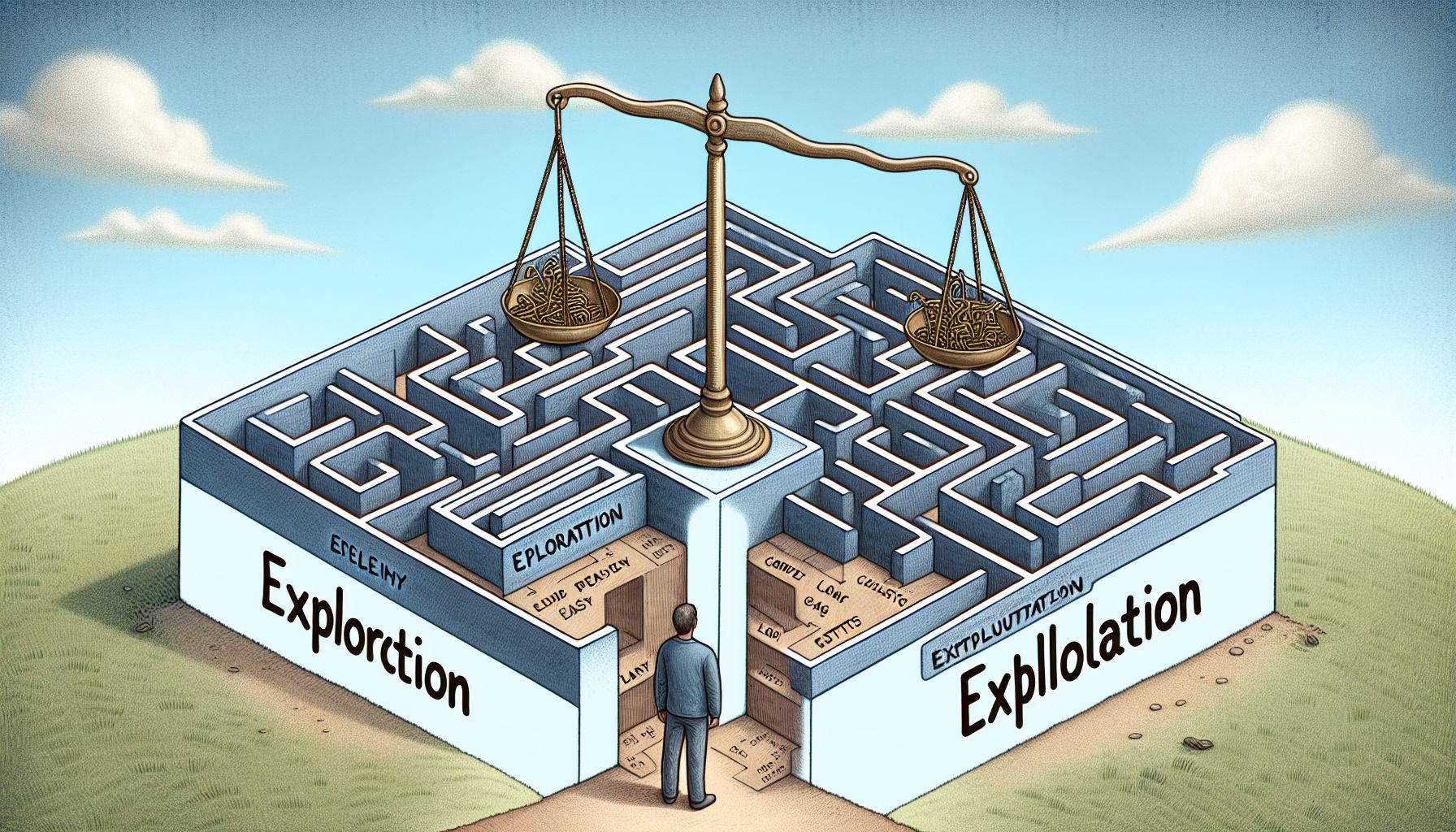

🎯 Understanding the Exploration-Exploitation Trade-off

"Charting Uncharted Territories with Epsilon-Greedy Strategy"

Before we delve into the epsilon-greedy strategy, it is crucial to understand the exploration-exploitation dilemma. Let’s put it in simple terms:

1. Exploration is like wandering into unknown territory. It’s about trying out new things, assessing unknown options, and discovering potential opportunities. The risk? You might stumble upon a pitfall. The reward? You might discover a gold mine. 🌟
2. Exploitation, on the other hand, is akin to sticking with what you know. You’ve found something that works, and you’re milking it for all it’s worth. It’s safe, it’s predictable, and it reliably delivers the reward you already know about. The downside? You might be missing out on something even better. 🏅

In the context of machine learning, the exploration-exploitation trade-off is a critical aspect of reinforcement learning algorithms. At every step, the algorithm has to decide whether to explore new options (which might lead to higher long-term rewards) or exploit known options (which yields the best reward it currently knows of).
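To make the downside of pure exploitation concrete, here is a minimal sketch. It assumes a hypothetical three-armed slot machine with fixed, deterministic payouts (1, 2 and 5) and an agent whose reward estimates all start at zero. Because the first arm it tries pays something positive, a purely greedy agent never has a reason to try the others:

```python
import numpy as np

# Hypothetical deterministic payouts for three arms (an assumption for illustration)
payouts = np.array([1.0, 2.0, 5.0])

estimates = np.zeros(3)  # estimated reward per arm, all start at 0
counts = np.zeros(3)     # how many times each arm was pulled

for step in range(100):
    # Pure exploitation: always pull the arm with the highest current estimate
    arm = int(np.argmax(estimates))
    reward = payouts[arm]
    counts[arm] += 1
    estimates[arm] += (reward - estimates[arm]) / counts[arm]  # running average

print(counts.tolist())  # [100.0, 0.0, 0.0]
```

Arm 2 pays five times as much as arm 0, but without any exploration the agent never finds out.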

💡 Epsilon-Greedy Strategy: An Overview

In steps the epsilon-greedy strategy, a simple but effective approach to handling this exploration-exploitation trade-off. Here’s how it works:

1. The algorithm chooses a small value for epsilon (ε), commonly between 0.01 and 0.1.
2. For each decision it needs to make, it draws a random number uniformly between 0 and 1.
3. If the random number is less than epsilon, the algorithm explores: it chooses an action at random.
4. If the random number is greater than or equal to epsilon, the algorithm exploits: it chooses the action it currently estimates has the highest reward.

In other words, the algorithm mostly exploits the best-known option, but every once in a while (with probability ε), it explores a random option. Because the random choice is made over all actions, the current best one can also be picked during exploration. This way, the epsilon-greedy strategy keeps gathering information about every option while still collecting reward from the best one it knows.
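The rule above implies exact selection probabilities. With k actions and a single best estimate, the greedy action is picked with probability 1 − ε + ε/k (it can win either through exploitation or by chance during exploration), and every other action with probability ε/k. The sketch below assumes NumPy's `Generator` API, three actions with made-up reward estimates and ε = 0.1; the function names `epsilon_greedy_action` and `action_probabilities` are illustrative, not from any library:

```python
import numpy as np

def epsilon_greedy_action(estimates, epsilon, rng):
    """Pick an action index using the epsilon-greedy rule."""
    if rng.random() < epsilon:
        # Explore: any action uniformly at random (the greedy one included)
        return int(rng.integers(len(estimates)))
    # Exploit: the action with the highest estimated reward
    return int(np.argmax(estimates))

def action_probabilities(estimates, epsilon):
    """Exact selection probabilities implied by the rule above."""
    k = len(estimates)
    probs = np.full(k, epsilon / k)              # every action can be drawn while exploring
    probs[np.argmax(estimates)] += 1 - epsilon   # the greedy action also gets the exploit share
    return probs

estimates = np.array([0.2, 0.5, 0.1])  # hypothetical reward estimates for three actions
epsilon = 0.1
rng = np.random.default_rng(0)

print(action_probabilities(estimates, epsilon))  # ≈ [0.0333, 0.9333, 0.0333]

# Empirical check: sample many decisions and compare frequencies
picks = [epsilon_greedy_action(estimates, epsilon, rng) for _ in range(10_000)]
print(np.bincount(picks, minlength=3) / len(picks))
```

With tied estimates, `np.argmax` returns the first of the tied indices, so the whole exploit share goes to that action; some implementations break ties at random instead.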

🎲 Implementing the Epsilon-Greedy Strategy in Python

Let’s see how we can implement this strategy in Python. Here’s a simple implementation for a multi-armed bandit, such as a hypothetical slot machine with three arms, each offering a different (unknown) payout:

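```python
import numpy as np

class EpsilonGreedy:
    def __init__(self, epsilon, counts=None, values=None):
        self.epsilon = epsilon  # probability of exploring on each decision
        self.counts = counts    # number of pulls per arm (set by initialize)
        self.values = values    # estimated (average) reward per arm

    def initialize(self, n_arms):
        # Reset the statistics for a bandit with n_arms arms
        self.counts = np.zeros(n_arms)
        self.values = np.zeros(n_arms)

    def select_arm(self):
        if np.random.random() < self.epsilon:
            # Explore: pick any arm uniformly at random
            return np.random.randint(len(self.values))
        # Exploit: pick the arm with the highest estimated reward
        return int(np.argmax(self.values))

    def update(self, chosen_arm, reward):
        # Record the pull and update the arm's running average reward
        self.counts[chosen_arm] += 1
        n = self.counts[chosen_arm]
        value = self.values[chosen_arm]
        new_value = ((n - 1) / float(n)) * value + (1 / float(n)) * reward
        self.values[chosen_arm] = new_value
```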

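In this code:

- The initialize method resets the pull count and the estimated reward of each of the n_arms arms to zero. Call it before the first select_arm, since counts and values default to None.
- The select_arm method chooses an arm to pull using the epsilon-greedy rule: a random arm with probability epsilon, otherwise the arm with the highest estimated reward.
- The update method increments the pull count of the chosen arm and updates its estimated reward to the running average of all rewards received from that arm.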
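The update formula `((n - 1) / n) * value + (1 / n) * reward` is the incremental form of a sample mean. Rearranged, it reads `value + (reward - value) / n`: the old estimate plus a step of size 1/n toward the new reward. The short sketch below, using a hypothetical list of rewards from a single arm, checks that this update matches the plain average without ever storing the reward history:

```python
import numpy as np

rewards = [1.0, 0.0, 0.0, 1.0, 1.0]  # hypothetical rewards observed from one arm

value, n = 0.0, 0
for r in rewards:
    n += 1
    # Equivalent to ((n - 1) / n) * value + (1 / n) * r from the update method
    value += (r - value) / n

print(np.isclose(value, np.mean(rewards)))  # True
```

This is why the class only needs one count and one value per arm, however many times that arm is pulled.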

🌐 Real-World Applications of Epsilon-Greedy Strategy

The epsilon-greedy strategy is not just a theoretical concept. It has a broad range of real-world applications, especially in areas where decision-making under uncertainty is essential. Here are a few examples:

Online Advertising

The epsilon-greedy strategy can be used to select which ad to display to a user. Most of the time, the algorithm will choose the ad with the highest estimated click-through rate (exploitation), but occasionally (with probability ε), it will explore by showing a randomly chosen ad.
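Here is a minimal simulation of that loop. It assumes three ads with made-up click-through rates and a simulated user who clicks with the ad’s true probability; in a real system the clicks would come from live traffic, and the CTR values, epsilon and impression count here are purely illustrative:

```python
import numpy as np

rng = np.random.default_rng(42)

# Hypothetical true click-through rates (unknown to the algorithm)
true_ctr = np.array([0.02, 0.035, 0.05])
epsilon = 0.1
n_impressions = 20_000

ctr_estimate = np.zeros(3)  # estimated CTR per ad
impressions = np.zeros(3)   # how often each ad was shown

for _ in range(n_impressions):
    if rng.random() < epsilon:
        ad = int(rng.integers(3))             # explore: show a random ad
    else:
        ad = int(np.argmax(ctr_estimate))     # exploit: show the best-looking ad
    clicked = float(rng.random() < true_ctr[ad])  # simulated click: 1.0 or 0.0
    impressions[ad] += 1
    # Running average of clicks for this ad, as in the update method above
    ctr_estimate[ad] += (clicked - ctr_estimate[ad]) / impressions[ad]

print(impressions)   # how often each ad was shown
print(ctr_estimate)  # estimated CTR per ad
```

With rates this close together the estimates are noisy, so which ad ends up shown most can vary between runs. The exploration step is what guarantees every ad keeps receiving at least roughly ε/3 of the impressions, giving a wrong early estimate the chance to be corrected.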

Web Page Design

Web designers can use the epsilon-greedy strategy to test different design elements. Most users will see the current best design, but a few will see a new design, helping to discover if there’s a better option.

Game AI

In games, the epsilon-greedy strategy can be used to balance between following a known strategy (exploitation) and trying out new strategies (exploration).

🧭 Conclusion

The epsilon-greedy strategy provides a simple yet effective solution to the exploration-exploitation dilemma faced by reinforcement learning algorithms. By striking a balance between exploring new options and exploiting known ones, it enables algorithms to learn and adapt effectively in an uncertain environment. While it’s not the only strategy for managing this trade-off (other methods include softmax, UCB, Thompson Sampling, etc.), its simplicity and ease of implementation make it a popular choice.

Plus, the thrill of discovery it brings to machine learning is akin to the joy a child feels when trying a new chocolate from that box. Who knows what delightful surprises might be waiting to be discovered? 🎁

So, next time you’re faced with a decision-making task in machine learning, consider the epsilon-greedy strategy. It’s a small step for your algorithm, but it could be a giant leap for your machine learning performance. 🚀

🤖 Stay tuned as we decode the future of innovation!
