
⚡ “Is your AI model smarter than a fifth-grader? Learn how the three crucial phases of model training - pretraining, fine-tuning, and instruction tuning - can turn your AI into a top-of-class student.”

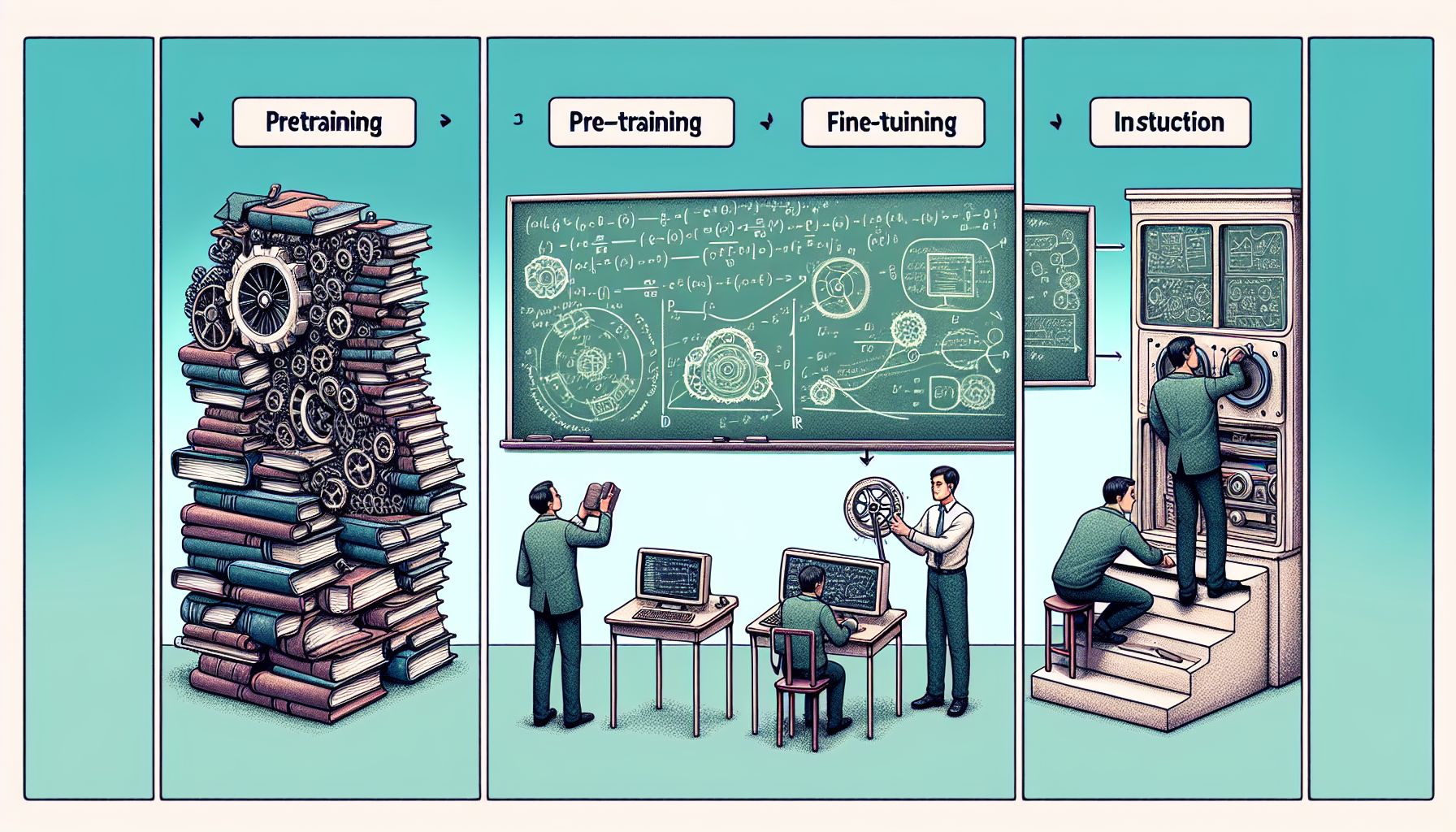

Hello there, fellow data enthusiasts! 🎉 If you’re reading this, you might be embarking on an exciting journey into the heart of machine learning. Or maybe you’re already a seasoned traveler, just stopping by to refresh your memory. Either way, you’ve come to the right place! In this post, we’re going to dig into the three stages of training a machine learning model: pretraining, fine-tuning, and instruction tuning. Model training is like shaping a block of marble into a beautiful statue. 🗿 It might seem daunting at first, but with the right tools and techniques, even the most unyielding chunk of data can be turned into a valuable piece of art. So, grab your sculpting tools, put on your artist’s beret, and let’s get started!

🎨 Pretraining: The Rough Sketch

"Journey through the stages of model training."

Like any great work of art, training a machine learning model starts with a rough sketch: pretraining. The model begins with no useful knowledge and comes out of this stage as a generalist, a jack-of-all-trades but master of none. The goal of pretraining is to teach the model basic, generalizable skills. For example, if we’re training a language model, we start by teaching it the grammar and vocabulary of the language. We do this by feeding the model a large amount of text and letting it learn from the patterns in that text, often by predicting the next word.

Pretraining is usually described as unsupervised learning (more precisely, self-supervised learning): the model finds patterns in the data on its own, without labels supplied by us. It’s like giving a child a bunch of Lego blocks 🧱 and letting them play freely. They might not build a perfect castle right away, but they’ll learn how to fit the blocks together, which is a skill they can use later on.
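To make "learning from the patterns in the data" concrete, here is a deliberately tiny sketch. It is not how real language models are pretrained; it just counts which word follows which in a few made-up sentences. The function names (`pretrain_bigram`, `predict_next`) and the corpus are assumptions invented for this illustration. The point to notice is that nobody hands the model labels: the next word in the text acts as the answer.

```python
from collections import Counter, defaultdict


def pretrain_bigram(corpus):
    """Count which word follows which in raw, unlabeled text."""
    counts = defaultdict(Counter)
    for sentence in corpus:
        tokens = sentence.lower().split()
        # The training signal comes from the text itself:
        # each word serves as the "answer" for the word before it.
        for current, following in zip(tokens, tokens[1:]):
            counts[current][following] += 1
    return counts


def predict_next(counts, word):
    """Return the most frequent follower of `word`, or None if unseen."""
    followers = counts.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


# Made-up toy corpus, standing in for "a large amount of text data".
corpus = [
    "the cat sat on the mat",
    "the cat chased the mouse",
    "the cat slept on the sofa",
]
model = pretrain_bigram(corpus)
print(predict_next(model, "the"))  # cat
```

A real model replaces the counting table with a neural network and the three sentences with billions of words, but the Lego-style free play is the same idea.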

Here are some key points to remember about pretraining:

- It’s the first stage of model training.
- The model learns generalizable skills from a large amount of data.
- Unsupervised learning is used in this stage.

🔍 Fine-Tuning: Adding the Details

After the rough sketch comes the detailing: the fine-tuning stage. Now that our model has learned some basic skills, it’s time to specialize. In this stage, we tailor the model to perform a specific task. Going back to our language model example, let’s say we want it to translate English text into French. In the fine-tuning stage, we would provide the model with examples of English text and their corresponding French translations, and training would adjust the model’s parameters to perform this specific task.

Fine-tuning is like adding the details to our marble statue. We carefully chisel away at the rough edges and add intricate details to make it look like an authentic piece of art. Each stroke of the chisel brings the statue closer to perfection. Fine-tuning uses supervised learning, where we guide the model by providing the correct answers, or labels, for the data. It’s like giving our child a Lego instruction manual 📖 along with the blocks. They still have to put the pieces together, but now they have a clear goal in mind.
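Here is a minimal sketch of what "adjusting parameters on labeled examples" can look like. To keep it runnable without any libraries, a one-feature linear model stands in for the language model, and the "pretrained" starting values and the labeled pairs are made up for illustration. The names `fine_tune` and `mse` are likewise assumptions, not part of any library.

```python
def mse(w, b, examples):
    """Mean squared error of the model y = w * x + b on labeled pairs."""
    return sum(((w * x + b) - y) ** 2 for x, y in examples) / len(examples)


def fine_tune(w, b, examples, lr=0.05, epochs=500):
    """Nudge the starting parameters (w, b) toward the labeled examples
    using plain gradient descent on the mean squared error."""
    n = len(examples)
    for _ in range(epochs):
        grad_w = grad_b = 0.0
        for x, y in examples:
            error = (w * x + b) - y      # how far off the current guess is
            grad_w += 2 * error * x / n
            grad_b += 2 * error / n
        w -= lr * grad_w                 # step against the gradient
        b -= lr * grad_b
    return w, b


# Hypothetical "pretrained" parameters: a general starting point.
pretrained_w, pretrained_b = 1.0, 0.0

# Made-up labeled data for the specific task (generated by y = 2x + 1).
task_examples = [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0), (3.0, 7.0)]

w, b = fine_tune(pretrained_w, pretrained_b, task_examples)
print("error before:", mse(pretrained_w, pretrained_b, task_examples))
print("error after: ", mse(w, b, task_examples))
```

The shape of the loop is the part that carries over to real fine-tuning: start from parameters that already exist, compare predictions against the provided answers, and make small corrections.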

Here are some key points to remember about fine-tuning:

- It’s the second stage of model training.
- The model is tailored to perform a specific task.
- Supervised learning is used in this stage.

🎛️ Instruction Tuning: Perfecting the Masterpiece

Now that we have added the details, it’s time to perfect our sculpture: the instruction tuning stage. In this stage, we refine the model by training it on examples that pair an explicit instruction with the response we want, so that it learns to follow instructions rather than just perform one fixed task. Instruction tuning is like the final touches on our statue. We might polish it to make it shine, correct any minor imperfections, or add some flourishes to make it stand out. It’s all about perfecting the masterpiece.

In practice, instruction tuning is itself a form of supervised fine-tuning, so the usual training safeguards apply: techniques like learning rate scheduling and early stopping help training converge without overfitting the instruction examples.
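As a rough sketch of what "providing specific instructions" can mean in practice, the snippet below turns a few records into prompt and target pairs. The template layout, the `build_example` helper, and the sample records are all assumptions made up for this post; real projects each pick their own format.

```python
# Hypothetical prompt layout; not a standard, just one plausible shape.
PROMPT_TEMPLATE = (
    "### Instruction:\n{instruction}\n\n"
    "### Input:\n{input}\n\n"
    "### Response:\n"
)


def build_example(instruction, input_text, response):
    """Turn one (instruction, input, response) record into a training pair."""
    prompt = PROMPT_TEMPLATE.format(instruction=instruction, input=input_text)
    # The model sees `prompt` and is trained to produce `target`.
    return {"prompt": prompt, "target": response}


# Made-up records covering two different tasks.
records = [
    ("Translate the sentence into French.", "Good morning.", "Bonjour."),
    ("Answer with a single word.", "What color is the sky on a clear day?", "Blue."),
]
dataset = [build_example(*record) for record in records]

print(dataset[0]["prompt"] + dataset[0]["target"])
```

Mixing many kinds of tasks in one dataset is the design choice that matters here: the instruction text, not the training run, tells the model which task it is doing.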

The key points to remember about instruction tuning are:

- It’s the final stage of model training.
- The model’s performance is refined with specific instructions, each paired with the response we want.
- General training techniques like learning rate scheduling and early stopping are often used in this stage.
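Early stopping, mentioned above, is simple enough to sketch in a few lines. This is one common patience-based variant, written from scratch for illustration: the function name `early_stopping`, the `patience` default, and the validation losses are all made up. The idea is to stop once the validation loss has gone a set number of epochs without improving.

```python
def early_stopping(val_losses, patience=2):
    """Return (stop_epoch, best_epoch) for a sequence of validation losses.

    Training stops at the first epoch where the loss has failed to improve
    on the best value seen so far for `patience` consecutive epochs.
    If that never happens, stop_epoch is the last epoch.
    """
    best_loss = float("inf")
    best_epoch = 0
    epochs_without_improvement = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss:
            best_loss = loss
            best_epoch = epoch
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                return epoch, best_epoch
    return len(val_losses) - 1, best_epoch


# Made-up validation losses: improving at first, then drifting upward.
losses = [0.90, 0.70, 0.60, 0.62, 0.65, 0.70]
stop_epoch, best_epoch = early_stopping(losses)
print(stop_epoch, best_epoch)  # 4 2
```

In a real training loop you would typically keep a copy of the parameters from `best_epoch` and restore them when training stops.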

🧭 Conclusion

Model training is an art. Like sculpting a statue from a block of marble, it involves a process of shaping, refining, and perfecting. We start with a rough sketch in the pretraining stage, add details in the fine-tuning stage, and perfect the masterpiece in the instruction tuning stage.

Each stage of model training has its own methods and techniques. Pretraining uses unsupervised learning to teach the model generalizable skills. Fine-tuning uses supervised learning to tailor the model to a specific task. And instruction tuning refines the model’s performance with specific instructions.

So, whether you’re a novice data artist just starting out or a seasoned sculptor looking to refine your skills, remember: model training is a journey. It may seem daunting at first, but with patience and practice, you can turn your chunk of data into a valuable piece of art. Happy sculpting! 🎨🗿
